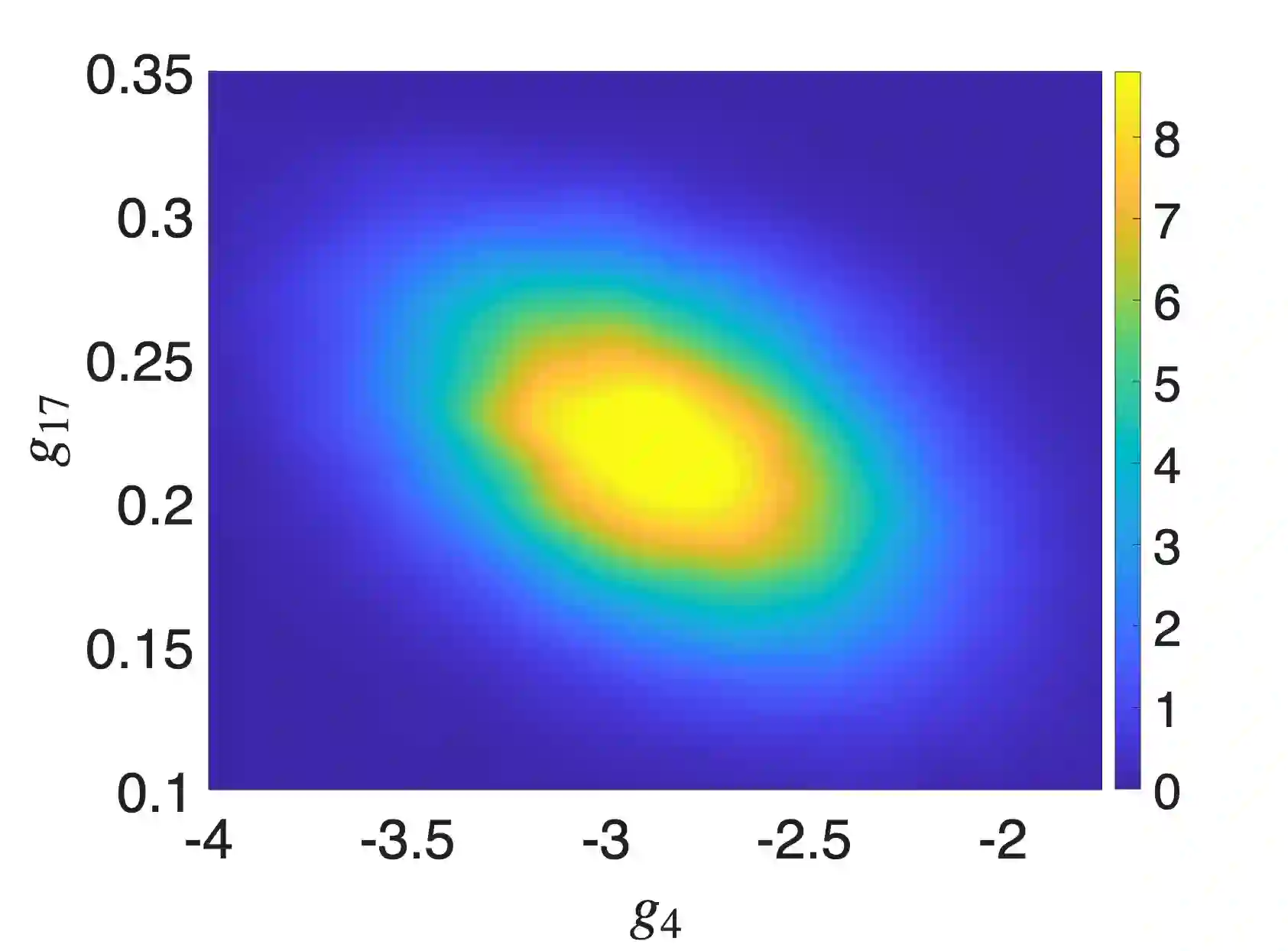

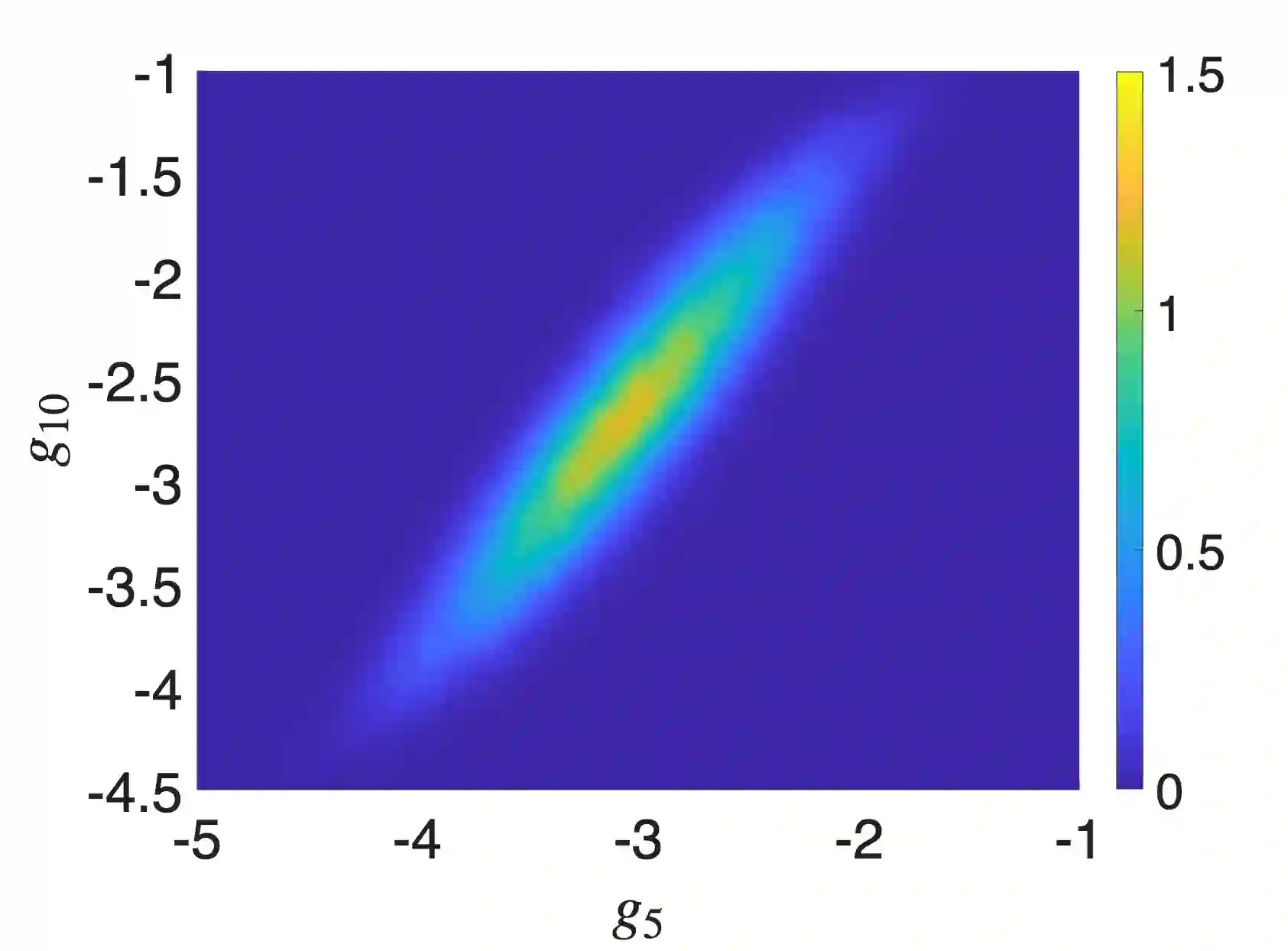

We develop new uncertainty propagation methods for feed-forward neural network architectures with leaky ReLU activation functions subject to random perturbations in the input vectors. In particular, we derive analytical expressions for the probability density function (PDF) of the neural network output and its statistical moments as a function of the input uncertainty and the parameters of the network, i.e., weights and biases. A key finding is that an appropriate linearization of the leaky ReLU activation function yields accurate statistical results even for large perturbations in the input vectors. This can be attributed to the way information propagates through the network. We also propose new analytically tractable Gaussian copula surrogate models to approximate the full joint PDF of the neural network output. To validate our theoretical results, we conduct Monte Carlo simulations and a thorough error analysis on a multi-layer neural network representing a nonlinear integro-differential operator between two polynomial function spaces. Our findings demonstrate excellent agreement between the theoretical predictions and Monte Carlo simulations.
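As a rough illustration of the comparison described above, the following sketch propagates Gaussian input noise through a small leaky ReLU network in two ways: by first-order linearization around the nominal input, and by Monte Carlo sampling. Everything specific here is an assumption made for illustration and is not taken from the paper: the layer sizes, the random weights, the slope `alpha = 0.01`, the noise level `sigma`, and the choice to linearize by freezing each unit's slope at the nominal input. The paper's "appropriate linearization" may be defined differently.

```python
import numpy as np

def leaky_relu(z, alpha=0.01):
    # Slope 1 for positive pre-activations, slope alpha otherwise.
    return np.where(z > 0, z, alpha * z)

def forward(x, weights, biases, alpha=0.01):
    # Evaluate the network on x of shape (n_in,) or (N, n_in).
    # The last layer is affine (no activation); this is an assumption.
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = leaky_relu(h @ W.T + b, alpha)
    return h @ weights[-1].T + biases[-1]

def linearize(x0, weights, biases, alpha=0.01):
    # Freeze each unit's slope at the nominal input x0 and return
    # (y0, J) such that f(x) is approximated by y0 + J @ (x - x0).
    h = x0
    J = np.eye(x0.size)
    for W, b in zip(weights[:-1], biases[:-1]):
        z = W @ h + b
        slope = np.where(z > 0, 1.0, alpha)
        J = slope[:, None] * (W @ J)   # chain rule through this layer
        h = slope * z                  # equals leaky_relu(z)
    return weights[-1] @ h + biases[-1], weights[-1] @ J

# Hypothetical network: 3 inputs, two hidden layers of 8 units, 2 outputs.
rng = np.random.default_rng(0)
sizes = [3, 8, 8, 2]
weights = [rng.normal(size=(m, n)) / np.sqrt(n)
           for n, m in zip(sizes[:-1], sizes[1:])]
biases = [0.1 * rng.normal(size=m) for m in sizes[1:]]

x0 = rng.normal(size=sizes[0])          # nominal input
sigma = 0.1                             # assumed input noise level
cov_in = sigma**2 * np.eye(sizes[0])    # isotropic Gaussian perturbation

# Linearized prediction: Gaussian output with mean y0 and covariance J Σ Jᵀ.
y0, J = linearize(x0, weights, biases)
cov_lin = J @ cov_in @ J.T

# Monte Carlo reference.
samples = x0 + sigma * rng.normal(size=(50_000, sizes[0]))
y_mc = forward(samples, weights, biases)
mean_mc = y_mc.mean(axis=0)
cov_mc = np.cov(y_mc, rowvar=False)

print("mean (linearized):", y0)
print("mean (Monte Carlo):", mean_mc)
print("cov  (linearized):\n", cov_lin)
print("cov  (Monte Carlo):\n", cov_mc)
```

Increasing `sigma` in this sketch gives a quick, informal sense of how far the linearized mean and covariance drift from the sampled ones for this particular random network; it says nothing about the operator-learning network studied in the paper.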
