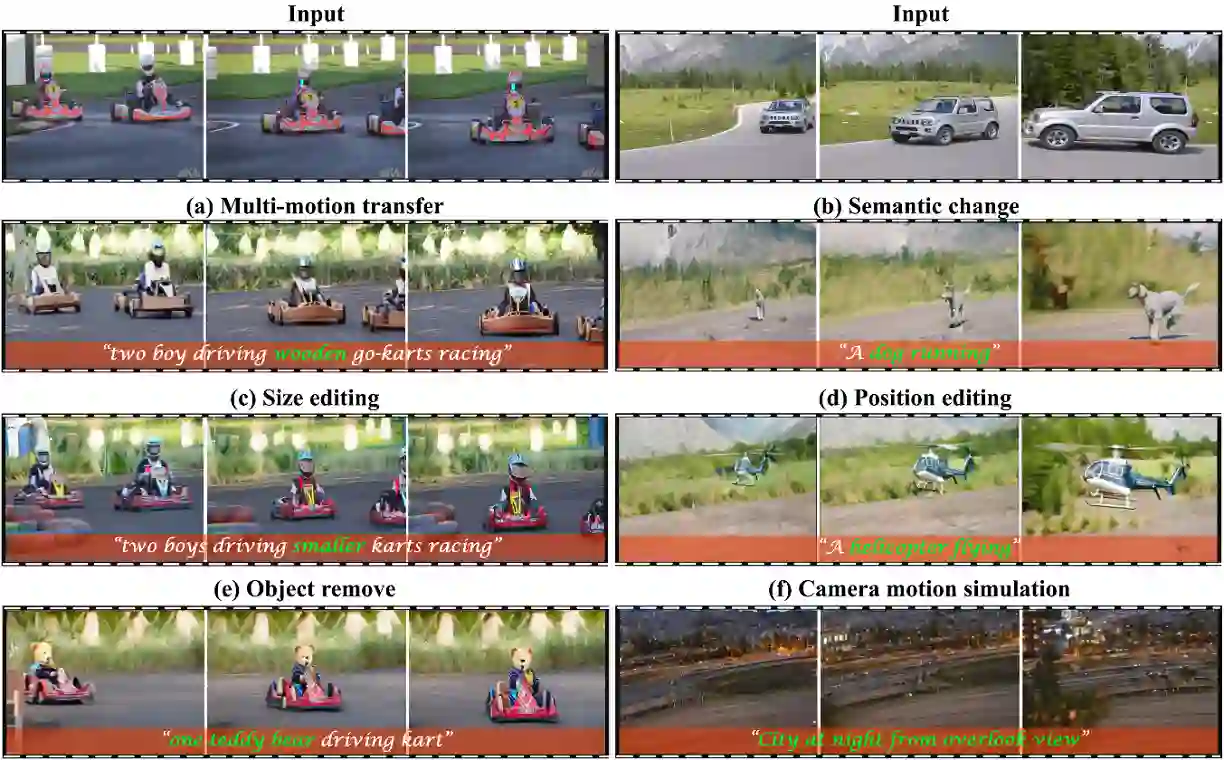

The development of Text-to-Video (T2V) generation has made motion transfer possible, enabling the control of video motion based on existing footage. However, current methods have two limitations: 1) struggle to handle multi-subjects videos, failing to transfer specific subject motion; 2) struggle to preserve the diversity and accuracy of motion as transferring to subjects with varying shapes. To overcome these, we introduce \textbf{ConMo}, a zero-shot framework that disentangle and recompose the motions of subjects and camera movements. ConMo isolates individual subject and background motion cues from complex trajectories in source videos using only subject masks, and reassembles them for target video generation. This approach enables more accurate motion control across diverse subjects and improves performance in multi-subject scenarios. Additionally, we propose soft guidance in the recomposition stage which controls the retention of original motion to adjust shape constraints, aiding subject shape adaptation and semantic transformation. Unlike previous methods, ConMo unlocks a wide range of applications, including subject size and position editing, subject removal, semantic modifications, and camera motion simulation. Extensive experiments demonstrate that ConMo significantly outperforms state-of-the-art methods in motion fidelity and semantic consistency. The code is available at https://github.com/Andyplus1/ConMo.

翻译:文本到视频(T2V)生成技术的发展使得运动迁移成为可能,能够基于现有素材控制视频运动。然而,现有方法存在两个局限性:1) 难以处理多主体视频,无法迁移特定主体的运动;2) 在将运动迁移至不同形状的主体时,难以保持运动的多样性和准确性。为克服这些问题,我们提出了 \textbf{ConMo},一个零样本框架,用于解耦并重组主体运动与摄像机运动。ConMo 仅利用主体掩码,即可从源视频的复杂轨迹中分离出单个主体与背景的运动线索,并将其重组以生成目标视频。该方法能够对不同主体实现更精确的运动控制,并提升多主体场景下的性能。此外,我们在重组阶段提出了软引导机制,通过控制原始运动的保留程度来调整形状约束,从而辅助主体形状适应与语义转换。与先前方法不同,ConMo 解锁了广泛的应用场景,包括主体尺寸与位置编辑、主体移除、语义修改以及摄像机运动模拟。大量实验表明,ConMo 在运动保真度与语义一致性方面显著优于现有最先进方法。代码发布于 https://github.com/Andyplus1/ConMo。