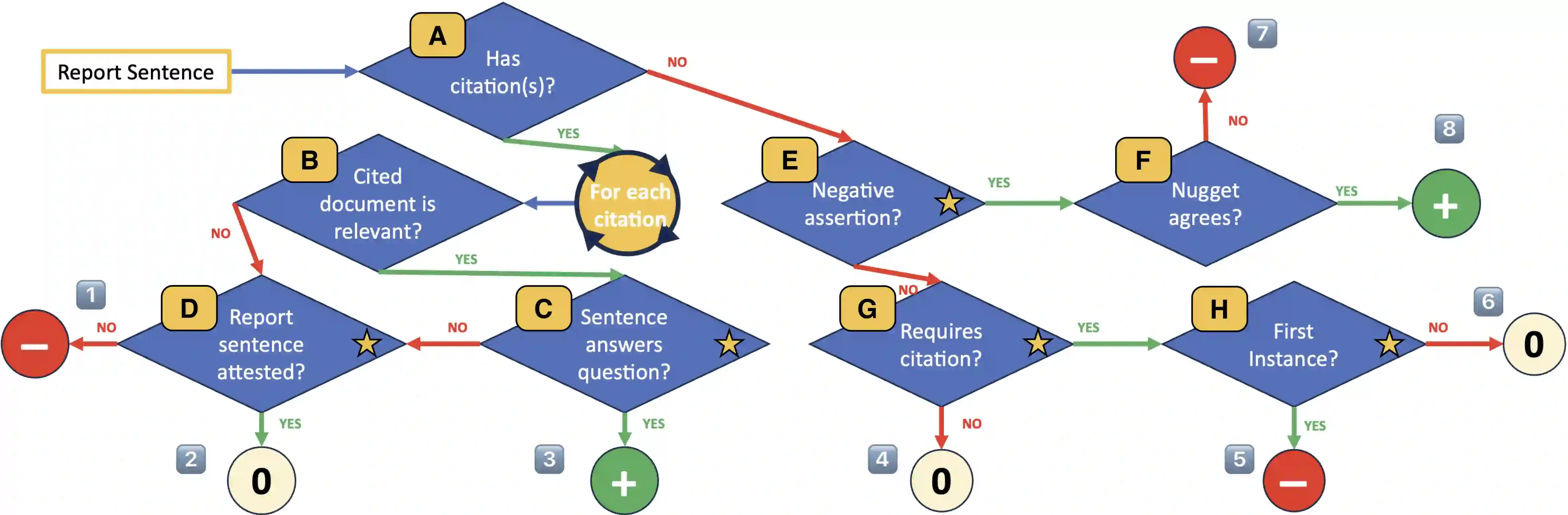

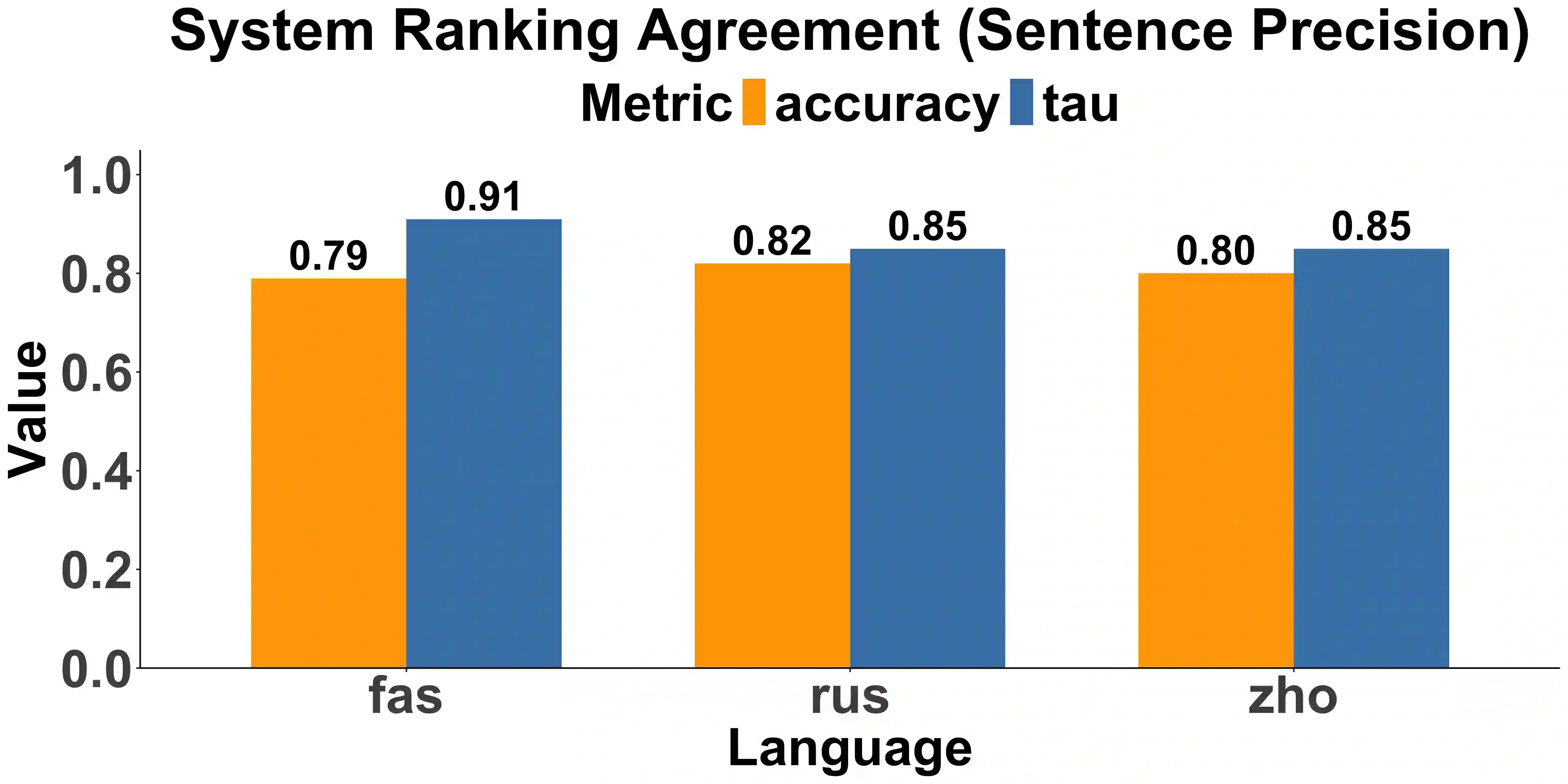

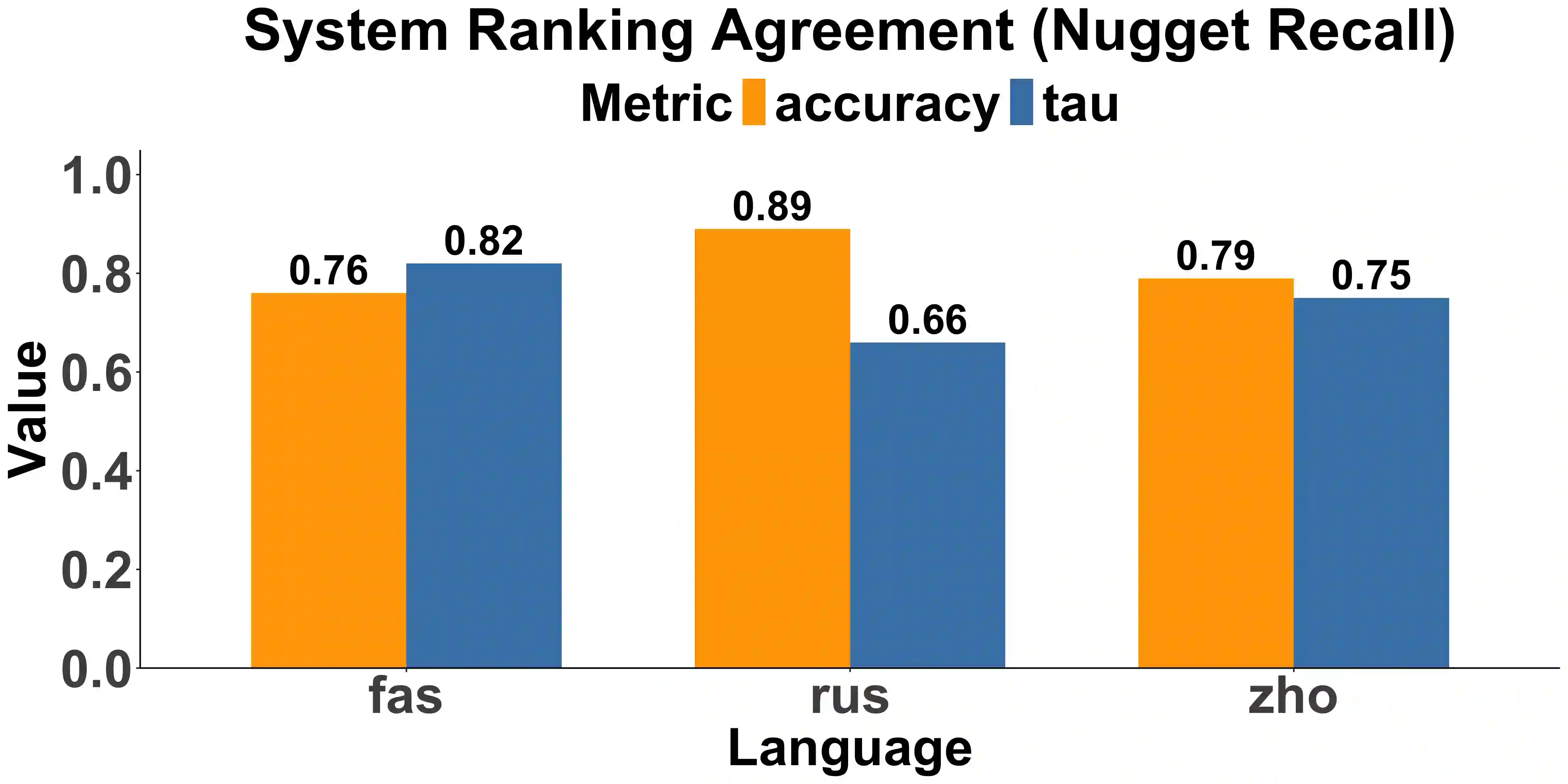

Generation of long-form, citation-backed reports is a primary use case for retrieval-augmented generation (RAG) systems. While open-source evaluation tools exist for various RAG tasks, tools tailored to report generation are lacking. Accordingly, we introduce Auto-ARGUE, a robust LLM-based implementation of the recent ARGUE framework for report generation evaluation. We present an analysis of Auto-ARGUE on the report generation pilot task from the TREC 2024 NeuCLIR track, showing good system-level correlations with human judgments. We further release a web app for visualizing Auto-ARGUE outputs.
