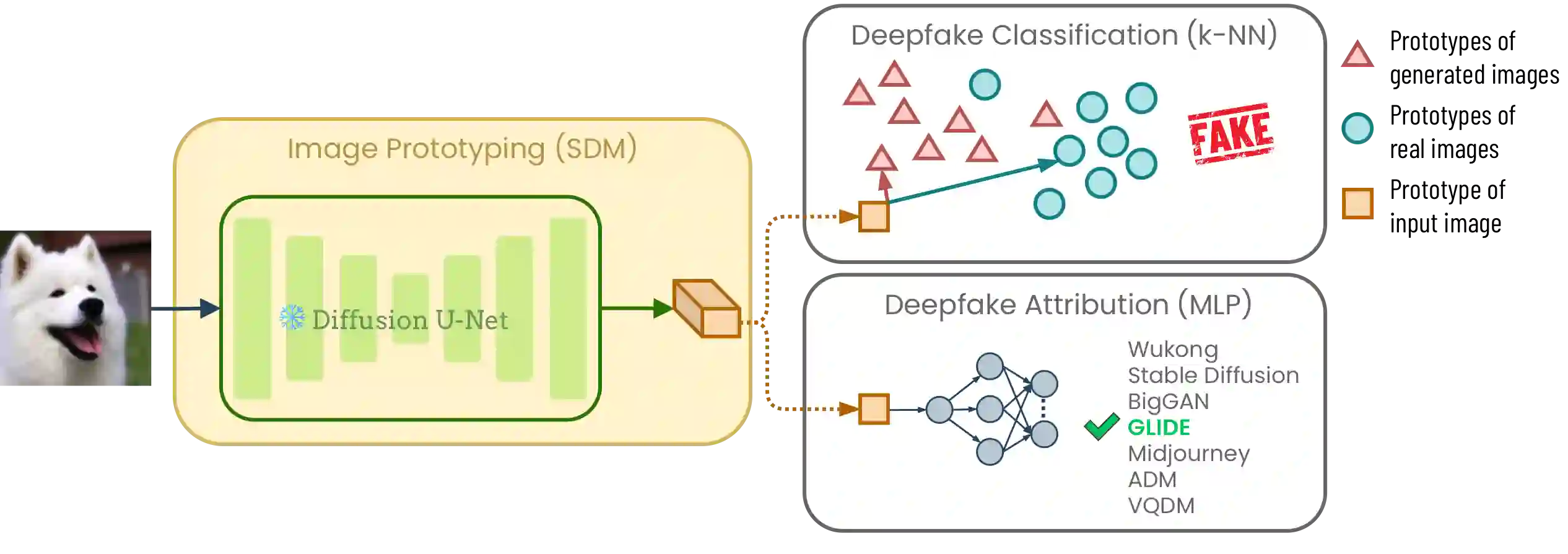

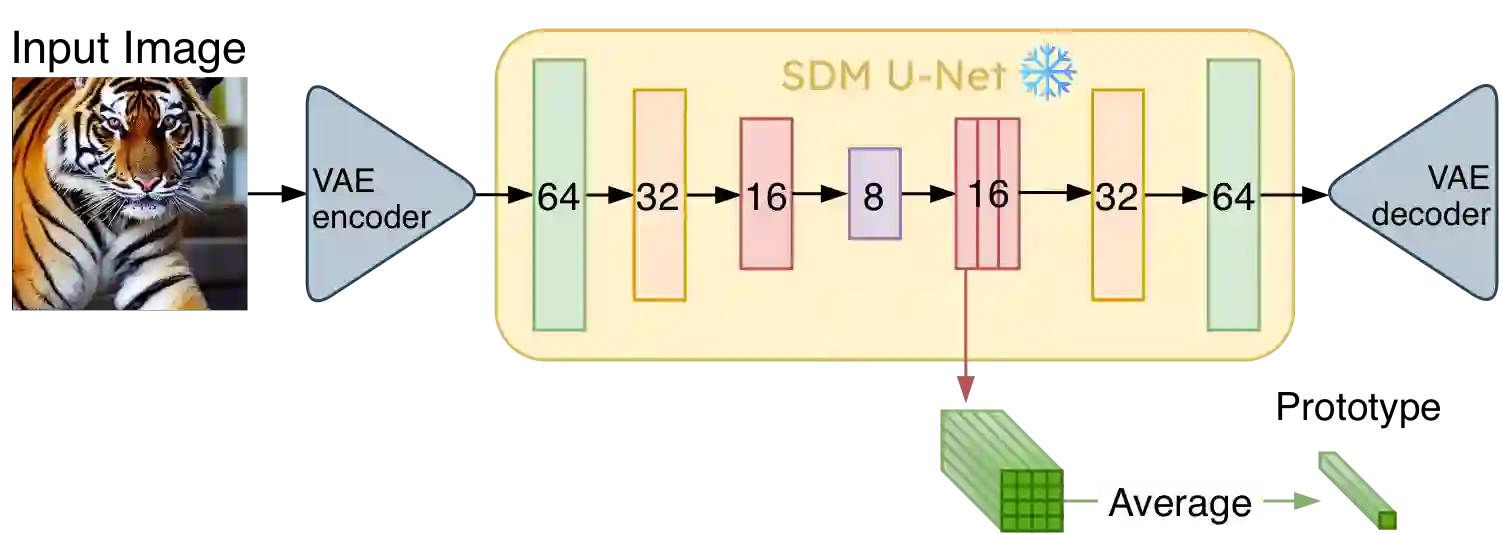

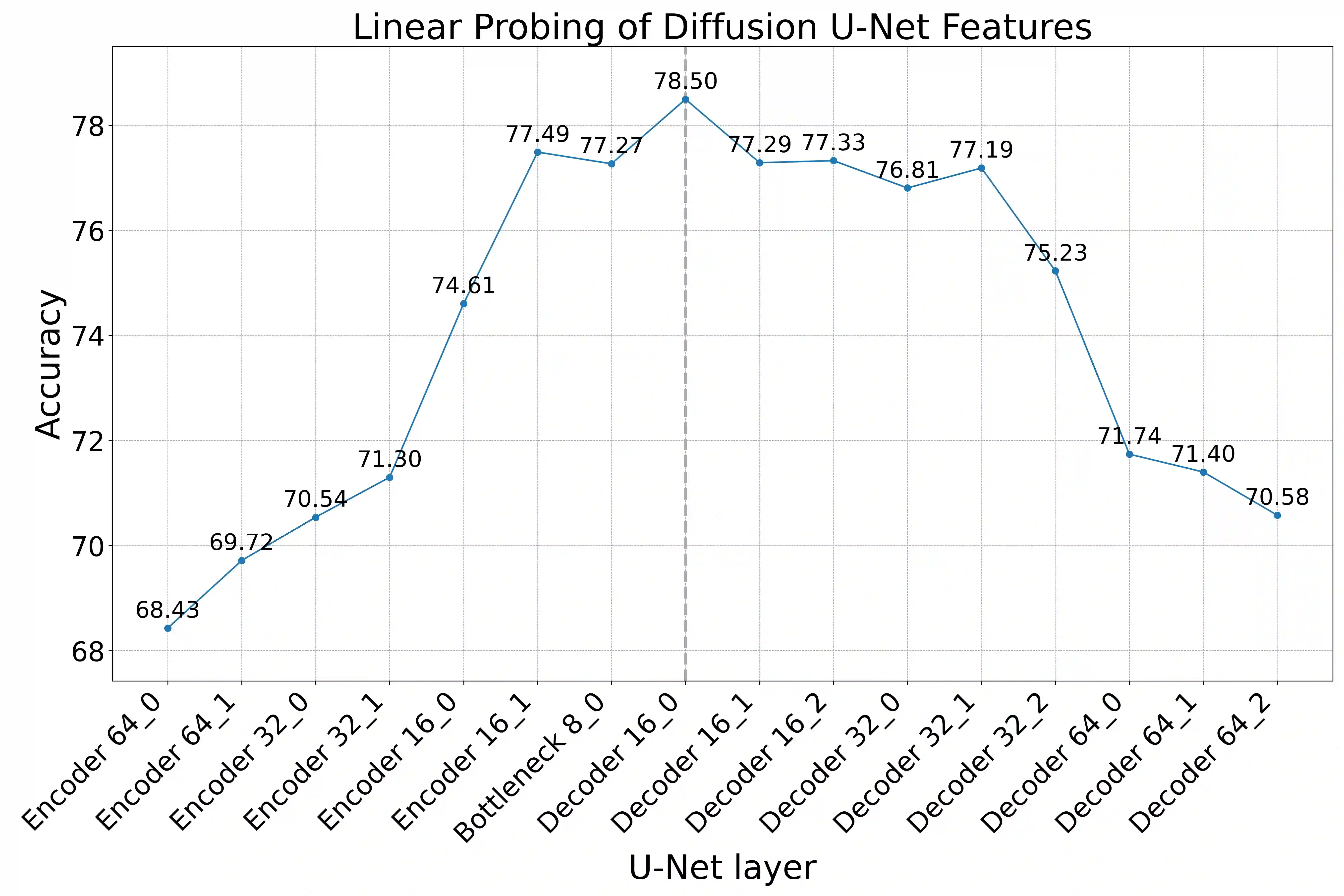

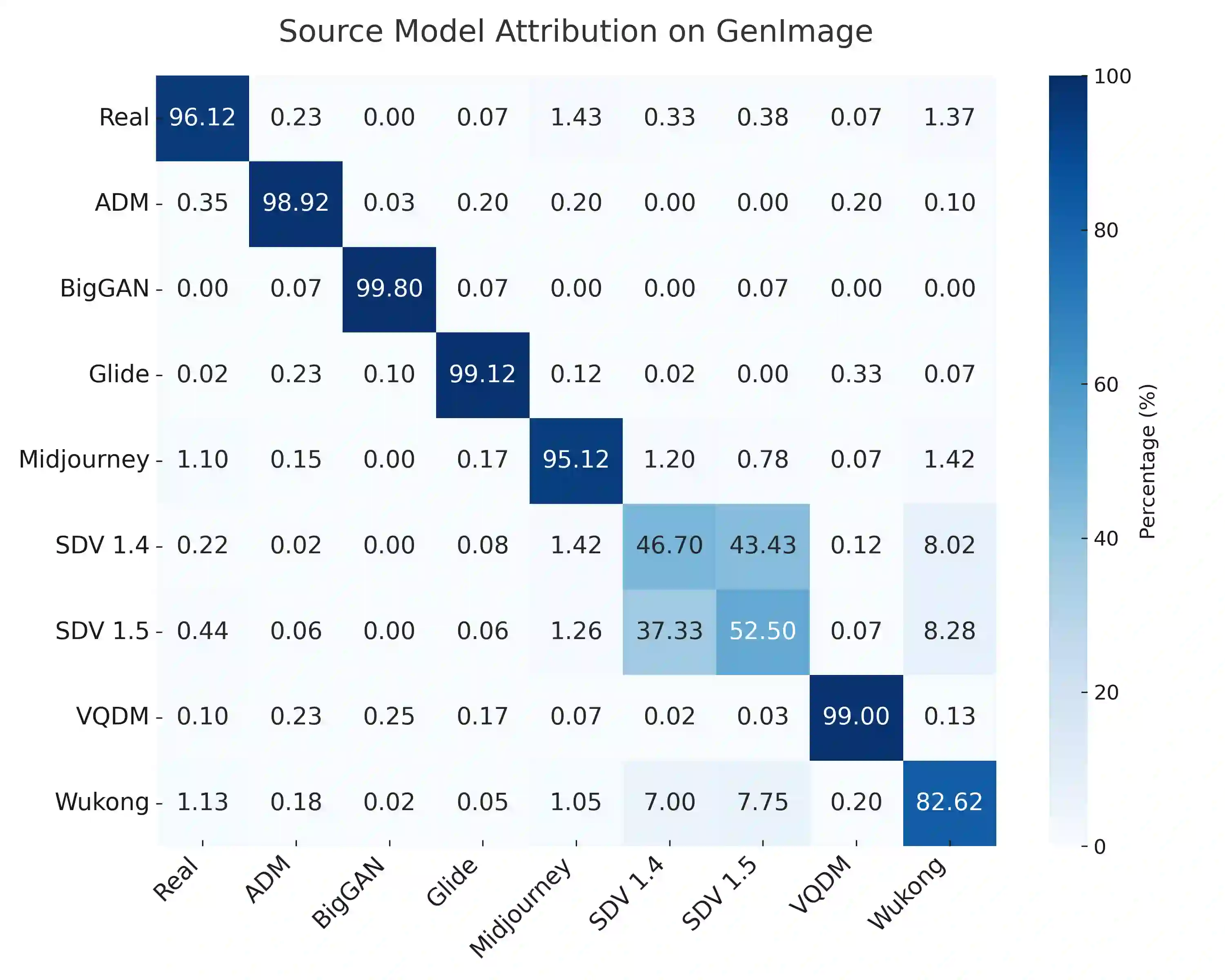

The rapid progress of generative diffusion models has enabled the creation of synthetic images that are increasingly difficult to distinguish from real ones, raising concerns about authenticity, copyright, and misinformation. Existing supervised detectors often fail to generalize to unseen generators and require extensive labeled data and frequent retraining. We introduce FRIDA (Fake-image Recognition and source Identification via Diffusion-features Analysis), a lightweight framework that leverages internal activations of a pre-trained diffusion model for both deepfake detection and source-generator attribution. A k-nearest-neighbor classifier applied to these diffusion features achieves state-of-the-art cross-generator detection performance without any fine-tuning, while a compact neural model enables accurate source attribution. These results show that diffusion representations inherently encode generator-specific patterns, providing a simple and interpretable foundation for synthetic-image forensics.
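
The detection step described above (a k-nearest-neighbor classifier over diffusion features) can be illustrated with a minimal sketch. It assumes each image has already been mapped to a fixed-length feature vector extracted from the pre-trained diffusion model; which layers, timesteps, and pooling produce that vector are not specified here, so the toy 2-D vectors below are purely hypothetical stand-ins. The choice of cosine similarity, majority voting, and `k=3` are likewise our assumptions, not necessarily FRIDA's exact configuration, and the helper names `l2_normalize` and `knn_predict` are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence

Vector = Sequence[float]


def l2_normalize(v: Vector) -> List[float]:
    """Scale a feature vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        # Degenerate all-zero vector: leave it as zeros rather than dividing by zero.
        return [0.0 for _ in v]
    return [x / norm for x in v]


def knn_predict(
    train_feats: Sequence[Vector],
    train_labels: Sequence[str],
    query: Vector,
    k: int = 3,
) -> str:
    """Label a query feature vector by majority vote among its k most similar
    training vectors (cosine similarity). Assumes features were extracted
    beforehand from a frozen diffusion model; no training or fine-tuning happens here."""
    if k < 1:
        raise ValueError("k must be at least 1")
    if len(train_feats) != len(train_labels):
        raise ValueError("train_feats and train_labels must have the same length")

    q = l2_normalize(query)
    scored = []
    for feat, label in zip(train_feats, train_labels):
        f = l2_normalize(feat)
        similarity = sum(a * b for a, b in zip(q, f))
        scored.append((similarity, label))

    # Most similar neighbors first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    votes = Counter(label for _, label in scored[:k])
    # On a tied vote, Counter keeps first-seen order, so the label of the
    # closest tied neighbor wins.
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical toy features: "real" images cluster along the first axis,
    # "fake" images along the second.
    feats = [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0], [0.1, 1.0], [0.2, 0.9], [0.0, 1.0]]
    labels = ["real", "real", "real", "fake", "fake", "fake"]
    print(knn_predict(feats, labels, [0.95, 0.05], k=3))
    print(knn_predict(feats, labels, [0.05, 0.95], k=3))
```

Because the "training" step is just storing labeled feature vectors, adding examples from a newly released generator only requires extracting their features and appending them to the reference set, with no retraining. This matches the abstract's point about avoiding frequent retraining. Source attribution in FRIDA is handled by a compact neural model rather than this k-NN vote, and that model is not sketched here.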

翻译:生成式扩散模型的快速发展使得合成图像越来越难以与真实图像区分,引发了关于真实性、版权和虚假信息的担忧。现有的监督检测器通常难以泛化至未见过的生成器,需要大量标注数据和频繁的重新训练。我们提出了FRIDA(基于扩散特征分析的伪造图像识别与来源鉴定),这是一个轻量级框架,利用预训练扩散模型的内部激活特征进行深度伪造检测和生成器来源追溯。对扩散特征应用k近邻分类器,无需微调即可实现最先进的跨生成器检测性能,而紧凑的神经网络模型能够实现精确的来源追溯。这些结果表明,扩散表示本质上编码了生成器特定的模式,为合成图像取证提供了简单且可解释的基础。