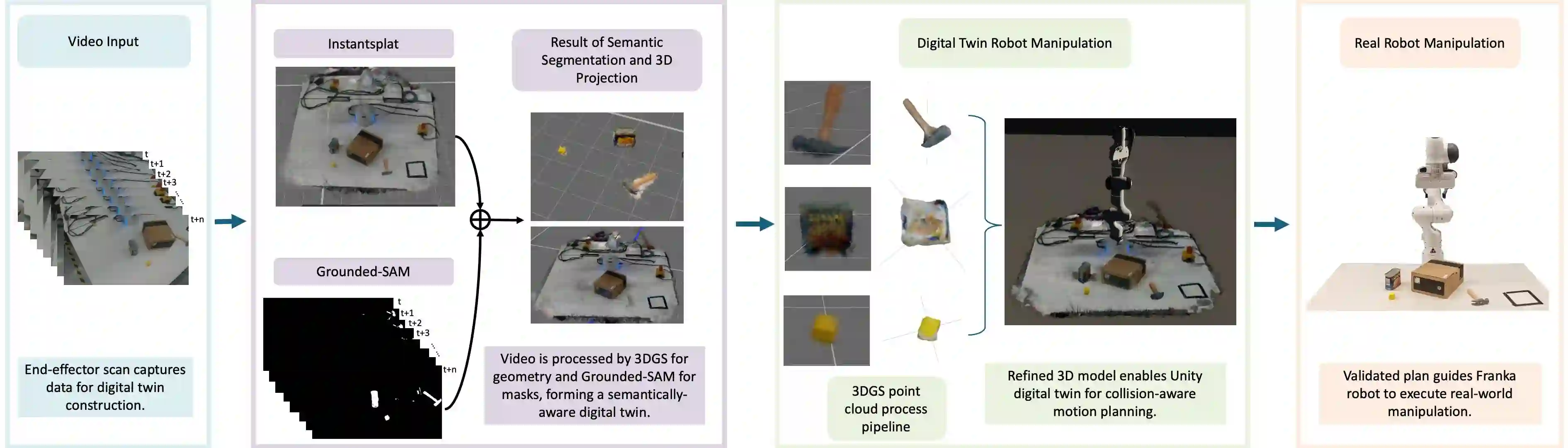

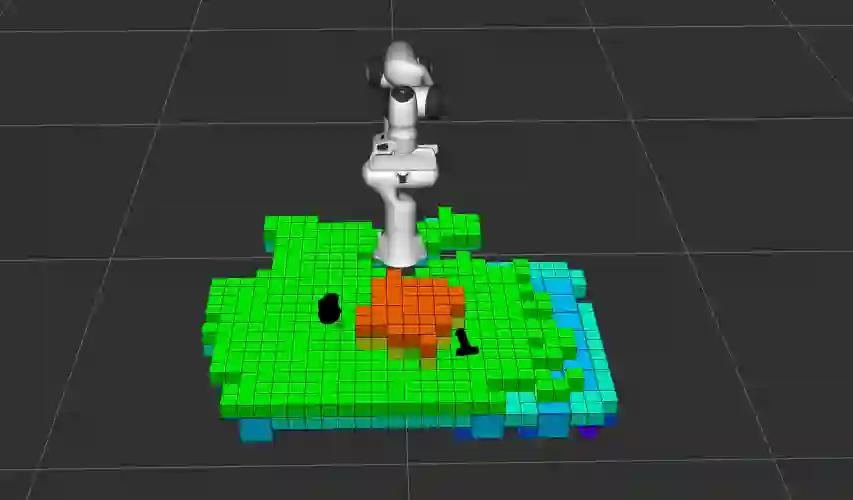

Developing high-fidelity, interactive digital twins is crucial for closed-loop motion planning and reliable real-world robot execution, both of which are essential to advancing sim-to-real transfer. However, existing approaches often suffer from slow reconstruction, limited visual fidelity, and difficulty converting photorealistic models into planning-ready collision geometry. We present a practical framework that constructs high-quality digital twins within minutes from sparse RGB inputs. Our system uses 3D Gaussian Splatting (3DGS) as a unified scene representation for fast, photorealistic reconstruction. We enhance 3DGS with visibility-aware semantic fusion for accurate 3D labelling and introduce an efficient, filter-based geometry conversion method that produces collision-ready models integrated seamlessly with a Unity-ROS2-MoveIt physics engine. In experiments with a Franka Emika Panda robot performing pick-and-place tasks, we show that the resulting geometric accuracy supports robust manipulation in real-world trials. These results indicate that 3DGS-based digital twins, enriched with semantic and geometric consistency, offer a fast, reliable, and scalable path from perception to manipulation in unstructured environments.
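
To make the idea of filter-based geometry conversion concrete, the following is a minimal sketch of one plausible approach: drop Gaussians that are too transparent or too large to represent a surface, then snap the remaining centers to a voxel grid whose cells could serve as box collision primitives. The abstract does not specify the actual filters, so the function name `gaussians_to_collision_voxels`, the opacity and scale criteria, and all threshold values are assumptions chosen for illustration only.

```python
import numpy as np


def gaussians_to_collision_voxels(centers, opacities, scales,
                                  min_opacity=0.5, max_scale=0.05,
                                  voxel_size=0.02):
    """Filter Gaussians, then quantize the survivors to voxel centers.

    centers:   (N, 3) Gaussian means in metres
    opacities: (N,)   opacity per Gaussian, in [0, 1]
    scales:    (N, 3) per-axis extent of each Gaussian in metres
    All thresholds are hypothetical defaults, not values from the paper.
    """
    centers = np.asarray(centers, dtype=float)
    opacities = np.asarray(opacities, dtype=float)
    scales = np.asarray(scales, dtype=float)

    # Keep Gaussians that are opaque enough and compact enough
    # to plausibly lie on a solid surface.
    keep = (opacities >= min_opacity) & (scales.max(axis=1) <= max_scale)

    # Map surviving centers to integer voxel indices and deduplicate.
    idx = np.floor(centers[keep] / voxel_size).astype(np.int64)
    idx = np.unique(idx, axis=0)

    # Return the center of each occupied voxel.
    return (idx + 0.5) * voxel_size
```

Each returned voxel center could then be exported as a fixed-size box to whatever collision representation the planner consumes. A voxel grid is used here only because it is simple to reason about; a mesh or convex-hull conversion would be an equally valid reading of "collision-ready".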
