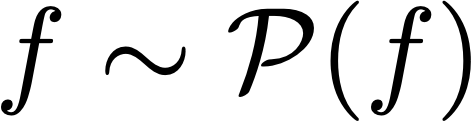

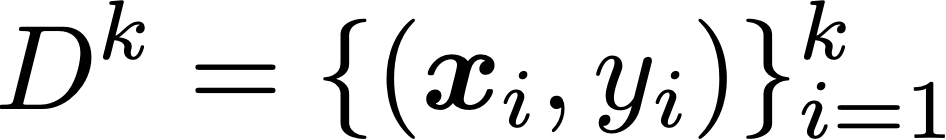

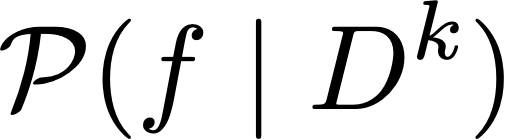

This paper develops a finite-sample statistical theory for in-context learning (ICL), analyzed within a meta-learning framework that accommodates mixtures of diverse task types. We introduce a principled risk decomposition that separates the total ICL risk into two orthogonal components: Bayes Gap and Posterior Variance. The Bayes Gap quantifies how well the trained model approximates the Bayes-optimal in-context predictor. For a uniform-attention Transformer, we derive a non-asymptotic upper bound on this gap, which explicitly clarifies the dependence on the number of pretraining prompts and their context length. The Posterior Variance is a model-independent risk representing the intrinsic task uncertainty. Our key finding is that this term is determined solely by the difficulty of the true underlying task, while the uncertainty arising from the task mixture vanishes exponentially fast with only a few in-context examples. Together, these results provide a unified view of ICL: the Transformer selects the optimal meta-algorithm during pretraining and rapidly converges to the optimal algorithm for the true task at test time.
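The abstract does not state the decomposition formula, so the following is only a sketch of how such a split typically arises, assuming squared-error loss. The symbols are illustrative assumptions rather than the paper's notation: $D_n$ denotes the prompt ($n$ in-context examples plus the query), $y$ the target, $\hat f$ the trained model, and $f^\star(D_n) = \mathbb{E}[y \mid D_n]$ the Bayes-optimal in-context predictor.

$$
\underbrace{\mathbb{E}\big[(y - \hat f(D_n))^2\big]}_{\text{total ICL risk}}
= \underbrace{\mathbb{E}\big[(\hat f(D_n) - f^\star(D_n))^2\big]}_{\text{Bayes Gap}}
+ \underbrace{\mathbb{E}\big[(y - f^\star(D_n))^2\big]}_{\text{Posterior Variance}}
$$

The cross term $2\,\mathbb{E}\big[(\hat f(D_n) - f^\star(D_n))\,(f^\star(D_n) - y)\big]$ vanishes because $\mathbb{E}[\,y - f^\star(D_n) \mid D_n\,] = 0$, which is the sense in which the two components are orthogonal. Under this assumed form the second term equals $\mathbb{E}[\mathrm{Var}(y \mid D_n)]$ and does not involve $\hat f$, consistent with its description as model-independent. The paper's exact definitions (for example, whether observation noise is counted inside the second term) may differ.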
