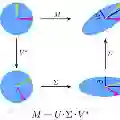

Post-training quantization (PTQ) is considered an effective way to reduce the size of large language models (LLMs) and accelerate their inference. However, existing low-rank PTQ methods rely on costly fine-tuning to settle on a compromise rank that must serve diverse data and layers, so they fail to exploit the full potential of low-rank approximation in large models. In addition, the SVD-based low-rank approximation they use adds further computational overhead. In this work, we analyze in detail how the effectiveness of low-rank approximation varies across the layers of representative models. Based on this analysis, we introduce Flexible Low-Rank Quantization (FLRQ), a method that quickly identifies the accuracy-optimal rank for each layer and aggregates these ranks into the combination with the smallest storage cost. FLRQ has two components: Rank1-Sketch-based Flexible Rank Selection (R1-FLR) and Best Low-rank Approximation under Clipping (BLC). R1-FLR uses R1-Sketch with Gaussian projection for fast low-rank approximation, enabling outlier-aware rank extraction for each layer. BLC iteratively minimizes the low-rank quantization error under the scaling and clipping strategy. Comprehensive experiments demonstrate the effectiveness and robustness of FLRQ, which achieves state-of-the-art performance in both quantization quality and algorithmic efficiency.
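To make the idea of sketch-based rank selection concrete, here is a minimal NumPy sketch of greedy rank-1 extraction from a Gaussian projection. It is not FLRQ's R1-Sketch: the power-iteration refinement, the stopping rule, and the names `r1_sketch`, `flexible_rank`, `max_rank`, and `min_gain` are all hypothetical assumptions. Each step projects the current residual onto one Gaussian vector, refines the direction with a couple of power iterations, removes that rank-1 component, and stops once the next rank would reduce the relative error by less than `min_gain`. This gives each layer its own rank without computing an SVD.

```python
import numpy as np


def r1_sketch(residual, rng, power_iters=2):
    """Rank-1 approximation of `residual` from a single Gaussian projection.

    Hypothetical sketch: returns (u, v) with ||u|| = 1 and residual ~= outer(u, v).
    """
    g = rng.standard_normal(residual.shape[1])  # Gaussian test vector
    u = residual @ g
    for _ in range(power_iters):  # power iterations sharpen the dominant direction
        u = residual @ (residual.T @ u)
    norm = np.linalg.norm(u)
    if norm == 0.0:
        return np.zeros(residual.shape[0]), np.zeros(residual.shape[1])
    u = u / norm
    v = residual.T @ u  # least-squares optimal v for this fixed unit vector u
    return u, v


def flexible_rank(W, max_rank=32, min_gain=1e-2, seed=0):
    """Greedily add rank-1 components until the relative-error gain becomes small.

    `max_rank` and `min_gain` are hypothetical knobs, not FLRQ parameters.
    Returns (U, V, errors) with W ~= U @ V.T; errors[i] is the relative
    Frobenius error after i components.
    """
    rng = np.random.default_rng(seed)
    residual = W.astype(np.float64).copy()
    total = np.linalg.norm(W)
    us, vs, errors = [], [], [1.0]
    for _ in range(max_rank):
        u, v = r1_sketch(residual, rng)
        candidate = residual - np.outer(u, v)
        err = np.linalg.norm(candidate) / total
        if errors[-1] - err < min_gain:
            break  # one more rank would buy too little accuracy for its storage
        residual = candidate
        us.append(u)
        vs.append(v)
        errors.append(err)
    U = np.stack(us, axis=1) if us else np.zeros((W.shape[0], 0))
    V = np.stack(vs, axis=1) if vs else np.zeros((W.shape[1], 0))
    return U, V, errors
```

Because each extracted `u` is orthogonal to the ones before it, the error never increases. On a weight with a few strong directions, the loop stops at roughly that many ranks, so layers with different spectra naturally receive different ranks.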

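The BLC objective can be illustrated by alternating between quantization and low-rank correction. The following is a minimal sketch under stated assumptions and does not reproduce the paper's method. It uses signed per-row uniform quantization, picks a clipping ratio from a hypothetical grid, and refits the low-rank term with a truncated SVD (Eckart-Young). The SVD is a simplification for brevity, even though the abstract identifies SVD cost as something FLRQ aims to avoid. The names `quantize_rows`, `best_clip_quantize`, `truncated_lowrank`, and `blc_sketch` are illustrative only.

```python
import numpy as np


def quantize_rows(X, bits, clip_ratio):
    """Signed per-row uniform quantization with the range clipped to clip_ratio * max|row|."""
    qmax = 2 ** (bits - 1) - 1
    scale = clip_ratio * np.abs(X).max(axis=1, keepdims=True) / qmax
    scale = np.where(scale == 0.0, 1.0, scale)  # avoid division by zero on all-zero rows
    q = np.clip(np.round(X / scale), -qmax - 1, qmax)
    return q * scale  # dequantized values


def best_clip_quantize(X, bits, ratios):
    """Choose the clipping ratio from a small grid that minimizes the Frobenius error."""
    candidates = [quantize_rows(X, bits, r) for r in ratios]
    errs = [np.linalg.norm(X - c) for c in candidates]
    return candidates[int(np.argmin(errs))]


def truncated_lowrank(X, rank):
    """Best rank-`rank` approximation of X in the Frobenius norm, via SVD."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    return (U[:, :rank] * s[:rank]) @ Vt[:rank]


def blc_sketch(W, rank, bits=4, iters=5, ratios=(1.0, 0.95, 0.9, 0.85, 0.8)):
    """Alternate clipped quantization of W - L with a rank-`rank` fit of W - Q.

    Returns the (Q, L) pair with the lowest relative error seen, plus the
    per-iteration relative errors ||W - Q - L||_F / ||W||_F.
    """
    W = W.astype(np.float64)
    L = np.zeros_like(W)
    best = None
    history = []
    for _ in range(iters):
        Q = best_clip_quantize(W - L, bits, ratios)  # quantize what the low-rank term misses
        L = truncated_lowrank(W - Q, rank)  # low-rank correction of the quantization error
        err = np.linalg.norm(W - Q - L) / np.linalg.norm(W)
        history.append(err)
        if best is None or err < best[0]:
            best = (err, Q, L)
    return best[1], best[2], history
```

In this sketch, the first iteration already guarantees that the combined error is no larger than plain clipped quantization. Given `Q`, the SVD step is optimal, and `L = 0` is always a feasible rank-`rank` choice. Later iterations let the clipping adapt to the part of `W` that the low-rank term does not cover.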