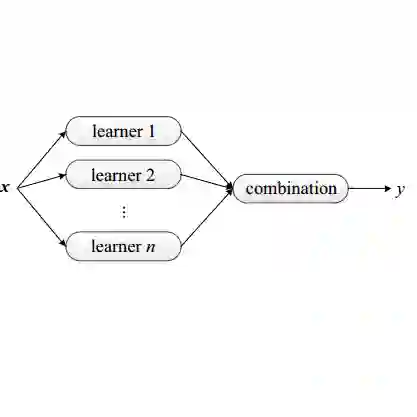

This paper develops the algorithmic and dynamical foundations of recursive ensemble learning driven by Fibonacci-type update flows. In contrast with classical boosting (Freund and Schapire, 1997; Friedman, 2001), where the ensemble evolves through first-order additive updates, we study second-order recursive architectures in which each predictor depends on its two immediate predecessors. These Fibonacci flows induce learning dynamics with memory, allowing ensembles to integrate past structure while adapting to new residual information. We introduce a general family of recursive weight-update algorithms encompassing Fibonacci, tribonacci, and higher-order recursions, together with their continuous-time limits, which yield systems of differential equations governing ensemble evolution. We establish global convergence conditions, spectral stability criteria, and non-asymptotic generalization bounds via Rademacher complexity (Bartlett and Mendelson, 2002) and algorithmic stability analyses. The resulting theory unifies the recursive-ensemble, structured-weighting, and dynamical-systems viewpoints in statistical learning. Experiments with kernel ridge regression (Rasmussen and Williams, 2006), spline smoothers (Wahba, 1990), and random Fourier feature models (Rahimi and Recht, 2007) show that recursive flows consistently improve approximation and generalization over static weighting. These results complete the trilogy begun in Papers I and II: from Fibonacci weighting, through geometric weighting theory, to fully dynamical recursive ensemble learning systems.
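To make the recursive weighting family concrete, here is a minimal Python sketch under stated assumptions. It assumes the ensemble weights follow the plain order-k recursion w_t = w_{t-1} + ... + w_{t-k}, seeded with ones and normalized to sum to one (k = 2 gives Fibonacci weights, k = 3 tribonacci weights). The second function is a generic linear-recursion stability check, not necessarily the spectral criterion used in this paper: a recursion x_t = c_1 x_{t-1} + ... + c_k x_{t-k} decays to zero exactly when its companion matrix has spectral radius below one. Both function names, `kbonacci_weights` and `companion_spectral_radius`, are hypothetical illustrations rather than the paper's algorithms.

```python
import numpy as np


def kbonacci_weights(n: int, k: int = 2) -> np.ndarray:
    """Hypothetical helper: n normalized weights from the order-k recursion
    w_t = w_{t-1} + ... + w_{t-k}, seeded with w_0 = ... = w_{k-1} = 1.

    k = 2 yields Fibonacci weights, k = 3 tribonacci weights.
    """
    if n < 1 or k < 1:
        raise ValueError("n and k must be positive integers")
    w = [1.0] * min(n, k)          # seed values
    while len(w) < n:
        w.append(sum(w[-k:]))      # each weight sums its k predecessors
    arr = np.asarray(w)
    return arr / arr.sum()         # normalize so the weights sum to one


def companion_spectral_radius(coeffs) -> float:
    """Hypothetical helper: spectral radius of the companion matrix of
    x_t = c_1 x_{t-1} + ... + c_k x_{t-k}, with coeffs = [c_1, ..., c_k].

    The recursion is asymptotically stable iff the returned value is < 1.
    """
    k = len(coeffs)
    C = np.zeros((k, k))
    C[0, :] = coeffs               # first row holds the recursion coefficients
    C[1:, :-1] = np.eye(k - 1)     # sub-diagonal shifts the state by one step
    return float(np.max(np.abs(np.linalg.eigvals(C))))


if __name__ == "__main__":
    print(kbonacci_weights(6, k=2))             # Fibonacci weights
    print(companion_spectral_radius([1, 1]))    # raw Fibonacci recursion
    print(companion_spectral_radius([0.5, 0.3]))  # a damped second-order recursion
```

For example, `kbonacci_weights(6)` returns weights proportional to 1, 1, 2, 3, 5, 8, that is 0.05, 0.05, 0.10, 0.15, 0.25, 0.40, so the most recent predictors dominate. The undamped Fibonacci recursion has spectral radius equal to the golden ratio (about 1.618), which is why raw Fibonacci weights grow without bound and must be normalized; damped coefficients such as [0.5, 0.3] give a spectral radius of about 0.852 and hence a stable recursion.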