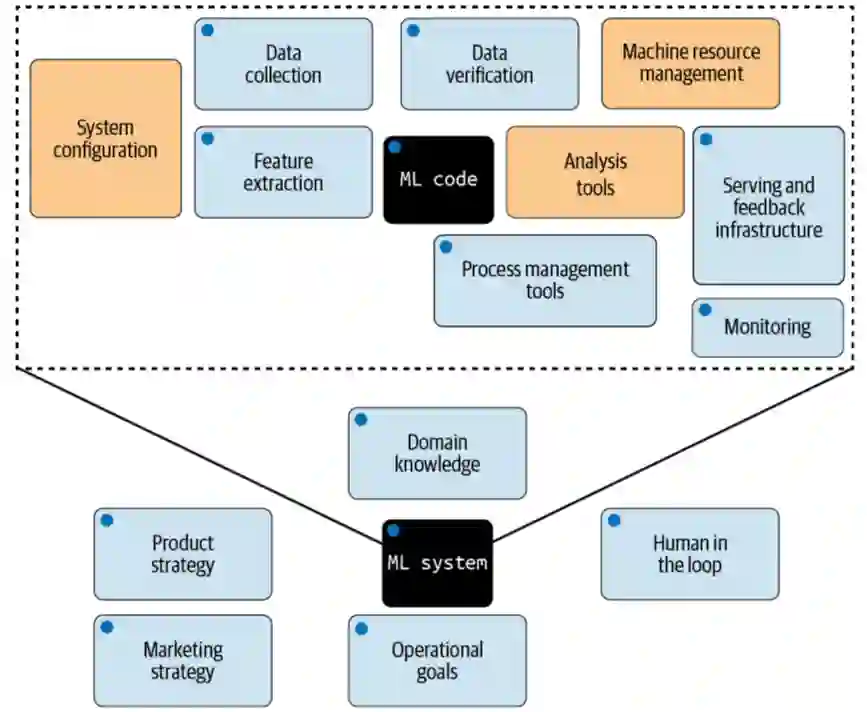

Red teaming has evolved from its origins in military applications into a widely adopted methodology in cybersecurity and AI. In this paper, we take a critical look at the practice of AI red teaming. We argue that, despite its current popularity in AI governance, there is a significant gap between red teaming's original intent as a critical thinking exercise and its narrow focus, in the context of generative AI, on discovering model-level flaws. Current AI red teaming efforts focus predominantly on individual model vulnerabilities while overlooking the broader sociotechnical systems and the emergent behaviors that arise from complex interactions among models, users, and environments. To address this deficiency, we propose a comprehensive framework for operationalizing red teaming in AI systems at two levels: macro-level system red teaming spanning the entire AI development lifecycle, and micro-level model red teaming. Drawing on cybersecurity experience and systems theory, we further propose six recommendations, which emphasize that effective AI red teaming requires multifunctional teams that examine emergent risks, systemic vulnerabilities, and the interplay between technical and social factors.

翻译:红队测试已从军事应用起源演变为网络安全和人工智能领域广泛采用的方法论。本文对AI红队测试实践进行了批判性审视。我们认为,尽管当前AI治理中红队测试备受推崇,但其作为批判性思维训练的最初意图与生成式AI背景下聚焦模型层面缺陷的狭隘定位存在显著差距。当前AI红队测试主要关注个体模型漏洞,却忽视了模型、用户与环境复杂交互所产生的更广泛社会技术系统及涌现行为。为弥补这一缺陷,我们提出了在AI系统中实施红队测试的综合框架,涵盖两个层面:贯穿AI全生命周期的宏观系统级红队测试,以及微观模型级红队测试。借鉴网络安全经验与系统理论,我们进一步提出六项建议,强调有效的AI红队测试需要跨职能团队共同审视涌现风险、系统性漏洞以及技术与社会因素的相互作用。