Users of Augmentative and Alternative Communication (AAC) may write letter-by-letter via an interface that uses a character language model. However, most state-of-the-art large pretrained language models predict subword tokens of variable length. We investigate how to use such models in practice to make accurate and efficient character predictions. Our algorithm for producing character predictions from a subword large language model (LLM) is more accurate than using a classification layer, a byte-level LLM, or an n-gram model. We also investigate a domain adaptation procedure that uses a large dataset of sentences we curated by scoring how useful each sentence might be for spoken or written AAC communication. We find that this procedure further improves model performance on simple, conversational text.
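To make the subword-to-character mismatch concrete, the sketch below shows one simple way a next-character distribution can be derived from a next-token distribution. It is not the algorithm described above. It is a minimal illustration under stated assumptions: `token_probs` is a hypothetical mapping from token strings to probabilities, conditioned on text that ends at a token boundary, and `partial` holds the characters typed since that boundary. Real vocabularies also encode leading spaces inside tokens, and tokens that exactly match `partial` would need another model step. The sketch ignores both details.

```python
from collections import defaultdict


def next_char_distribution(token_probs: dict[str, float], partial: str = "") -> dict[str, float]:
    """Approximate P(next character) from a next-subword-token distribution.

    Simplified sketch, not the paper's method.
    token_probs: hypothetical token -> probability map, conditioned on the
        text up to the last token boundary.
    partial: characters typed after that boundary.
    """
    scores: dict[str, float] = defaultdict(float)
    for token, prob in token_probs.items():
        # Keep only tokens that agree with the typed characters and extend
        # them by at least one more character. The character right after
        # `partial` is that token's vote for the next character.
        # (Tokens equal to `partial` are skipped; they would need a further step.)
        if token.startswith(partial) and len(token) > len(partial):
            scores[token[len(partial)]] += prob
    total = sum(scores.values())
    if total == 0.0:
        return {}
    # Renormalize, i.e. condition on the characters already typed.
    return {ch: p / total for ch, p in scores.items()}


# Toy next-token distribution with invented numbers, for illustration only.
toy = {"the": 0.4, "they": 0.2, "to": 0.25, "a": 0.15}
print(next_char_distribution(toy, partial="t"))
```

With `partial="t"`, only `the`, `they`, and `to` survive, so most of the probability mass goes to `h` and the remainder to `o`. Summing over every consistent token, rather than taking only the single best token, keeps the character distribution from being dominated by one arbitrary tokenization.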

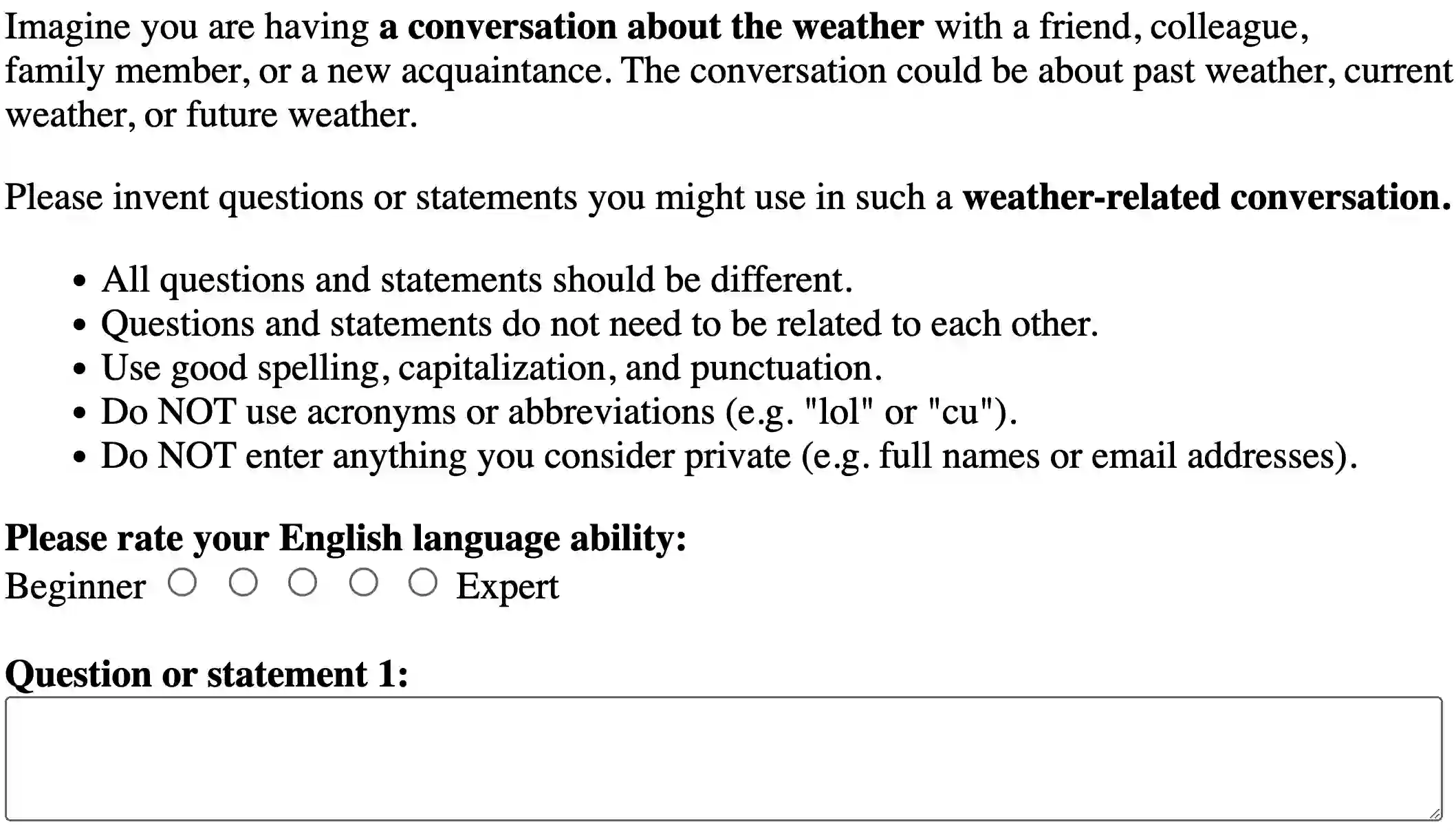
