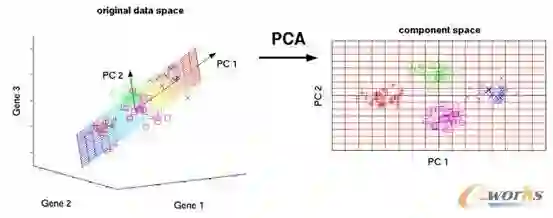

We propose a new method for statistical inference in generalized linear models. In the overparameterized regime, Principal Component Regression (PCR) reduces variance by projecting high-dimensional data onto a low-dimensional principal subspace before fitting. However, PCR incurs truncation bias whenever the true regression vector has mass outside the retained principal components (PCs). To mitigate this bias, we propose Calibrated Principal Component Regression (CPCR), which first learns a low-variance prior in the PC subspace and then calibrates the model in the original feature space via a centered Tikhonov step. CPCR leverages cross-fitting and controls the truncation bias by softening PCR's hard cutoff. Theoretically, we derive the out-of-sample risk in the random matrix regime and show that CPCR outperforms standard PCR when the regression signal has non-negligible components in low-variance directions. Empirically, CPCR consistently improves prediction across multiple overparameterized problems. The results highlight CPCR's stability and flexibility in modern overparameterized settings.
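To make the two-stage idea concrete, the following is a minimal sketch for the linear, squared-loss case only. It is not the authors' implementation: the abstract does not give the exact estimator, so the function names (`pcr_fit`, `centered_ridge`, `cpcr_sketch`), the two-fold split, the fold averaging, and the parameters `k` and `lam` are all assumptions made for illustration. The "centered Tikhonov step" is read here as ridge regression whose penalty shrinks toward the PCR estimate rather than toward zero, and the columns of `X` are assumed to be pre-centered with no intercept.

```python
import numpy as np

def pcr_fit(X, y, k):
    """PCR: regress y on the top-k principal directions of X (assumed centered)."""
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    V_k = Vt[:k].T                                   # (p, k) top-k right singular vectors
    gamma, *_ = np.linalg.lstsq(X @ V_k, y, rcond=None)
    return V_k @ gamma                               # coefficients mapped back to feature space

def centered_ridge(X, y, beta0, lam):
    """Solve min_b ||y - X b||^2 + lam * ||b - beta0||^2 (assumed form of the calibration).

    Uses the dual (n x n) system, which is cheaper when p > n.
    """
    n = X.shape[0]
    resid = y - X @ beta0
    alpha = np.linalg.solve(X @ X.T + lam * np.eye(n), resid)
    return beta0 + X.T @ alpha

def cpcr_sketch(X, y, k, lam, seed=0):
    """Two-fold cross-fitting: learn the PCR prior on one fold, calibrate on the other."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(X.shape[0])
    a, b = idx[: len(idx) // 2], idx[len(idx) // 2 :]
    beta_ab = centered_ridge(X[b], y[b], pcr_fit(X[a], y[a], k), lam)
    beta_ba = centered_ridge(X[a], y[a], pcr_fit(X[b], y[b], k), lam)
    return 0.5 * (beta_ab + beta_ba)                 # averaging the folds is an assumption
```

Under this reading, a very large `lam` returns the PCR estimate unchanged (the hard cutoff), while a small `lam` lets the fit move freely in directions outside the retained PCs, which is one way to interpret "softening PCR's hard cutoff".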
