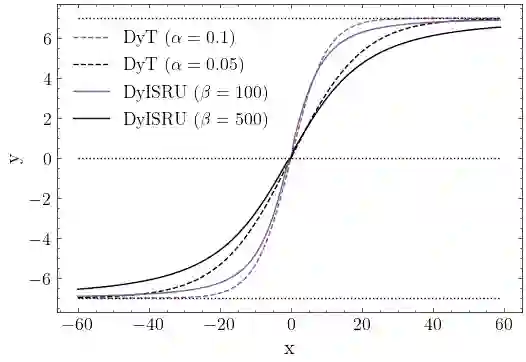

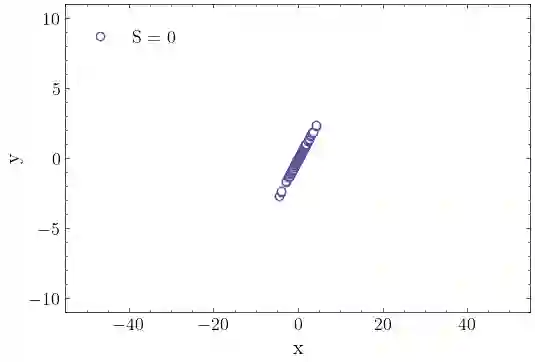

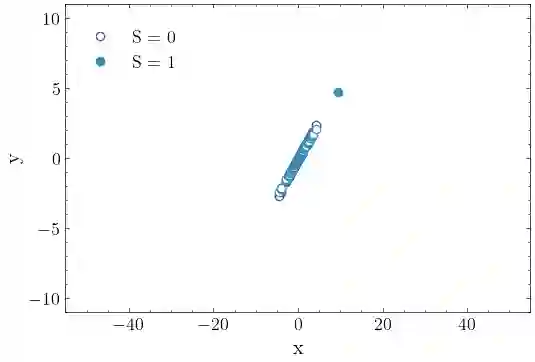

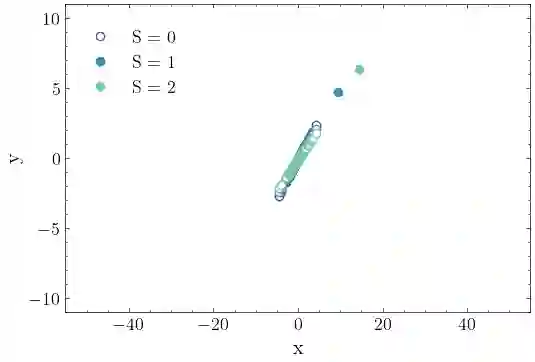

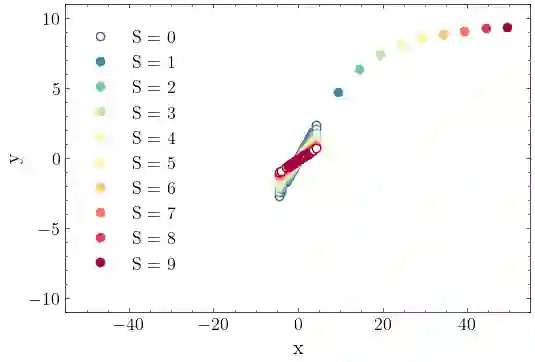

A recent paper proposes Dynamic Tanh (DyT) as a drop-in replacement for layer normalization (LN). Although the method is empirically well-motivated and appealing from a practical point of view, it lacks a theoretical foundation. In this work, we shed light on the mathematical relationship between layer normalization and dynamic activation functions. In particular, we derive DyT from LN and show that a well-defined approximation is needed to do so. By dropping said approximation, an alternative activation function is obtained, which we call Dynamic Inverse Square Root Unit (DyISRU). DyISRU is the exact counterpart of layer normalization, and we demonstrate numerically that it indeed resembles LN more accurately than DyT does.
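
To make the claimed relationship concrete, the following minimal sketch checks an elementary identity: with mean-centered inputs z_i = x_i - mean(x), each layer-normalized output equals sqrt(C) * z_i / sqrt(z_i^2 + S_i), where S_i is the sum of squared deviations of the other elements. This has an inverse-square-root shape in z_i. The function names, the omission of learnable scale and shift, and the tanh variant (matched to the same slope at zero and the same saturation value) are assumptions made for illustration; they are not the paper's parametrization of DyT or DyISRU.

```python
import math

def layer_norm(x):
    """Plain layer normalization, without learnable scale/shift or epsilon."""
    c = len(x)
    mean = sum(x) / c
    var = sum((v - mean) ** 2 for v in x) / c
    return [(v - mean) / math.sqrt(var) for v in x]

def isru_form(x):
    """Same quantity written as sqrt(C) * z_i / sqrt(z_i^2 + S_i)."""
    c = len(x)
    mean = sum(x) / c
    z = [v - mean for v in x]
    total = sum(v * v for v in z)
    out = []
    for zi in z:
        s_i = total - zi * zi  # squared deviations of the other elements
        out.append(math.sqrt(c) * zi / math.sqrt(zi * zi + s_i))
    return out

def tanh_form(x):
    """Illustrative tanh stand-in (an assumption, not the paper's DyT):
    same slope at z_i = 0 and same saturation value sqrt(C) as isru_form."""
    c = len(x)
    mean = sum(x) / c
    z = [v - mean for v in x]
    total = sum(v * v for v in z)
    out = []
    for zi in z:
        s_i = total - zi * zi
        out.append(math.sqrt(c) * math.tanh(zi / math.sqrt(s_i)))
    return out

def max_abs_diff(a, b):
    """Largest elementwise absolute difference between two lists."""
    return max(abs(p - q) for p, q in zip(a, b))

x = [0.5, -1.2, 3.0, 0.1, -0.7]  # arbitrary example input
ln = layer_norm(x)
print("ISRU form vs LN:", max_abs_diff(ln, isru_form(x)))
print("tanh form vs LN:", max_abs_diff(ln, tanh_form(x)))
```

On this input the inverse-square-root form agrees with layer normalization up to floating-point rounding, while the tanh stand-in deviates visibly for the largest element. Here S_i is computed from the data, whereas a dynamic activation function would replace such data-dependent quantities with learnable parameters.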