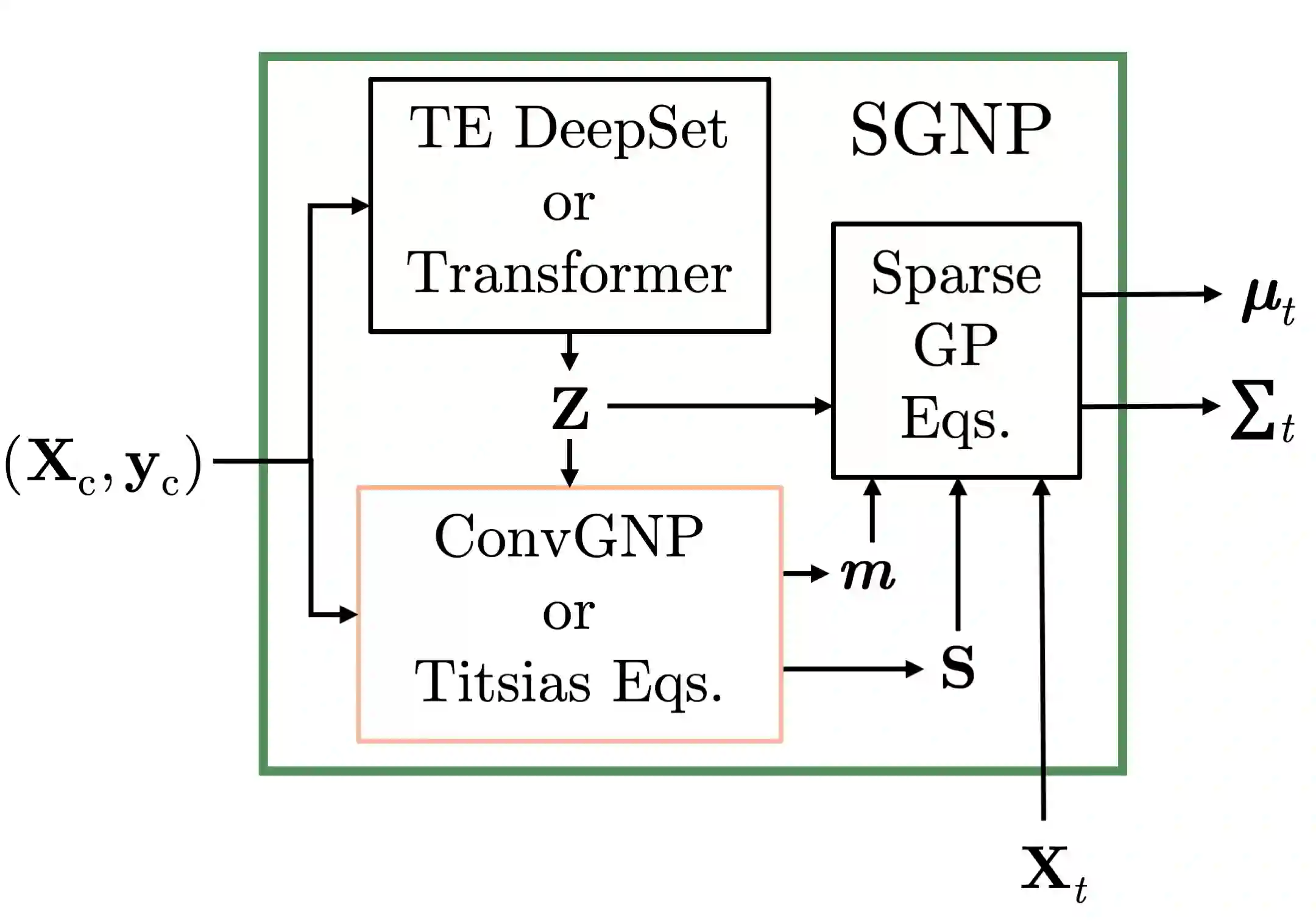

Despite significant recent advances in probabilistic meta-learning, it is common for practitioners to avoid using deep learning models due to a comparative lack of interpretability. Instead, many practitioners simply use non-meta-models such as Gaussian processes with interpretable priors, and conduct the tedious procedure of training their model from scratch for each task they encounter. While this is justifiable for tasks with a limited number of data points, the cubic computational cost of exact Gaussian process inference renders this prohibitive when each task has many observations. To remedy this, we introduce a family of models that meta-learn sparse Gaussian process inference. Not only does this enable rapid prediction on new tasks with sparse Gaussian processes, but since our models have clear interpretations as members of the neural process family, it also allows manual elicitation of priors in a neural process for the first time. In meta-learning regimes for which the number of observed tasks is small or for which expert domain knowledge is available, this offers a crucial advantage.
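To make the cost argument concrete, the following is a minimal NumPy sketch that contrasts exact Gaussian process regression with a generic inducing-point approximation. It is not the meta-learned model introduced here: the function names, the RBF kernel, the hyperparameter defaults, and the choice of the DTC (deterministic training conditional) predictive mean are all assumptions made for illustration. The exact predictor solves an n × n linear system, which costs O(n³), while the sparse predictor only solves an m × m system built from m inducing inputs, which costs O(nm²).

```python
import numpy as np


def rbf_kernel(a, b, lengthscale=1.0, variance=1.0):
    """Squared-exponential kernel between 1-D inputs a (n,) and b (m,)."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / lengthscale**2)


def exact_gp_mean(x_train, y_train, x_test, noise_var=0.01):
    """Exact GP posterior mean; the n x n solve costs O(n^3)."""
    k_ff = rbf_kernel(x_train, x_train) + noise_var * np.eye(len(x_train))
    k_sf = rbf_kernel(x_test, x_train)
    return k_sf @ np.linalg.solve(k_ff, y_train)


def sparse_gp_mean(x_train, y_train, x_test, z, noise_var=0.01, jitter=1e-8):
    """DTC-style predictive mean with m inducing inputs z (assumed choice).

    Only an m x m system is solved, so the cost is O(n m^2).
    """
    k_uu = rbf_kernel(z, z) + jitter * np.eye(len(z))
    k_uf = rbf_kernel(z, x_train)
    k_su = rbf_kernel(x_test, z)
    # m x m matrix: noise_var * K_uu + K_uf K_fu
    a = noise_var * k_uu + k_uf @ k_uf.T
    return k_su @ np.linalg.solve(a, k_uf @ y_train)
```

When the inducing inputs coincide with the training inputs, the DTC mean reduces algebraically to the exact posterior mean; with far fewer inducing inputs than observations it trades some accuracy for the lower cost. In this sketch the inducing inputs `z` are supplied by hand for a single task, with no amortization across tasks.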
