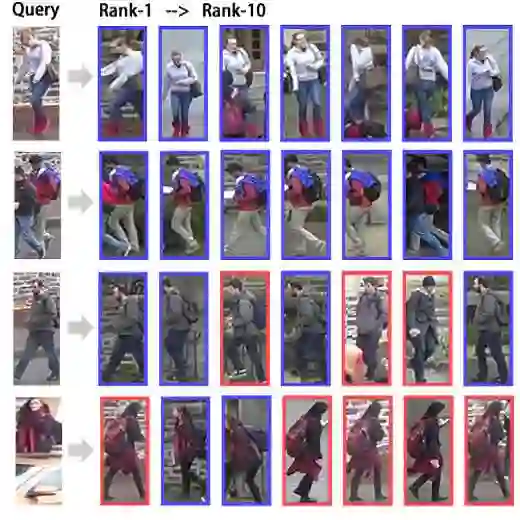

Video-based Person Re-IDentification (VPReID) aims to retrieve the same person from videos captured by non-overlapping cameras. At extreme far distances, VPReID is highly challenging due to severe resolution degradation, drastic viewpoint variation, and inevitable appearance noise. To address these issues, we propose a Scale-Adaptive framework with Shape Priors for VPReID, named SAS-VPReID. The framework is built upon three complementary modules. First, we deploy a Memory-Enhanced Visual Backbone (MEVB), which leverages the CLIP vision encoder and multi-proxy memory to extract discriminative feature representations. Second, we propose a Multi-Granularity Temporal Modeling (MGTM) module that constructs sequences at multiple temporal granularities and adaptively emphasizes motion cues across scales. Third, we incorporate a Prior-Regularized Shape Dynamics (PRSD) module to capture body structure dynamics. Together, these modules allow our framework to obtain more discriminative feature representations. Experiments on the VReID-XFD benchmark demonstrate the effectiveness of each module, and our final framework ranks first on the VReID-XFD challenge leaderboard. The source code is available at https://github.com/YangQiWei3/SAS-VPReID.
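To make the idea of multiple temporal granularities concrete, the following is a minimal sketch and not the released MGTM implementation. It assumes that granularities are obtained by subsampling per-frame features at fixed strides, that each sequence is mean-pooled, and that the "adaptive emphasis" is a softmax over a simple motion score (mean absolute frame-to-frame difference). The function names, the strides, and the motion score are all hypothetical choices made for illustration.

```python
import math


def subsample(frames, stride):
    """Keep every `stride`-th frame feature (one temporal granularity)."""
    return frames[::stride]


def mean_pool(frames):
    """Average per-frame feature vectors into one sequence-level vector."""
    dim = len(frames[0])
    return [sum(f[d] for f in frames) / len(frames) for d in range(dim)]


def motion_score(frames):
    """Assumed motion cue: mean absolute difference between consecutive frames."""
    if len(frames) < 2:
        return 0.0
    total = 0.0
    for prev, cur in zip(frames, frames[1:]):
        total += sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
    return total / (len(frames) - 1)


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def fuse_multi_granularity(frames, strides=(1, 2, 4)):
    """Build one sequence per stride, pool each, and fuse with motion-based weights."""
    sequences = [subsample(frames, s) for s in strides]
    pooled = [mean_pool(seq) for seq in sequences]
    weights = softmax([motion_score(seq) for seq in sequences])
    dim = len(pooled[0])
    fused = [sum(w * p[d] for w, p in zip(weights, pooled)) for d in range(dim)]
    return fused, weights


# Toy input: 8 frames with 2-dimensional features (hypothetical values).
frames = [[float(t), 0.0] for t in range(8)]
fused, weights = fuse_multi_granularity(frames)
```

In this toy input the coarser strides see larger frame-to-frame changes, so they receive larger weights. A learned model would typically replace the hand-written motion score with trainable attention.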
