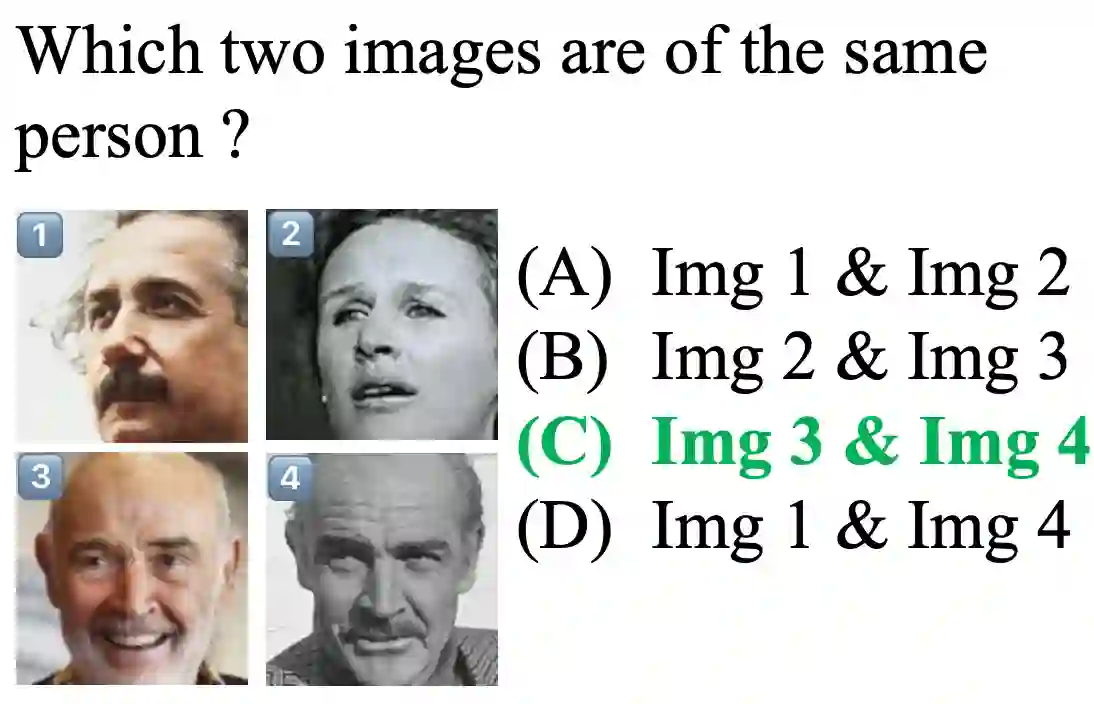

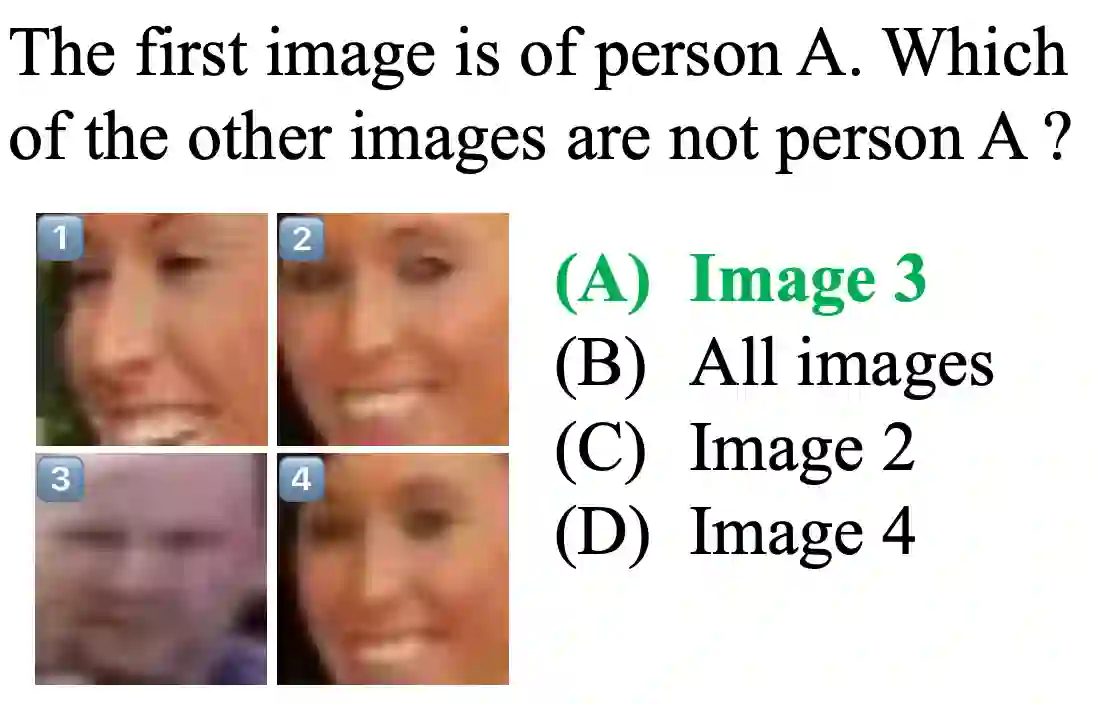

Multimodal large language models (MLLMs) have achieved remarkable performance across diverse vision-and-language tasks. However, their potential in face recognition remains underexplored. In particular, the performance of open-source MLLMs needs to be evaluated and compared with that of existing face recognition models on standard benchmarks under a similar protocol. In this work, we present a systematic benchmark of state-of-the-art MLLMs for face recognition on several face recognition datasets, including LFW, CALFW, CPLFW, CFP, AgeDB, and RFW. Experimental results reveal that while MLLMs capture rich semantic cues useful for face-related tasks, in zero-shot settings they lag behind specialized models in high-precision recognition scenarios. This benchmark provides a foundation for advancing MLLM-based face recognition, offering insights for the design of next-generation models with higher accuracy and better generalization. The source code of our benchmark is publicly available on the project page.
