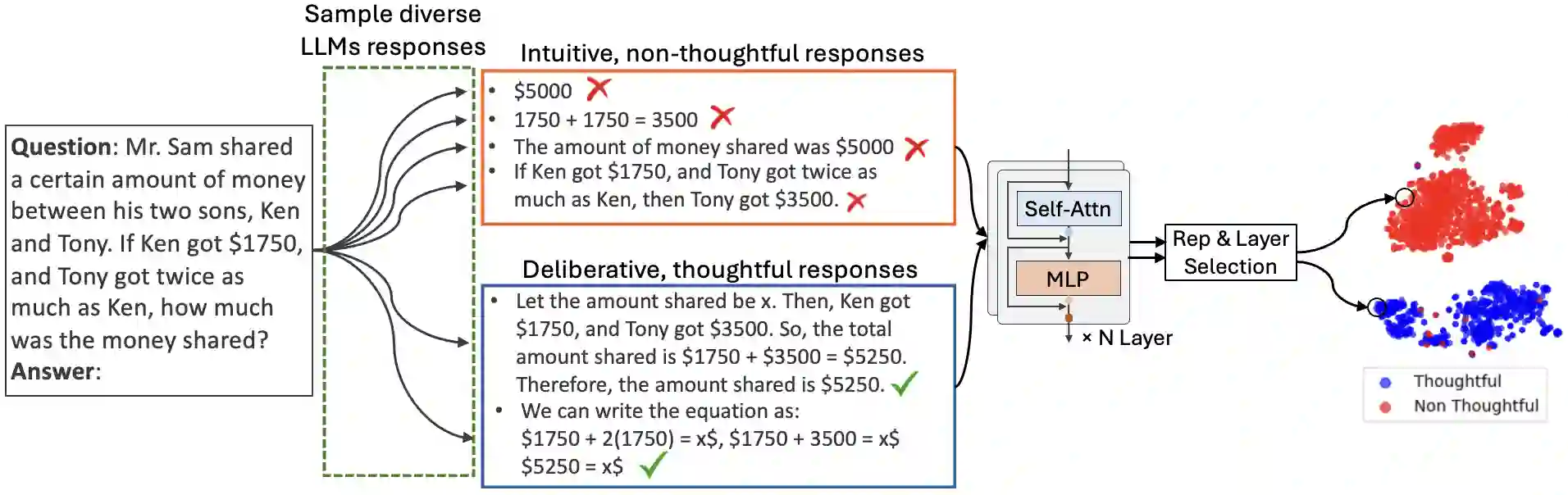

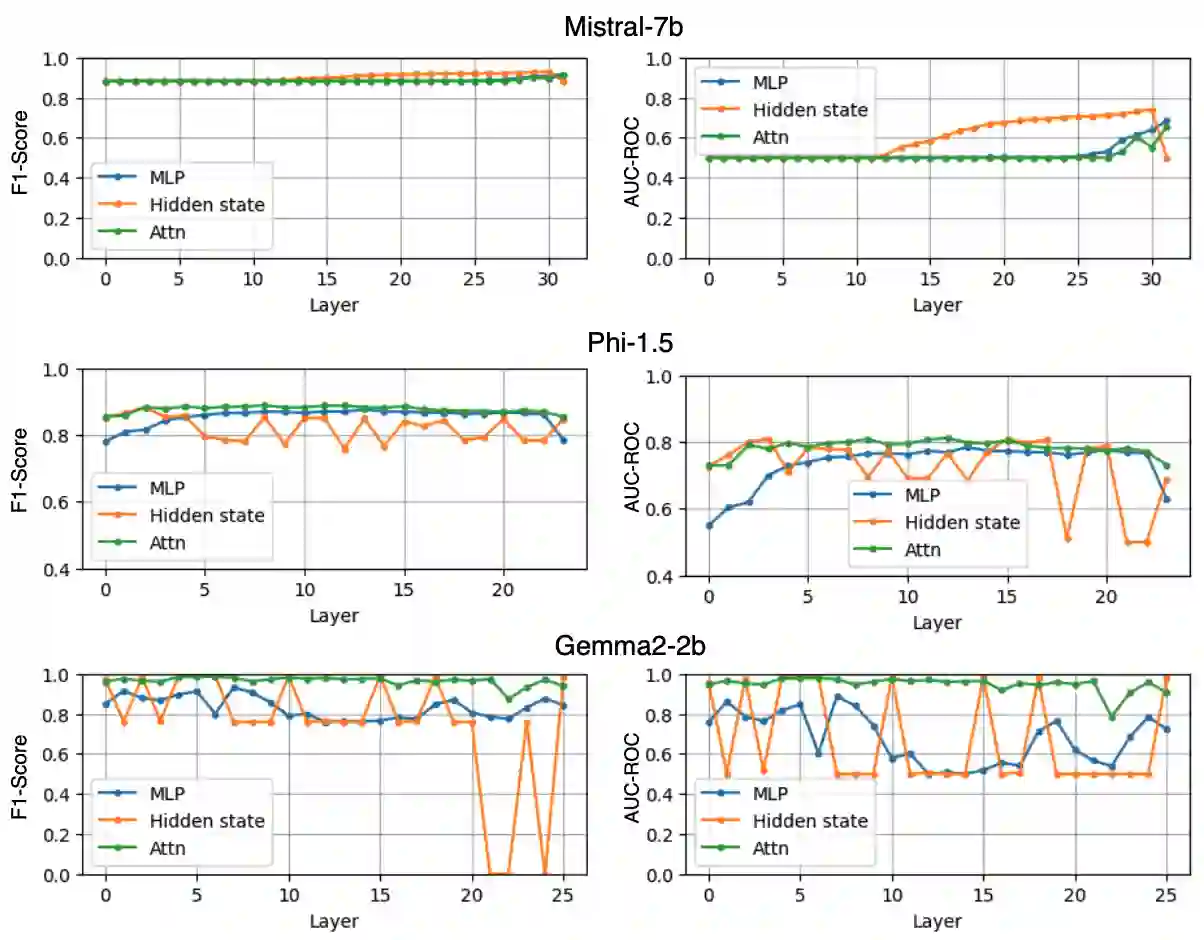

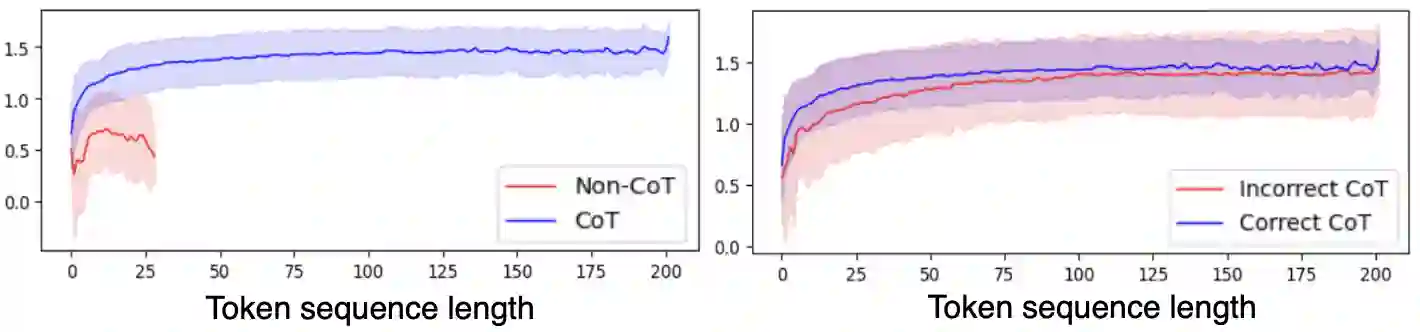

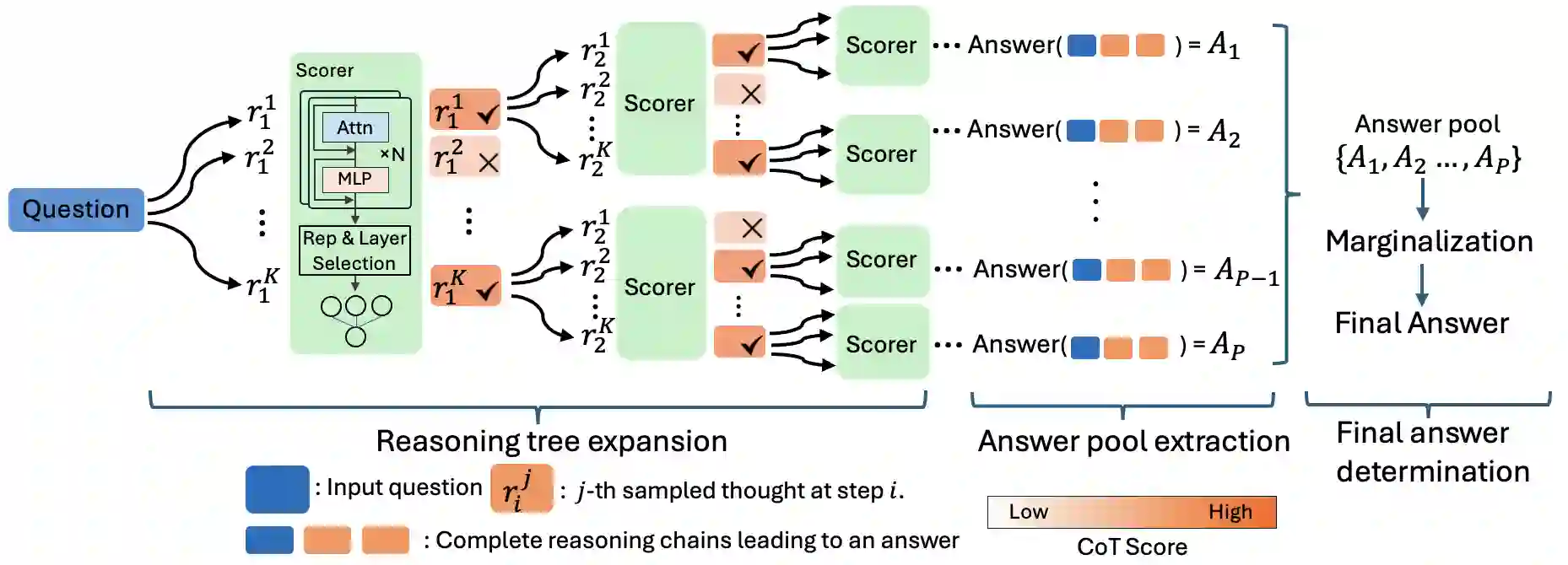

This paper introduces ThoughtProbe, a novel inference-time framework that leverages the hidden reasoning features of Large Language Models (LLMs) to improve their reasoning performance. Unlike previous work that manipulates hidden representations to steer LLM generation, we use them as discriminative signals to guide exploration of a tree-structured response space. At each node expansion, a classifier scores and ranks the candidate continuations, allocating computational resources efficiently by prioritizing higher-scoring candidates. Once the tree expansion is complete, we collect the answers from all branches to form a candidate answer pool. We then propose a branch aggregation method that, for each candidate answer, marginalizes over all branches supporting it by aggregating their chain-of-thought (CoT) scores, thereby identifying the optimal answer in the pool. Experimental results show that our framework's comprehensive exploration not only covers valid reasoning chains but also identifies them effectively, achieving significant improvements across multiple arithmetic reasoning benchmarks.
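
The two stages described in the abstract, classifier-guided tree expansion followed by branch aggregation, can be illustrated with a minimal Python sketch. It is not the paper's implementation: every name here (`Branch`, `expand_tree`, `aggregate`, and the `toy_*` helpers) is hypothetical. The classifier is replaced by a plain callable that scores a partial chain, whereas the framework scores hidden representations. Taking a branch's CoT score to be the sum of its per-step scores is also an assumption, since the abstract does not specify how the score is computed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple

Steps = Tuple[str, ...]


@dataclass(frozen=True)
class Branch:
    steps: Steps   # reasoning steps generated so far
    score: float   # sum of per-step scores (an assumption of this sketch)


def expand_tree(
    propose: Callable[[Steps], Sequence[str]],
    score_step: Callable[[Steps], float],
    depth: int,
    top_k: int,
) -> List[Branch]:
    """Grow the tree level by level, continuing only the top_k children of each node."""
    frontier = [Branch(steps=(), score=0.0)]
    for _ in range(depth):
        next_frontier: List[Branch] = []
        for node in frontier:
            children = []
            for step in propose(node.steps):
                steps = node.steps + (step,)
                children.append(Branch(steps, node.score + score_step(steps)))
            # Rank this node's candidates and keep the highest-scoring ones.
            children.sort(key=lambda b: b.score, reverse=True)
            next_frontier.extend(children[:top_k])
        frontier = next_frontier
    return frontier


def aggregate(
    branches: Sequence[Branch],
    extract_answer: Callable[[Steps], str],
) -> Tuple[str, Dict[str, float]]:
    """Sum branch scores per final answer and return the answer with the largest total."""
    totals: Dict[str, float] = defaultdict(float)
    for branch in branches:
        totals[extract_answer(branch.steps)] += branch.score
    best = max(totals, key=lambda answer: totals[answer])
    return best, dict(totals)


# Toy stand-ins so the sketch runs without a model (all hypothetical).
STEP_SCORES = {"good": 0.9, "ok": 0.6, "bad": 0.1}


def toy_propose(steps: Steps) -> Sequence[str]:
    return ["good", "ok", "bad"]


def toy_score(steps: Steps) -> float:
    return STEP_SCORES[steps[-1]]


def toy_answer(steps: Steps) -> str:
    return "42" if "good" in steps else "41"


branches = expand_tree(toy_propose, toy_score, depth=2, top_k=2)
best, totals = aggregate(branches, toy_answer)
print(best, totals)
```

Summing scores per answer means that an answer reached by several moderately scored branches can outrank an answer supported by only one high-scoring branch, which is the effect that marginalizing over supporting branches is meant to capture.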
