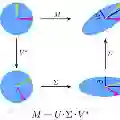

Vision language models (VLMs) excel at visual reasoning but often incur high computational costs. One key reason is the redundancy of visual tokens. Although recent token reduction methods claim minimal performance loss, our extensive experiments reveal that token reduction can substantially alter a model's output distribution, changing prediction patterns in ways that standard metrics such as accuracy loss do not fully capture. Such inconsistencies are especially concerning for practical applications where system stability is critical. To investigate this phenomenon, we analyze how token reduction influences the energy distribution of a VLM's internal representations, using a low-rank approximation obtained via Singular Value Decomposition (SVD). Our results show that changes in the Inverse Participation Ratio of the singular value spectrum are strongly correlated with the model's consistency after token reduction. Based on these insights, we propose LoFi, a training-free visual token reduction method that uses SVD leverage scores for token pruning. Experimental evaluations demonstrate that LoFi not only reduces computational costs with minimal performance degradation but also significantly outperforms state-of-the-art methods in output consistency.
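The two SVD quantities named in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the abstract does not give formulas, so the sketch assumes the common definitions, namely an Inverse Participation Ratio computed over the normalized energy spectrum (squared singular values) and leverage scores taken as squared row norms of the top-`rank` left singular vectors. The function names, the `rank` and `keep` parameters, and the random token matrix are all hypothetical.

```python
import numpy as np


def inverse_participation_ratio(X):
    """IPR of the singular value spectrum of X (assumed definition).

    With p_i = s_i^2 / sum_j s_j^2, IPR = sum_i p_i^2. It is 1 when all
    energy sits in one singular direction and 1/r when energy is spread
    evenly over r directions.
    """
    s = np.linalg.svd(X, compute_uv=False)
    energy = s ** 2
    p = energy / energy.sum()
    return float(np.sum(p ** 2))


def leverage_scores(X, rank):
    """Rank-`rank` leverage score per row (token) of X (assumed definition).

    The score of row i is the squared norm of row i of U[:, :rank], where
    X = U diag(s) V^T is the thin SVD.
    """
    U, _, _ = np.linalg.svd(X, full_matrices=False)
    return np.sum(U[:, :rank] ** 2, axis=1)


def prune_tokens(X, keep, rank):
    """Keep the `keep` rows with the highest leverage scores, in original order."""
    scores = leverage_scores(X, rank)
    kept = np.sort(np.argsort(scores)[-keep:])
    return X[kept], kept


# Hypothetical example: 576 visual tokens with 64-dimensional features.
rng = np.random.default_rng(0)
tokens = rng.standard_normal((576, 64))
pruned, kept = prune_tokens(tokens, keep=144, rank=16)

ipr_before = inverse_participation_ratio(tokens)
ipr_after = inverse_participation_ratio(pruned)
```

Comparing `ipr_before` and `ipr_after` gives one way to see how pruning shifts the energy distribution. Which layer's representations to decompose, and how to choose `rank`, are left open here because the abstract does not specify them.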
