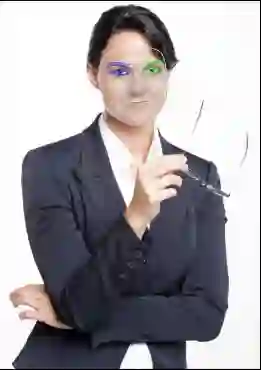

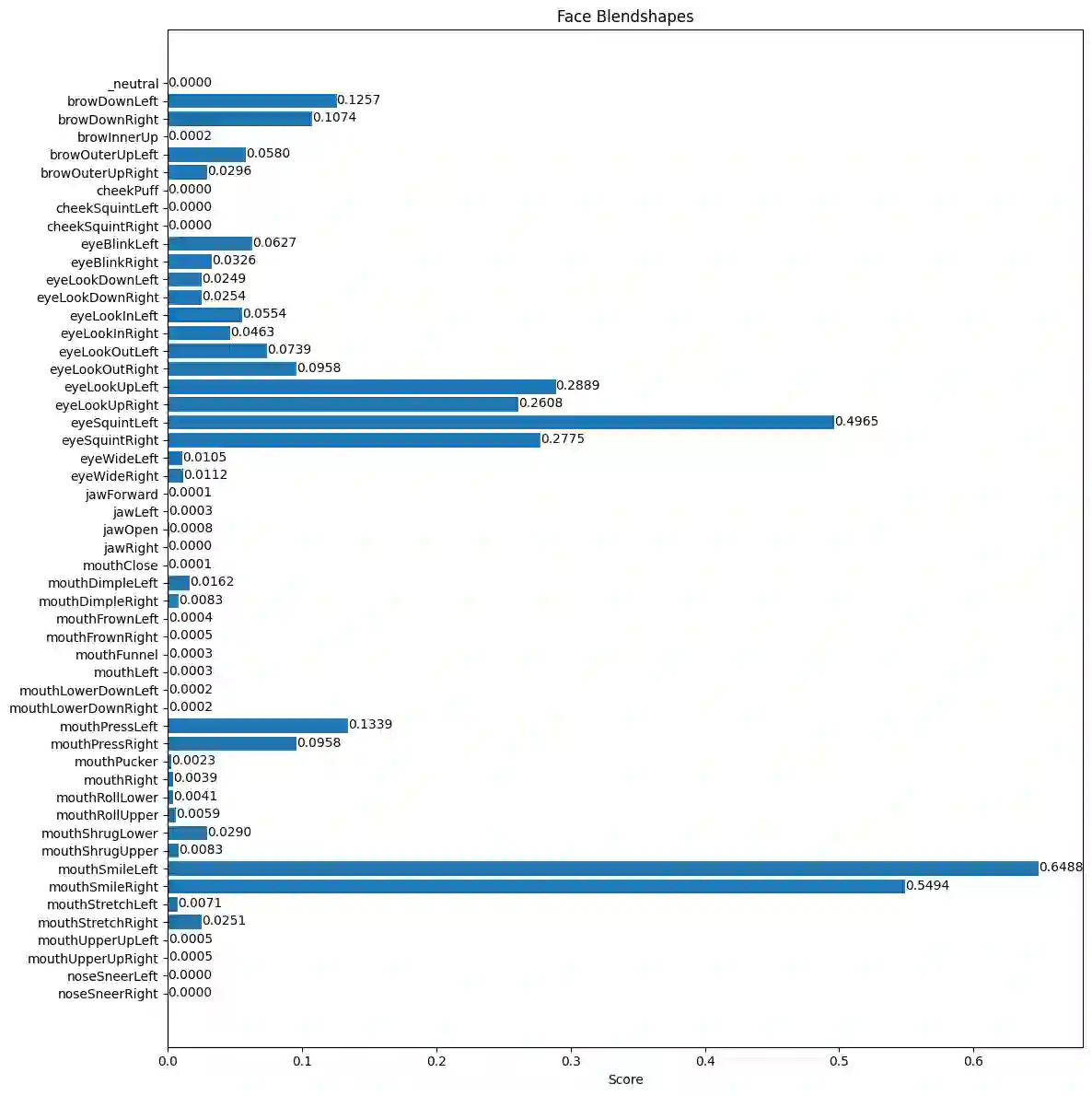

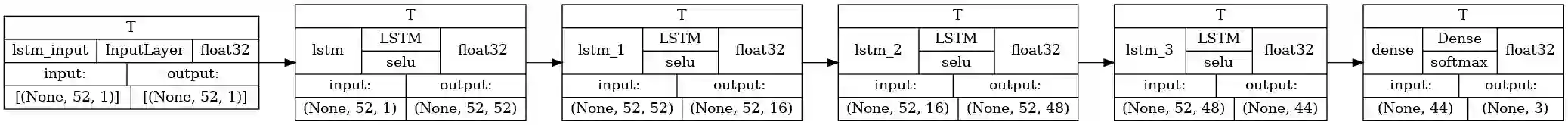

Emotion estimation has been studied for a long time, and several machine-learning approaches exist. In this paper, we present an LSTM model that processes the blendshapes produced by the MediaPipe library for a face detected in a live camera stream and estimates the dominant emotion from the facial expression. The model is trained on the FER2013 dataset and achieves 71% accuracy and a 62% F1-score, which meets the accuracy benchmark for FER2013 at a significantly reduced computation cost. Repository: https://github.com/Samir-atra/Emotion_estimation_from_video_footage_with_LSTM_ML_algorithm
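The abstract reports two different figures, accuracy and F1-score, and the two can diverge noticeably on a multi-class problem. The following is a minimal sketch of how both could be computed from predicted and true labels. It assumes macro averaging for F1 (the text does not state which averaging was used), and the helper names `accuracy` and `macro_f1` as well as the toy labels are hypothetical, chosen only for illustration; they are not taken from the linked repository and are not real model outputs.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred, labels):
    """Unweighted mean of per-class F1 scores.

    Macro averaging is an assumption here; the source does not say
    which F1 variant it reports.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1          # correct prediction for class t
        else:
            fp[p] += 1          # p was predicted but is wrong
            fn[t] += 1          # t was the truth but was missed
    scores = []
    for label in labels:
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the class never occurs
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy, made-up labels: the classifier is right on the frequent class
# and misses every sample of the rare class.
labels = ["happy", "disgust"]
y_true = ["happy"] * 8 + ["disgust"] * 2
y_pred = ["happy"] * 10

print(f"accuracy = {accuracy(y_true, y_pred):.3f}")
print(f"macro F1 = {macro_f1(y_true, y_pred, labels):.3f}")
```

In this toy case the accuracy is high because the frequent class dominates, while the macro F1 is pulled down by the rare class that is never predicted. A gap of this kind is one plausible reading of a 71% accuracy next to a 62% F1-score, though the abstract itself does not give the cause.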
