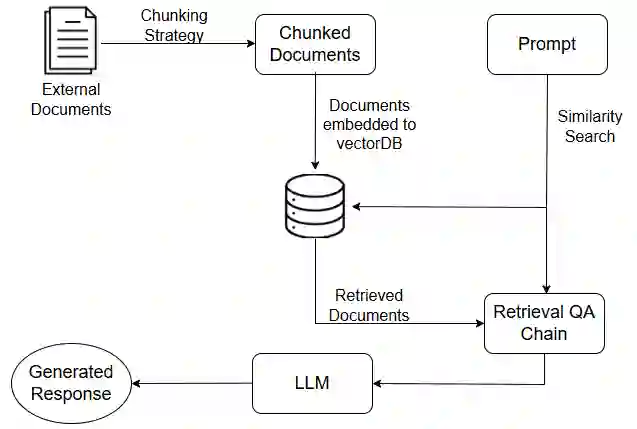

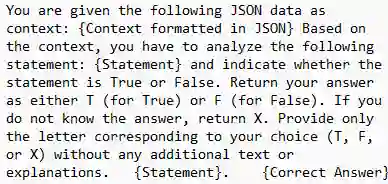

Security applications increasingly rely on large language models (LLMs) for cyber threat detection; however, their opaque reasoning often limits trust, particularly in decisions that require domain-specific cybersecurity knowledge. Because security threats evolve rapidly, LLMs must not only recall historical incidents but also adapt to emerging vulnerabilities and attack patterns. Retrieval-Augmented Generation (RAG) has proven effective in general LLM applications, but its potential for cybersecurity remains underexplored. In this work, we introduce a RAG-based framework designed to contextualize cybersecurity data and improve LLM accuracy in knowledge retention and temporal reasoning. Using external datasets and the Llama-3-8B-Instruct model, we evaluate a baseline RAG pipeline and an optimized hybrid retrieval approach, and compare them across multiple performance metrics. Our findings highlight the promise of hybrid retrieval for strengthening the adaptability and reliability of LLMs in cybersecurity tasks.
