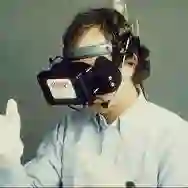

Virtual Reality (VR) games require players to translate high-level semantic actions into precise device manipulations using controllers and head-mounted displays (HMDs). Humans perform this translation intuitively, drawing on common sense and embodied understanding, but whether Large Language Models (LLMs) can replicate this ability remains underexplored. This paper introduces ComboBench, a benchmark that evaluates LLMs' ability to translate semantic actions into VR device manipulation sequences across 262 scenarios from four popular VR games: Half-Life: Alyx, Into the Radius, Moss: Book II, and Vivecraft. We evaluate seven LLMs (GPT-3.5, GPT-4, GPT-4o, Gemini-1.5-Pro, LLaMA-3-8B, Mixtral-8x7B, and GLM-4-Flash) against annotated ground truth and human performance. Our results show that top-performing models such as Gemini-1.5-Pro demonstrate strong task decomposition capabilities but still lag behind humans in procedural reasoning and spatial understanding. Performance varies significantly across games, suggesting sensitivity to interaction complexity. Few-shot examples substantially improve performance, indicating potential for targeted enhancement of LLMs' VR manipulation capabilities. We release all materials at https://sites.google.com/view/combobench.
