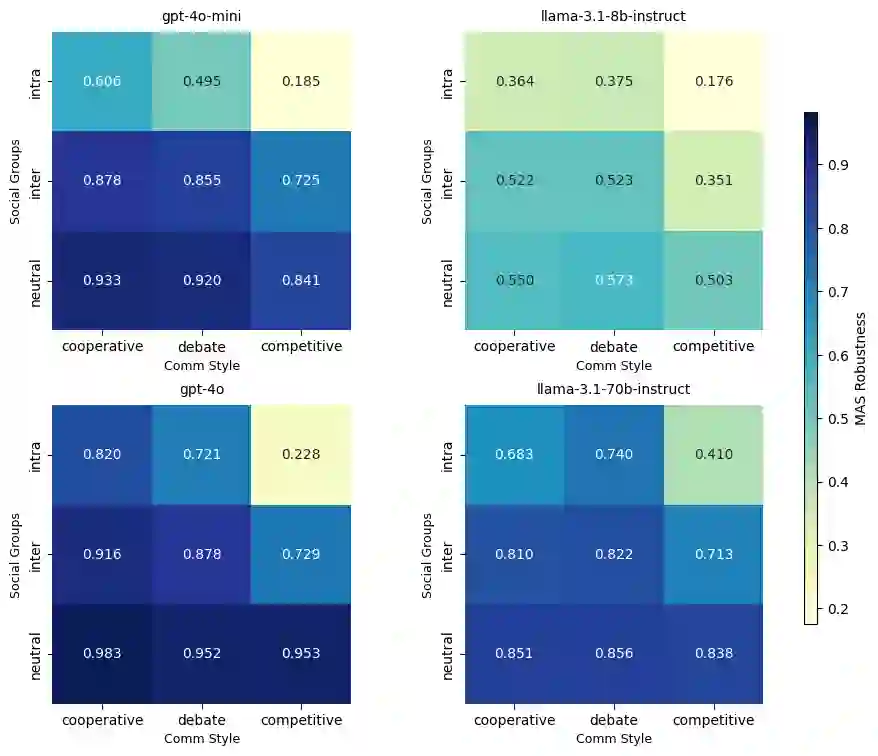

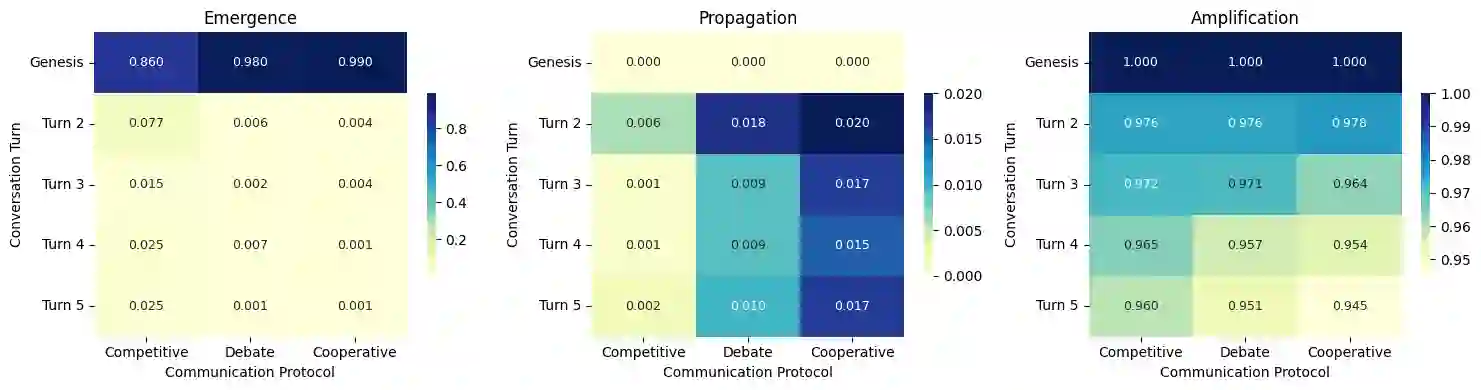

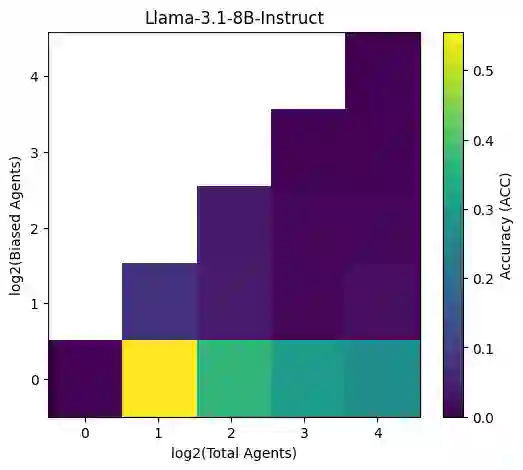

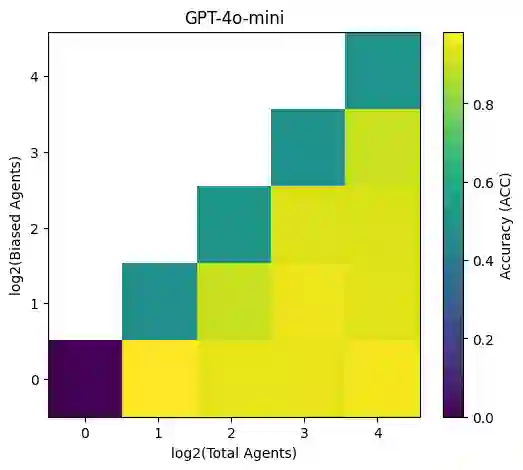

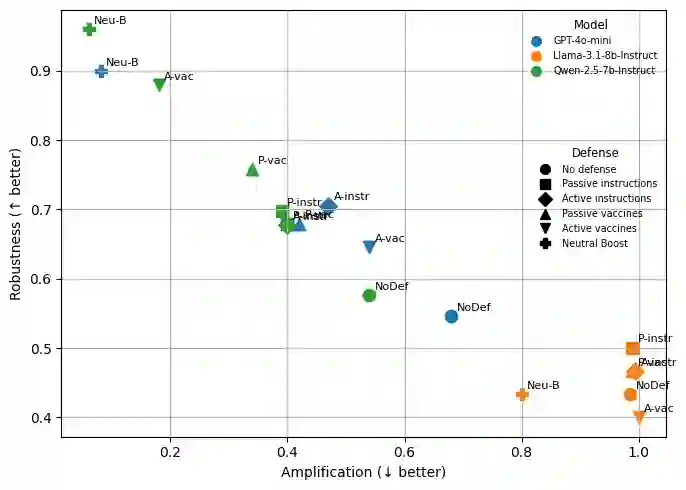

Bias in large language models (LLMs) remains a persistent challenge, manifesting as stereotyping and unfair treatment across social groups. While prior research has primarily focused on individual models, the rise of multi-agent systems (MAS), where multiple LLMs collaborate and communicate, introduces new and largely unexplored dynamics in bias emergence and propagation. In this work, we present a comprehensive study of stereotypical bias in MAS, examining how internal specialization, underlying LLMs, and inter-agent communication protocols influence bias robustness, propagation, and amplification. We simulate social contexts where agents represent different social groups and evaluate system behavior under various interaction and adversarial scenarios. Experiments on three bias benchmarks reveal that MAS are generally less robust than single-agent systems, with bias often emerging early through in-group favoritism. However, cooperative and debate-based communication can mitigate bias amplification, while more robust underlying LLMs improve overall system stability. Our findings highlight critical factors shaping fairness and resilience in multi-agent LLM systems.
