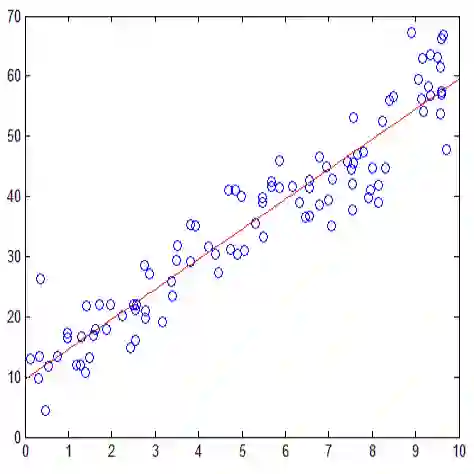

Bayesian model selection commonly relies on the Laplace approximation or the Bayesian Information Criterion (BIC), both of which assume that the effective model dimension equals the number of parameters. Singular learning theory replaces this assumption with the real log canonical threshold (RLCT), an effective dimension that can be strictly smaller in overparameterized or rank-deficient models. We study linear-Gaussian rank models and linear subspace (dictionary) models in which the exact marginal likelihood is available in closed form and the RLCT is analytically tractable. In this setting, we show theoretically and empirically that the error of Laplace/BIC grows linearly with $(d/2 - \lambda)\log n$, where $d$ is the ambient parameter dimension, $\lambda$ is the RLCT, and $n$ is the sample size. An RLCT-aware correction recovers the correct evidence slope and is invariant to overcomplete reparameterizations that represent the same data subspace. Our results provide a concrete finite-sample characterization of Laplace failure in singular models and demonstrate that evidence slopes can be used as a practical estimator of effective dimension in simple linear settings.
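The growth of the Laplace/BIC error can be illustrated with a small numerical sketch. This is not the paper's experimental setup; it is a toy instance built on stated assumptions: a linear regression whose `d` features span only an `r`-dimensional subspace (an overcomplete dictionary), a unit Gaussian prior on the weights, unit noise variance, and an RLCT of $\lambda = r/2$ for this toy model. All function names (`log_evidence_exact`, `log_evidence_bic`, `gap_slope`) are hypothetical. The exact log evidence follows from $y \sim \mathcal{N}(0, I_n + XX^\top)$, and the script measures how the gap between it and the BIC approximation scales with $\log n$.

```python
import numpy as np


def log_evidence_exact(X, y):
    """Exact log marginal likelihood for y = X w + eps,
    assuming w ~ N(0, I_d) and eps ~ N(0, I_n) (illustrative choices)."""
    n, d = X.shape
    M = np.eye(d) + X.T @ X                # d x d, invertible even if X is rank-deficient
    _, logdet = np.linalg.slogdet(M)       # equals log det(I_n + X X^T)
    Xty = X.T @ y
    # y^T (I_n + X X^T)^{-1} y, computed via the Woodbury identity
    quad = y @ y - Xty @ np.linalg.solve(M, Xty)
    return -0.5 * (n * np.log(2 * np.pi) + logdet + quad)


def log_evidence_bic(X, y):
    """BIC-style approximation: max log-likelihood minus (d/2) log n,
    counting all d nominal parameters."""
    n, d = X.shape
    # rcond truncates the numerically zero singular values of the rank-deficient X
    w_hat = np.linalg.lstsq(X, y, rcond=1e-10)[0]
    rss = np.sum((y - X @ w_hat) ** 2)
    loglik = -0.5 * (n * np.log(2 * np.pi) + rss)
    return loglik - 0.5 * d * np.log(n)


def gap_slope(d=6, r=2, n_small=200, n_large=200_000, seed=0):
    """Slope of (exact log evidence - BIC) against log n, from two nested sample sizes."""
    rng = np.random.default_rng(seed)
    A = rng.standard_normal((r, d))        # overcomplete dictionary: d columns, rank r
    Z = rng.standard_normal((n_large, r))  # latent r-dimensional features
    X = Z @ A                              # n x d design matrix of rank r
    w0 = rng.standard_normal(d)
    y = X @ w0 + rng.standard_normal(n_large)
    gaps = [
        log_evidence_exact(X[:n], y[:n]) - log_evidence_bic(X[:n], y[:n])
        for n in (n_small, n_large)
    ]
    return (gaps[1] - gaps[0]) / (np.log(n_large) - np.log(n_small))


if __name__ == "__main__":
    d, r = 6, 2
    print("measured slope of the gap:", gap_slope(d=d, r=r))
    print("d/2 - lambda with lambda = r/2:", d / 2 - r / 2)
```

Under these assumptions the measured slope should come out close to $(d - r)/2$, since the exact log-determinant term grows like $r \log n$ while BIC charges $d \log n$. Increasing `d` at fixed `r` changes only the BIC penalty, which is one way to see why a correction based on $\lambda$ rather than $d$ would be insensitive to how overcomplete the dictionary is.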
