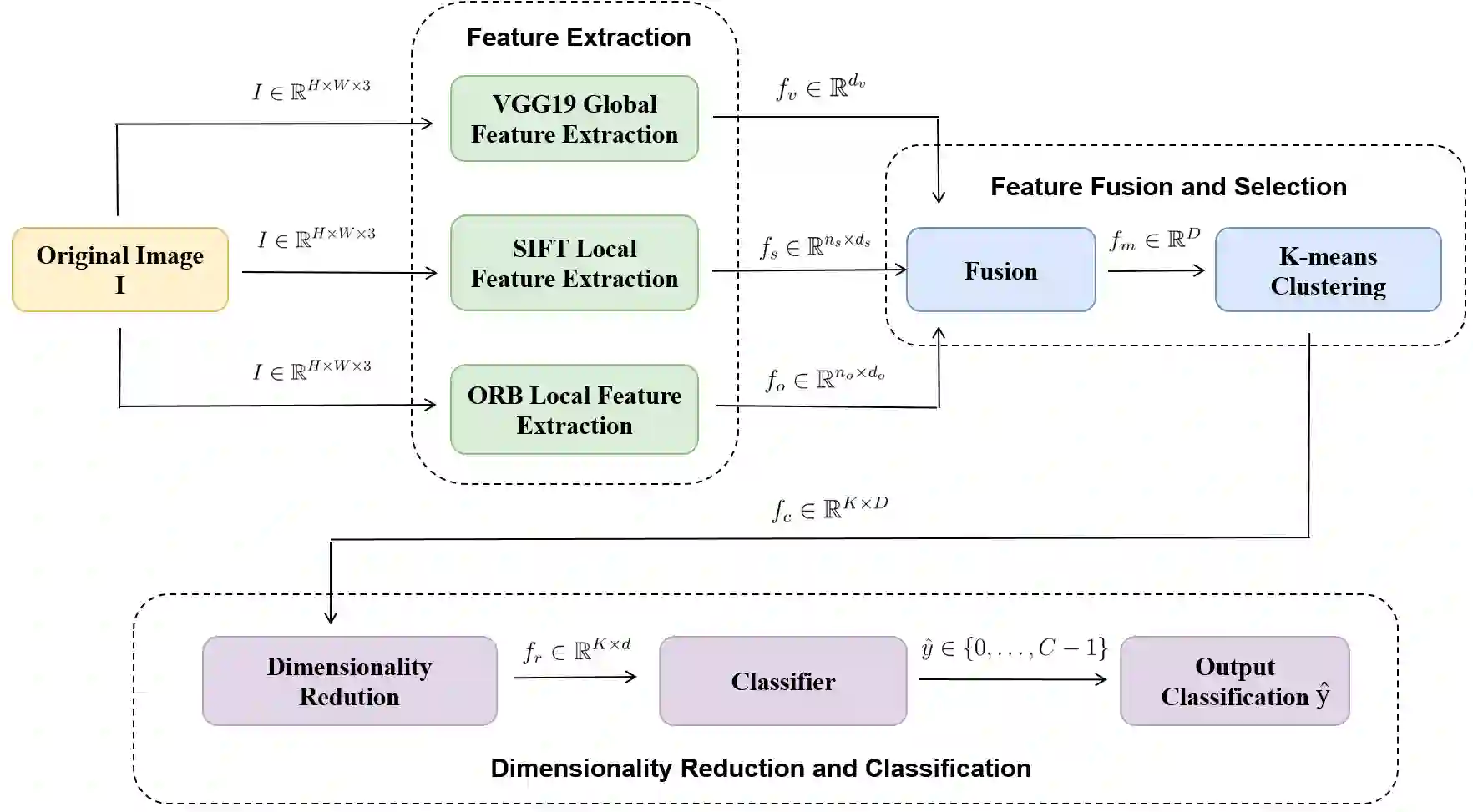

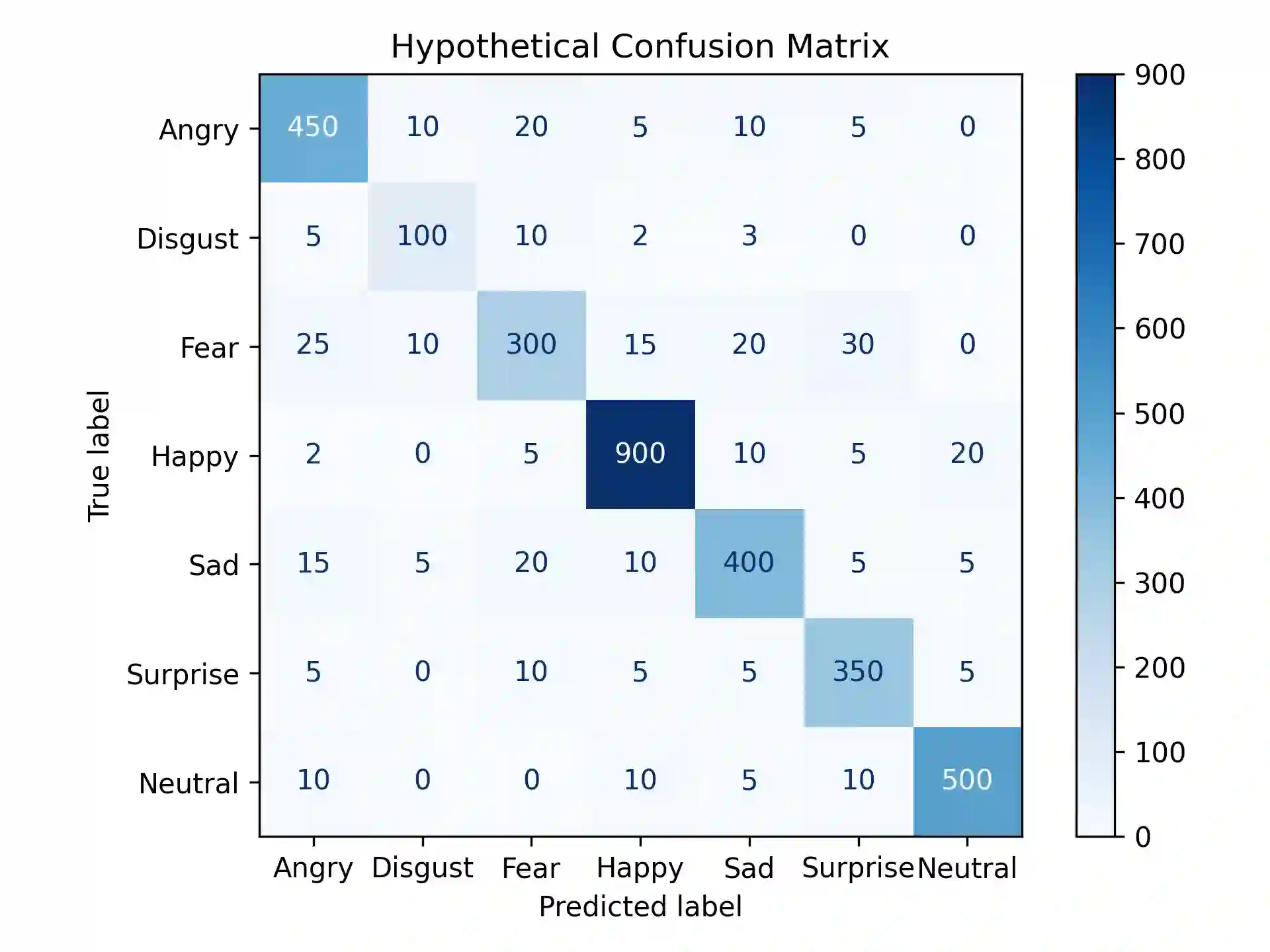

Facial expression classification remains a challenging task due to the high dimensionality and inherent complexity of facial image data. This paper presents Hy-Facial, a hybrid feature extraction framework that integrates deep learning with traditional image processing techniques, complemented by a systematic investigation of dimensionality reduction strategies. The proposed method fuses deep features extracted from the Visual Geometry Group 19-layer network (VGG19) with handcrafted local descriptors from the scale-invariant feature transform (SIFT) and Oriented FAST and Rotated BRIEF (ORB) algorithms to obtain rich and diverse image representations. To mitigate feature redundancy and reduce computational complexity, we comprehensively evaluate dimensionality reduction techniques alongside the feature extractors. Among the techniques evaluated, Uniform Manifold Approximation and Projection (UMAP) proves the most effective, preserving both the local and global structure of the high-dimensional feature space. The resulting Hy-Facial pipeline combines VGG19, SIFT, and ORB for feature extraction, followed by K-means clustering and UMAP for dimensionality reduction, and achieves a classification accuracy of 83.3% on the facial expression recognition (FER) dataset. These findings underscore the pivotal role of dimensionality reduction, not merely as a preprocessing step but as an essential component for improving feature quality and overall classification performance.
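The abstract does not spell out how SIFT and ORB descriptors, whose number varies from image to image, are combined with the fixed-length VGG19 vector. A common approach, assumed here and not confirmed by the text, is a bag-of-visual-words encoding: K-means builds a vocabulary of descriptor prototypes, and each image becomes a histogram of nearest-prototype counts that can be concatenated with the deep features. The NumPy sketch below illustrates only that fusion step. The helper names (`kmeans`, `nearest`, `orb_bits`, `bovw_histogram`, `fuse`), the vocabulary sizes, and the 4096-dimensional deep vector (the width of VGG19's fully connected layers) are hypothetical choices, and random arrays stand in for real extractor outputs.

```python
import numpy as np


def kmeans(data, k, n_iter=20, seed=0):
    """Plain Lloyd's k-means; returns a (k, d) array of centroids."""
    rng = np.random.default_rng(seed)
    centroids = data[rng.choice(len(data), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        labels = nearest(data, centroids)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        for j in range(k):
            members = data[labels == j]
            if len(members):
                centroids[j] = members.mean(axis=0)
    return centroids


def nearest(points, centroids):
    """Index of the closest centroid (squared Euclidean) for each point."""
    dists = ((points[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return dists.argmin(axis=1)


def orb_bits(desc):
    """Unpack 32-byte ORB descriptors into 256 0/1 features, so the squared
    Euclidean distance between two unpacked descriptors equals their Hamming distance."""
    return np.unpackbits(desc.astype(np.uint8), axis=1).astype(float)


def bovw_histogram(descriptors, centroids):
    """Encode a variable-size descriptor set as an L1-normalised k-bin histogram.
    An image with no descriptors maps to an all-zero histogram."""
    counts = np.bincount(nearest(descriptors, centroids), minlength=len(centroids))
    return counts / max(counts.sum(), 1)


def fuse(deep_vec, sift_desc, orb_desc, sift_vocab, orb_vocab):
    """Concatenate a deep feature vector with SIFT and ORB bag-of-visual-words histograms."""
    return np.concatenate([
        deep_vec,
        bovw_histogram(sift_desc, sift_vocab),
        bovw_histogram(orb_bits(orb_desc), orb_vocab),
    ])


rng = np.random.default_rng(0)
# Random stand-ins for real extractor outputs (assumed shapes):
# 128-d float SIFT descriptors, 32-byte ORB descriptors, a 4096-d VGG19 vector.
train_sift = rng.random((400, 128))
train_orb = rng.integers(0, 256, size=(400, 32), dtype=np.uint8)
sift_vocab = kmeans(train_sift, k=32)
orb_vocab = kmeans(orb_bits(train_orb), k=32)

x = fuse(rng.random(4096), rng.random((60, 128)),
         rng.integers(0, 256, size=(45, 32), dtype=np.uint8),
         sift_vocab, orb_vocab)
print(x.shape)  # (4160,)
```

The fused vectors for all images would then be stacked into a matrix and passed to a reducer; with the umap-learn package that step would look like `umap.UMAP(n_components=...).fit_transform(X)`, with the number of components left as a tuning choice. Unpacking ORB's binary descriptors into bits lets a Euclidean K-means respect Hamming distance, the metric ORB descriptors are normally matched with.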

翻译:面部表情分类由于面部图像数据的高维性和固有复杂性,仍然是一项具有挑战性的任务。本文提出Hy-Facial,一种混合特征提取框架,该框架集成了深度学习和传统图像处理技术,并辅以对降维策略的系统性研究。所提方法融合了从Visual Geometry Group 19层网络(VGG19)提取的深度特征,以及手工设计的局部描述符与尺度不变特征变换(SIFT)和定向FAST与旋转BRIEF(ORB)算法,以获得丰富多样的图像表示。为减少特征冗余并降低计算复杂度,我们对降维技术与特征提取进行了全面评估。其中,UMAP被确定为最有效的方法,它能同时保留高维特征空间的局部和全局结构。Hy-Facial流程集成了VGG19、SIFT和ORB进行特征提取,随后采用K-means聚类和UMAP进行降维,在面部表情识别(FER)数据集中实现了83.3%的分类准确率。这些发现强调了降维的关键作用,其不仅是预处理步骤,更是提升特征质量和整体分类性能的核心组成部分。