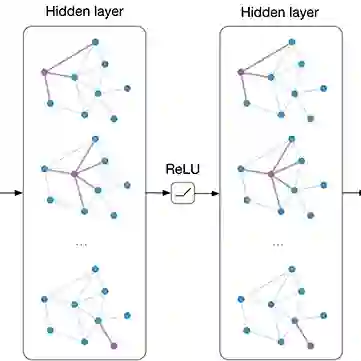

Recently, dense video captioning has made notable progress in detecting and describing all events in a long untrimmed video. Although promising results have been achieved, most existing methods do not sufficiently explore the scene evolution within an event's temporal proposal when generating captions, and therefore perform less satisfactorily when scenes and objects change over a relatively long proposal. To address this problem, we propose a two-stage graph-based partition-and-summarization (GPaS) framework for dense video captioning. In the "partition" stage, a whole event proposal is split into short video segments so that captions can be generated at a finer level. In the "summarization" stage, the generated sentences, which carry rich descriptive information for each segment, are summarized into one sentence that describes the whole event. We focus in particular on the "summarization" stage and propose a framework that effectively exploits the relationships between semantic words for summarization. We achieve this by treating semantic words as nodes in a graph and learning their interactions by coupling a Graph Convolutional Network (GCN) with a Long Short-Term Memory (LSTM) network, aided by visual cues. Two schemes of GCN-LSTM Interaction (GLI) modules are proposed to integrate the GCN and LSTM seamlessly. The effectiveness of our approach is demonstrated through an extensive comparison with state-of-the-art methods on two benchmarks, the ActivityNet Captions and YouCook II datasets.
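To make the data flow of the two stages more concrete, the following is a minimal pure-Python sketch under several assumptions that the abstract does not state. The proposal is split into equal-length segments. The "semantic words" are a hand-picked vocabulary set. Graph edges connect words that co-occur in the same segment sentence. Node features come from one standard GCN layer with symmetric normalization. The fixed `hidden` vector stands in for an LSTM decoder state, and the dot-product attention that reads the graph is a generic coupling chosen for illustration. It is not either of the paper's GLI schemes. All function names (`partition_proposal`, `build_word_graph`, `gcn_layer`, `attend`), the toy captions, and the weights are hypothetical.

```python
import math
from itertools import combinations

# Hypothetical sketch: equal-length partition, co-occurrence word graph,
# one GCN layer, and dot-product attention from a stand-in decoder state.
# This is NOT the paper's GLI module; it only illustrates the data flow.

def partition_proposal(start, end, num_segments):
    """Split an event proposal [start, end] (seconds) into equal-length segments."""
    step = (end - start) / num_segments
    return [(start + i * step, start + (i + 1) * step) for i in range(num_segments)]

def build_word_graph(sentences, semantic_vocab):
    """Nodes are semantic words; an edge links two words that co-occur in a sentence."""
    words = sorted({w for s in sentences for w in s.split() if w in semantic_vocab})
    index = {w: i for i, w in enumerate(words)}
    adj = [[0.0] * len(words) for _ in words]
    for s in sentences:
        present = sorted({index[w] for w in s.split() if w in index})
        for i, j in combinations(present, 2):
            adj[i][j] = adj[j][i] = 1.0
    return words, adj

def normalize_adjacency(adj):
    """Symmetric normalization D^{-1/2} (A + I) D^{-1/2}, with self-loops added."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def gcn_layer(norm_adj, features, weight):
    """One GCN layer: ReLU(A_norm @ H @ W)."""
    out = matmul(matmul(norm_adj, features), weight)
    return [[max(0.0, x) for x in row] for row in out]

def attend(hidden, node_feats):
    """Softmax dot-product attention of a decoder state over node features."""
    scores = [sum(h * f for h, f in zip(hidden, row)) for row in node_feats]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    context = [sum(w * row[d] for w, row in zip(weights, node_feats))
               for d in range(len(node_feats[0]))]
    return weights, context

# Toy usage: a 30-second proposal split into 3 segments. In the real pipeline a
# captioner would produce one sentence per segment; here they are hard-coded.
segments = partition_proposal(10.0, 40.0, 3)
sentences = ["a man cuts onions", "the man fries onions", "the man serves food"]
words, adj = build_word_graph(sentences, {"man", "cuts", "onions", "fries", "serves", "food"})
norm = normalize_adjacency(adj)
one_hot = [[1.0 if i == j else 0.0 for j in range(len(words))] for i in range(len(words))]
weight = [[0.1, 0.2], [0.3, -0.1], [0.0, 0.4], [0.2, 0.2], [-0.3, 0.1], [0.5, 0.0]]  # stand-in for learned W
node_feats = gcn_layer(norm, one_hot, weight)
hidden = [0.5, -0.2]  # stand-in for an LSTM hidden state
attn_weights, context = attend(hidden, node_feats)
```

In an actual model, node features would presumably come from word embeddings combined with visual cues rather than one-hot vectors, and the weights would be learned. The sketch only shows how segment captions can become a word graph whose GCN features a recurrent decoder could read at each step.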
