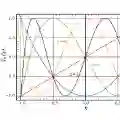

Consider two problems about an unknown probability distribution $p$:

1. How many samples from $p$ are required to test whether $p$ is supported on $n$ elements? Specifically, given samples from $p$, determine whether it is supported on at most $n$ elements or is "$\epsilon$-far" (in total variation distance) from being supported on $n$ elements.
2. Given $m$ samples from $p$, what is the largest lower bound on its support size that we can produce?

The best-known upper bound for problem (1) uses a general algorithm for learning the histogram of the distribution $p$, which requires $\Theta(\tfrac{n}{\epsilon^2 \log n})$ samples. We show that testing can be done more efficiently than learning the histogram, using only $O(\tfrac{n}{\epsilon \log n} \log(1/\epsilon))$ samples, nearly matching the best-known lower bound of $\Omega(\tfrac{n}{\epsilon \log n})$. This algorithm also provides a better solution to problem (2), producing larger lower bounds on support size than those that follow from previous work. The proof relies on an analysis of Chebyshev polynomial approximations outside the range where they are designed to be good approximations, and the paper is intended as an accessible, self-contained exposition of the Chebyshev polynomial method.
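As a minimal illustration of the phenomenon the proof exploits (not the paper's tester, whose details are not given here), the Python sketch below evaluates Chebyshev polynomials of the first kind on the standard interval $[-1, 1]$ and just outside it. It assumes the standard normalization; the paper may work with a rescaled interval, and the helper names `chebyshev_T` and `max_abs_on_interval` are hypothetical. Inside $[-1, 1]$ we have $T_k(\cos t) = \cos(kt)$, so $|T_k| \le 1$, whereas for $x \ge 1$ we have $T_k(x) = \cosh(k \operatorname{arccosh} x)$, which grows exponentially in $k$.

```python
import math


def chebyshev_T(k: int, x: float) -> float:
    """Evaluate the Chebyshev polynomial of the first kind T_k at x using the
    three-term recurrence T_{j+1}(x) = 2x*T_j(x) - T_{j-1}(x)."""
    if k < 0:
        raise ValueError("degree k must be non-negative")
    if k == 0:
        return 1.0
    prev, curr = 1.0, x  # T_0(x), T_1(x)
    for _ in range(k - 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


def max_abs_on_interval(k: int, num_points: int = 2001) -> float:
    """Approximate max |T_k(x)| over a uniform grid on [-1, 1] (endpoints included)."""
    step = 2.0 / (num_points - 1)
    return max(abs(chebyshev_T(k, -1.0 + i * step)) for i in range(num_points))


if __name__ == "__main__":
    k = 20
    # Inside [-1, 1]: T_k(cos t) = cos(k t), so |T_k| never exceeds 1.
    print(f"max |T_{k}| on [-1, 1] ~ {max_abs_on_interval(k):.6f}")
    # Just outside: T_k(x) = cosh(k * arccosh(x)) for x >= 1, exponential in k.
    for delta in (0.001, 0.01, 0.1):
        x = 1.0 + delta
        print(f"T_{k}(1 + {delta}) = {chebyshev_T(k, x):.4g}  "
              f"(closed form {math.cosh(k * math.acosh(x)):.4g})")
```

Running the script prints a maximum of about 1 on the interval and values of $T_{20}(1 + \delta)$ that increase rapidly with $\delta$. The three-term recurrence is used instead of the trigonometric formulas so that a single routine covers both regimes; for vectorized evaluation of Chebyshev series, NumPy's `numpy.polynomial.chebyshev` module offers equivalent functionality.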

翻译:考虑关于未知概率分布 $p$ 的两个问题:1. 检验 $p$ 是否支撑在 $n$ 个元素上需要多少样本?具体而言,给定来自 $p$ 的样本,判断其是否至多支撑在 $n$ 个元素上,或者其与支撑在 $n$ 个元素上的分布在总变差距离意义下“$\epsilon$-远离”。2. 给定 $p$ 的 $m$ 个样本,我们能够给出的关于其支撑集大小的最大下界是多少?对于问题(1),已知的最佳上界使用了一种学习分布 $p$ 直方图的通用算法,该算法需要 $\Theta(\tfrac{n}{\epsilon^2 \log n})$ 个样本。我们证明,检验可以比学习直方图更高效地完成,仅需 $O(\tfrac{n}{\epsilon \log n} \log(1/\epsilon))$ 个样本,这几乎匹配了已知的最佳下界 $\Omega(\tfrac{n}{\epsilon \log n})$。该算法也为问题(2)提供了更好的解决方案,能够产生比先前工作所得更大的支撑集大小下界。证明依赖于对切比雪夫多项式近似在其设计优良的区间之外行为的分析,本文旨在提供关于切比雪夫多项式方法的易于理解且自包含的阐述。