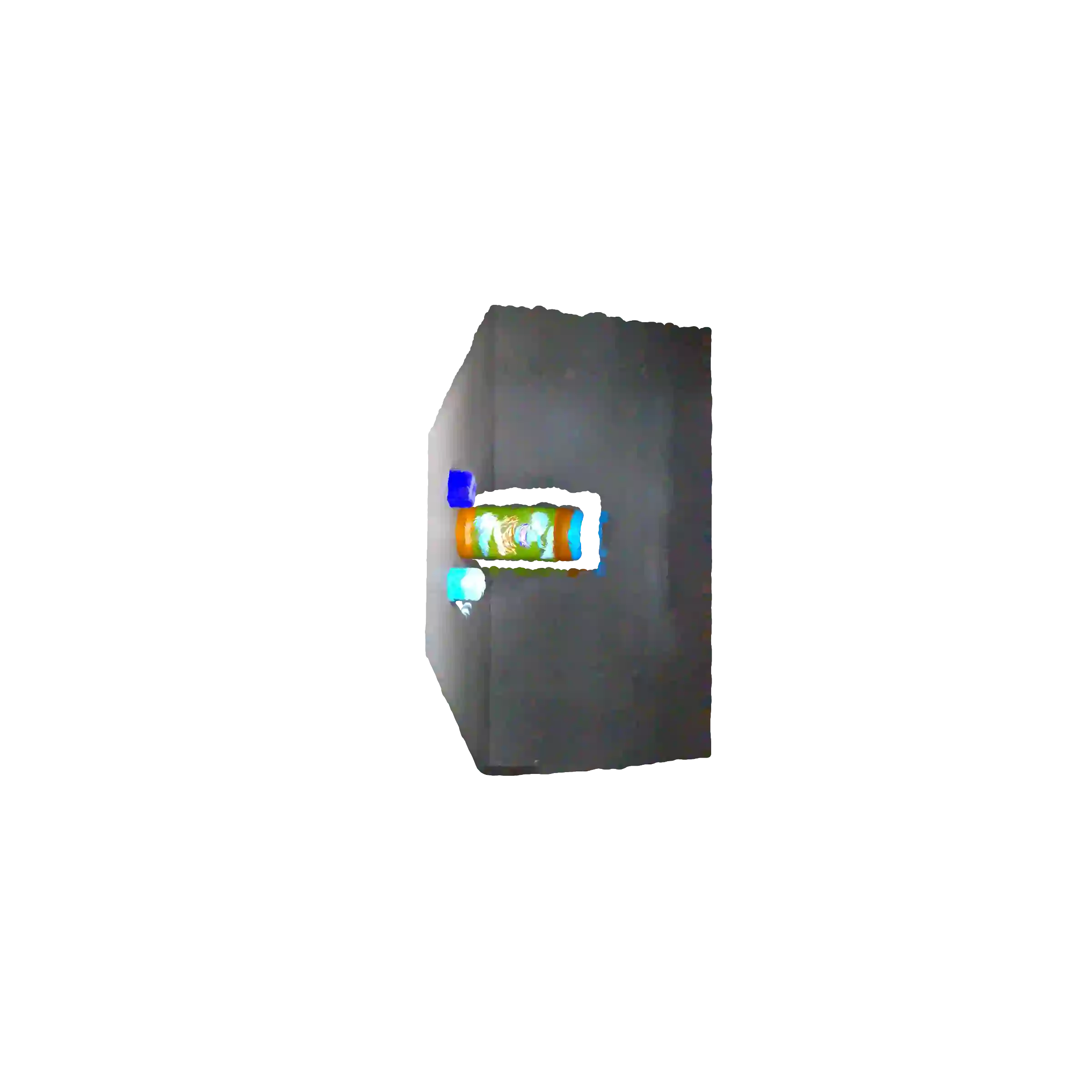

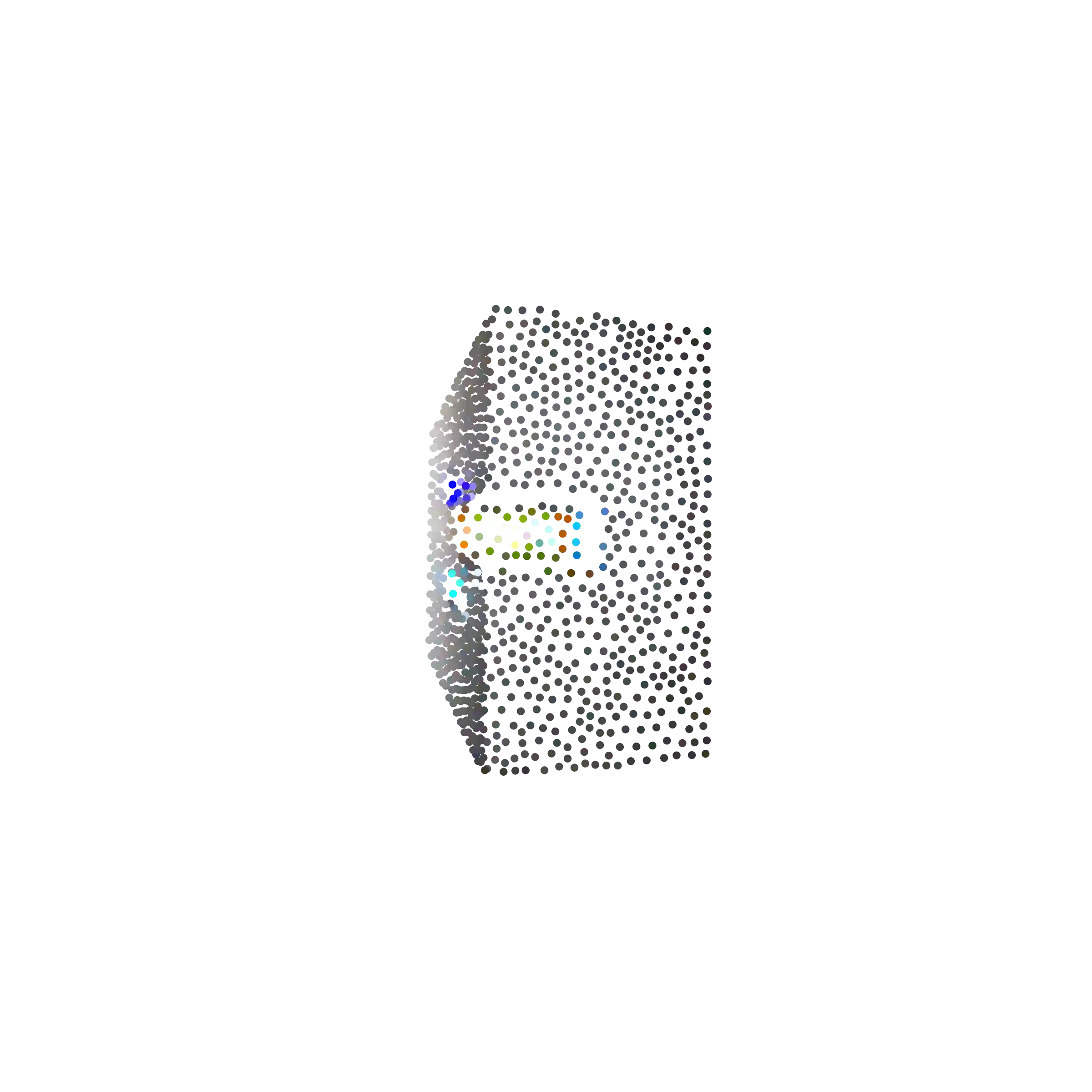

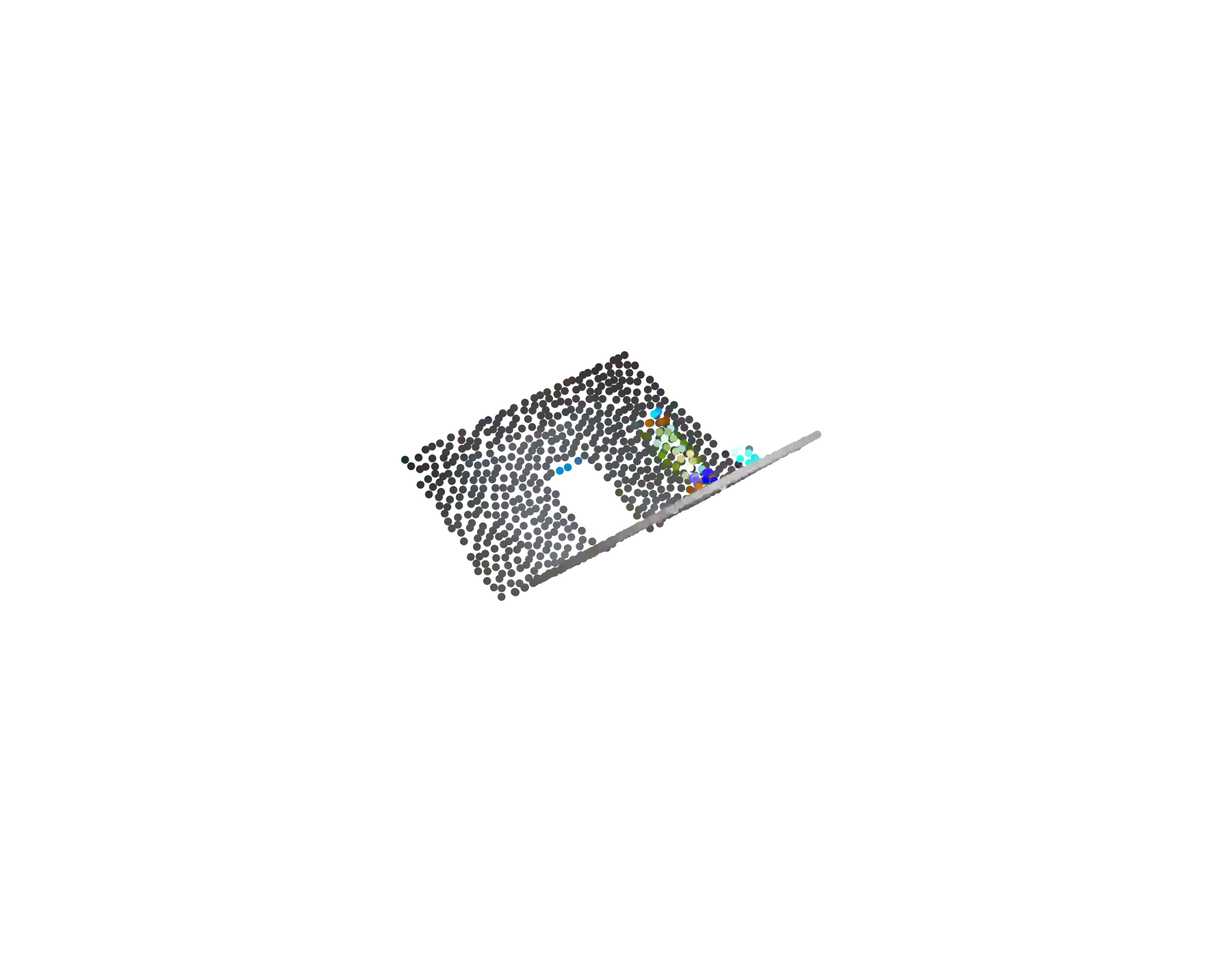

Reinforcement Learning (RL) from raw visual input has achieved impressive successes in recent years, yet it remains fragile to out-of-distribution variations such as changes in lighting, color, and viewpoint. Point Cloud Reinforcement Learning (PC-RL) offers a promising alternative by mitigating appearance-based brittleness, but its sensitivity to camera pose mismatches still undermines reliability in realistic settings. To address this challenge, we propose PCA Point Cloud (PPC), a canonicalization framework tailored to downstream robotic control. PPC maps point clouds under arbitrary rigid-body transformations to a unique canonical pose, aligning observations to a consistent frame and thereby substantially reducing viewpoint-induced inconsistencies. Our experiments show that PPC improves robustness to unseen camera poses across challenging robotic tasks, offering a principled alternative to domain randomization.
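The abstract does not spell out how PPC resolves the canonical pose. As a rough illustration of PCA-based canonicalization in general, the sketch below centers a cloud at its centroid, aligns it to its principal axes sorted by variance, and resolves the sign ambiguity of the eigenvectors with a third-moment (skewness) rule plus the right-hand rule. The function names `pca_canonicalize` and `random_rotation` and the skew-based sign convention are hypothetical choices for illustration, not PPC's actual procedure; the sketch also assumes distinct eigenvalues and non-zero skew along the first two axes.

```python
from __future__ import annotations

import numpy as np


def pca_canonicalize(points: np.ndarray) -> tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Map an (N, 3) point cloud into a PCA-aligned canonical frame (hypothetical sketch).

    Returns (canonical, rotation, centroid) with
    canonical == (points - centroid) @ rotation, where rotation is a proper
    rotation matrix whose columns are the principal axes (major to minor).
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    centered = pts - centroid  # removes the translation component

    # Covariance of the centered cloud; eigh returns eigenvalues in ascending order.
    cov = centered.T @ centered / len(centered)
    eigvals, eigvecs = np.linalg.eigh(cov)
    axes = eigvecs[:, np.argsort(eigvals)[::-1]]  # copy, columns sorted major -> minor

    # Each eigenvector is defined only up to sign. Assumed convention: flip the
    # first two axes so the third moment (skew) of the projected points is positive.
    for k in range(2):
        if np.sum((centered @ axes[:, k]) ** 3) < 0:
            axes[:, k] = -axes[:, k]

    # Fix the last axis with the right-hand rule so det(axes) == +1 (no reflection).
    axes[:, 2] = np.cross(axes[:, 0], axes[:, 1])

    return centered @ axes, axes, centroid


def random_rotation(rng: np.random.Generator) -> np.ndarray:
    """Draw a random 3x3 proper rotation via QR decomposition (test helper)."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # make the factorization unique
    q[:, 0] *= np.sign(np.linalg.det(q))  # flip one column if det == -1
    return q


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Skewed, anisotropic toy cloud so the principal axes and signs are well defined.
    cloud = rng.exponential(size=(500, 3)) * np.array([3.0, 2.0, 1.0])
    moved = cloud @ random_rotation(rng).T + np.array([0.5, -1.0, 2.0])

    a, _, _ = pca_canonicalize(cloud)
    b, _, _ = pca_canonicalize(moved)
    print("same canonical pose:", np.allclose(a, b, atol=1e-6))
```

Running the file checks that a rotated and translated copy of a toy cloud lands on the same canonical coordinates. Fixing the third axis with a cross product rather than a third skew test keeps the transform a proper rotation, matching the rigid-body setting; like any PCA-based scheme, this sketch becomes ambiguous for symmetric shapes with repeated eigenvalues or near-zero skew.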
