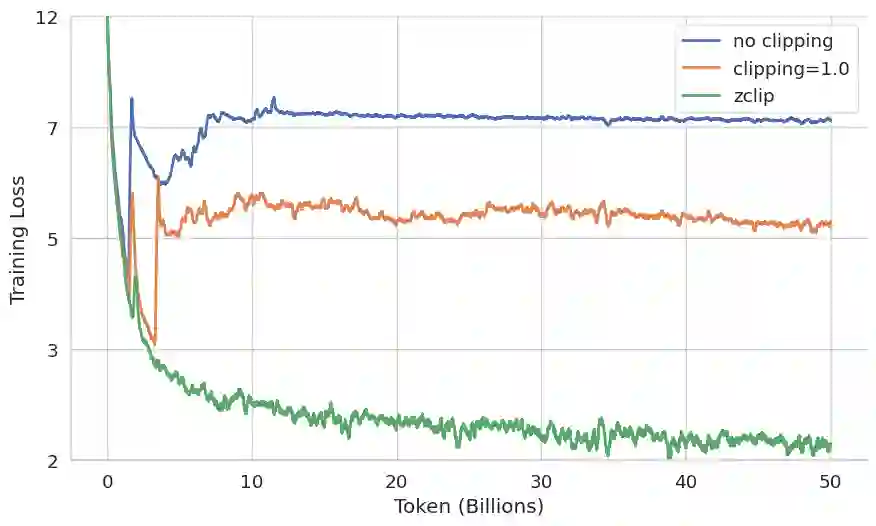

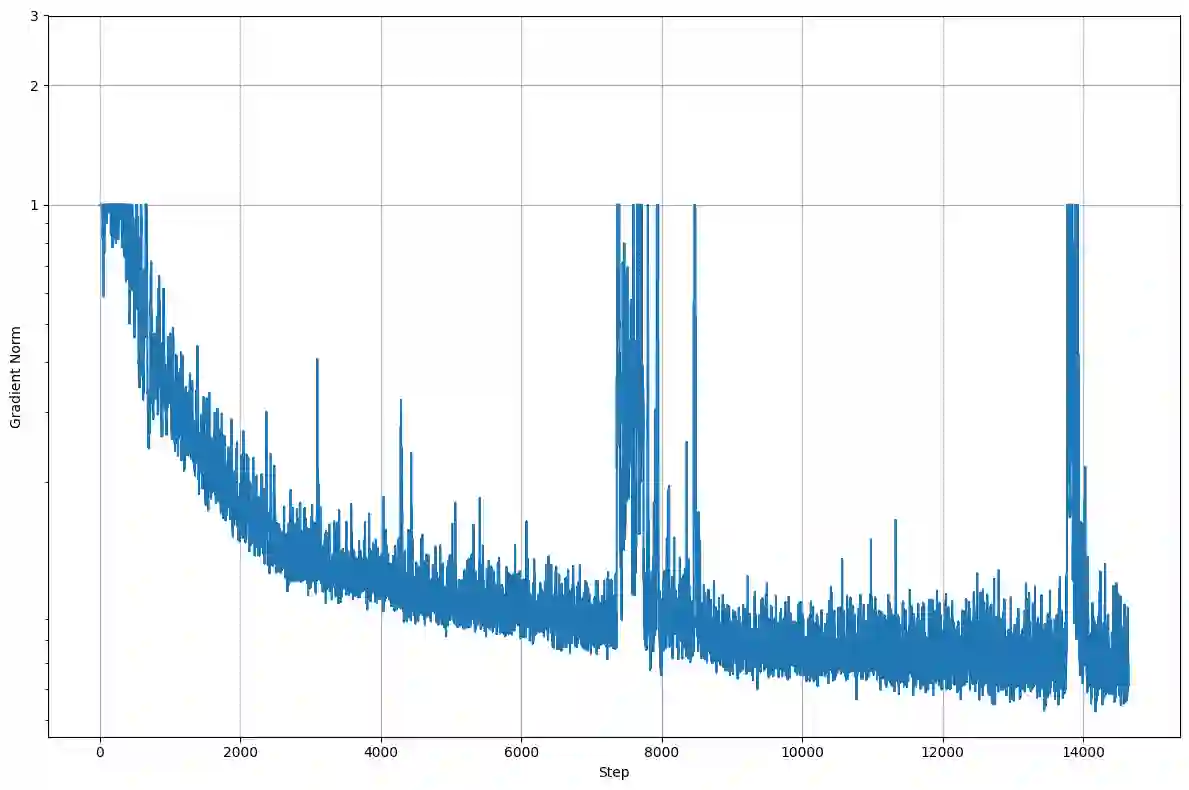

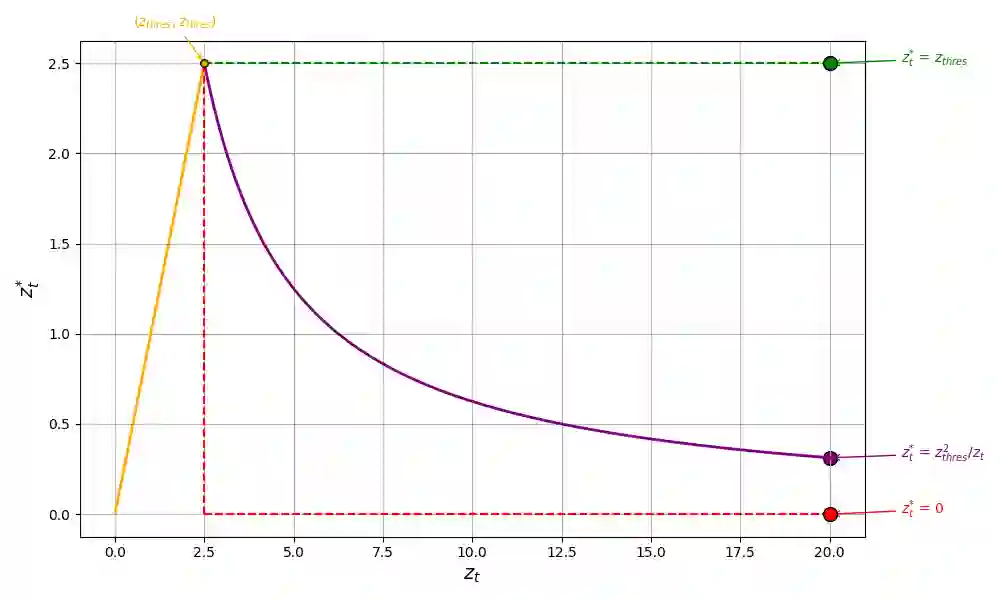

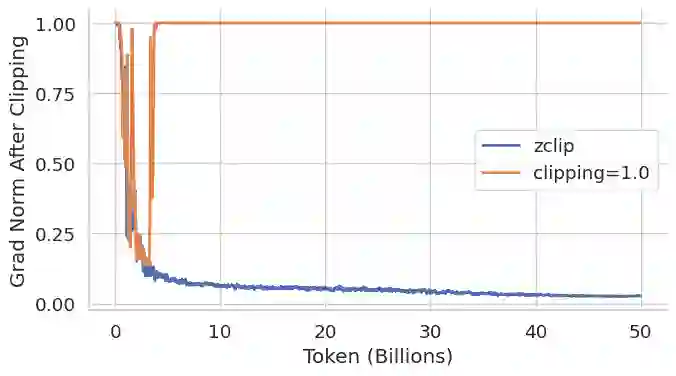

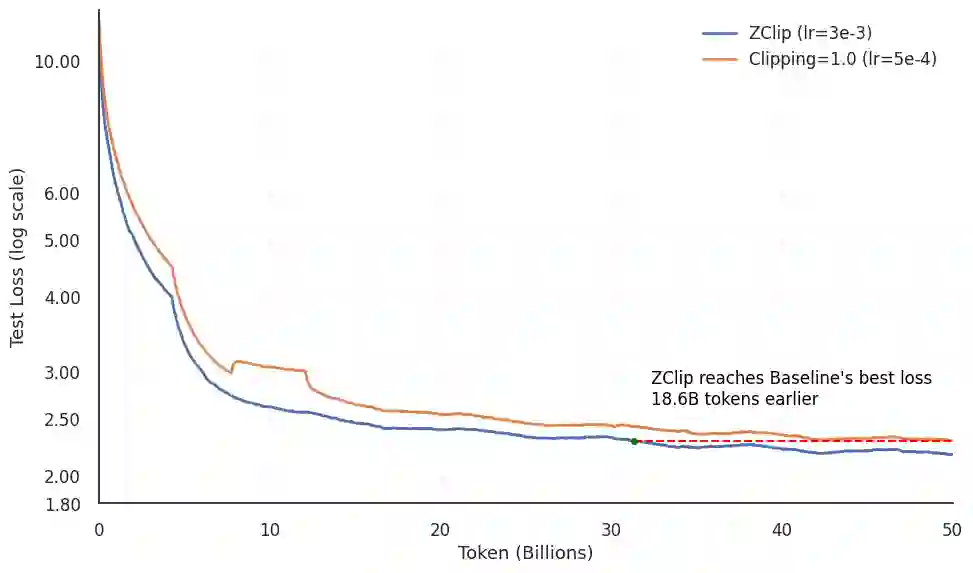

Training large language models (LLMs) presents numerous challenges, including gradient instability and loss spikes. These phenomena can lead to catastrophic divergence, requiring costly checkpoint restoration and data batch skipping. Traditional gradient clipping techniques, such as constant or norm-based methods, fail to address these issues effectively because they rely on fixed thresholds or heuristics, which leads to inefficient learning and frequent manual intervention. In this work, we propose ZClip, an adaptive gradient clipping algorithm that dynamically adjusts the clipping threshold based on statistical properties of gradient norms over time. Unlike prior reactive strategies, ZClip proactively adapts to training dynamics without making any prior assumptions about the scale and the temporal evolution of gradient norms. At its core, it leverages z-score-based anomaly detection to identify and mitigate large gradient spikes, preventing malignant loss spikes while otherwise leaving convergence undisturbed. Our code is available at: https://github.com/bluorion-com/ZClip.
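The core idea, z-score anomaly detection on the gradient norm against running statistics, can be illustrated with a minimal sketch. This is not the ZClip implementation from the linked repository. The class name `ZScoreClipper`, the EMA smoothing factor `alpha`, the threshold `z_thresh`, the warm-up length, and the rule of pulling an outlier norm down to `mean + z_thresh * std` are all hypothetical choices made for illustration. The returned factor would be multiplied into every gradient before the optimizer step.

```python
import math
from typing import List, Optional


class ZScoreClipper:
    """Hypothetical sketch of z-score-based adaptive gradient-norm clipping.

    Assumptions (not taken from the ZClip source): EMA smoothing factor
    `alpha`, z-score threshold `z_thresh`, `warmup_steps` unclipped steps used
    to seed the statistics, and clipping an outlier to mean + z_thresh * std.
    """

    def __init__(self, alpha: float = 0.97, z_thresh: float = 2.5,
                 warmup_steps: int = 25, eps: float = 1e-6) -> None:
        self.alpha = alpha
        self.z_thresh = z_thresh
        self.warmup_steps = max(1, warmup_steps)
        self.eps = eps
        self._warmup: List[float] = []
        self.mean: Optional[float] = None
        self.var: Optional[float] = None

    def step(self, grad_norm: float) -> float:
        """Return a scale factor in (0, 1] to multiply the gradients by."""
        if self.mean is None:
            # Warm-up: record norms without clipping, then seed mean/variance.
            self._warmup.append(grad_norm)
            if len(self._warmup) == self.warmup_steps:
                n = len(self._warmup)
                self.mean = sum(self._warmup) / n
                self.var = sum((g - self.mean) ** 2 for g in self._warmup) / n
            return 1.0

        std = math.sqrt(self.var) + self.eps
        z = (grad_norm - self.mean) / std
        clipped = grad_norm
        if z > self.z_thresh:
            # Anomalous spike: pull the norm back to the detection threshold.
            clipped = self.mean + self.z_thresh * std

        # EMA update of mean and squared deviation, using the clipped norm so
        # that a single spike does not inflate the running statistics.
        delta = clipped - self.mean
        self.mean += (1 - self.alpha) * delta
        self.var = self.alpha * self.var + (1 - self.alpha) * delta ** 2
        return clipped / grad_norm if grad_norm > 0 else 1.0


# Usage: a short run of norms with one spike at index 6.
clipper = ZScoreClipper(warmup_steps=5)
norms = [1.0, 1.1, 0.9, 1.05, 0.95, 1.02, 8.0, 1.0]
scales = [clipper.step(g) for g in norms]
```

Feeding the clipped norm, rather than the raw spike, into the running statistics is a design choice of this sketch: it keeps one outlier from widening the threshold for the steps that follow, while ordinary steps (here every norm except the spike) pass through with a scale of 1.0.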

翻译:大语言模型(LLM)的训练面临诸多挑战,包括梯度不稳定性和损失值尖峰现象。这些现象可能导致灾难性发散,需要耗费大量资源进行检查点恢复和数据批次跳过。传统的梯度裁剪技术(如常数裁剪或基于范数的方法)由于依赖固定阈值或启发式规则,无法有效解决这些问题,导致学习效率低下且需要频繁的人工干预。本研究提出ZClip,一种自适应梯度裁剪算法,该算法基于梯度范数随时间变化的统计特性动态调整裁剪阈值。与以往的反应式策略不同,ZClip无需对梯度范数的尺度及时间演化做任何先验假设,即可主动适应训练动态。其核心在于利用基于z分数的异常检测机制来识别并缓解大幅梯度尖峰,从而在避免恶性损失尖峰的同时不影响模型的正常收敛。我们的代码已开源:https://github.com/bluorion-com/ZClip。