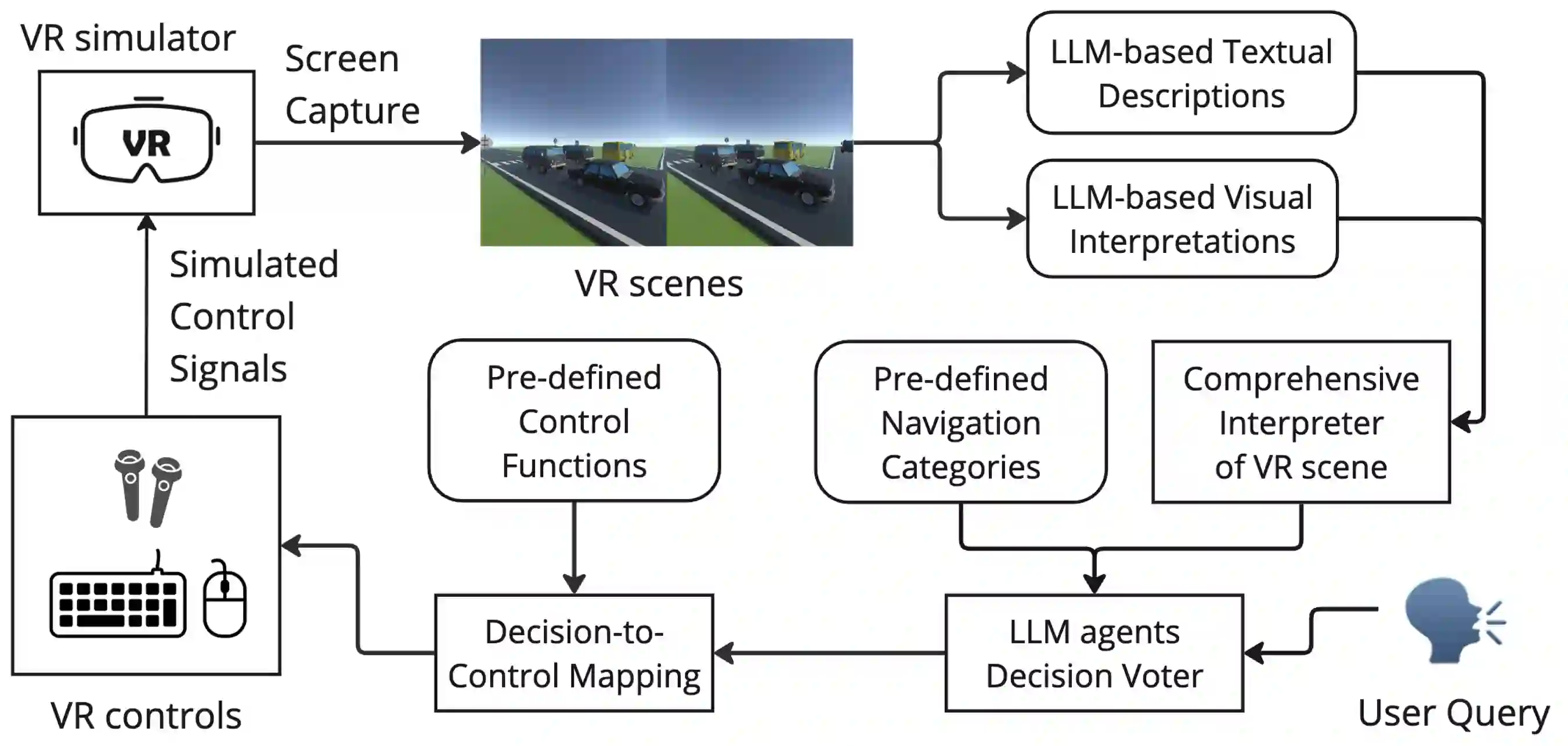

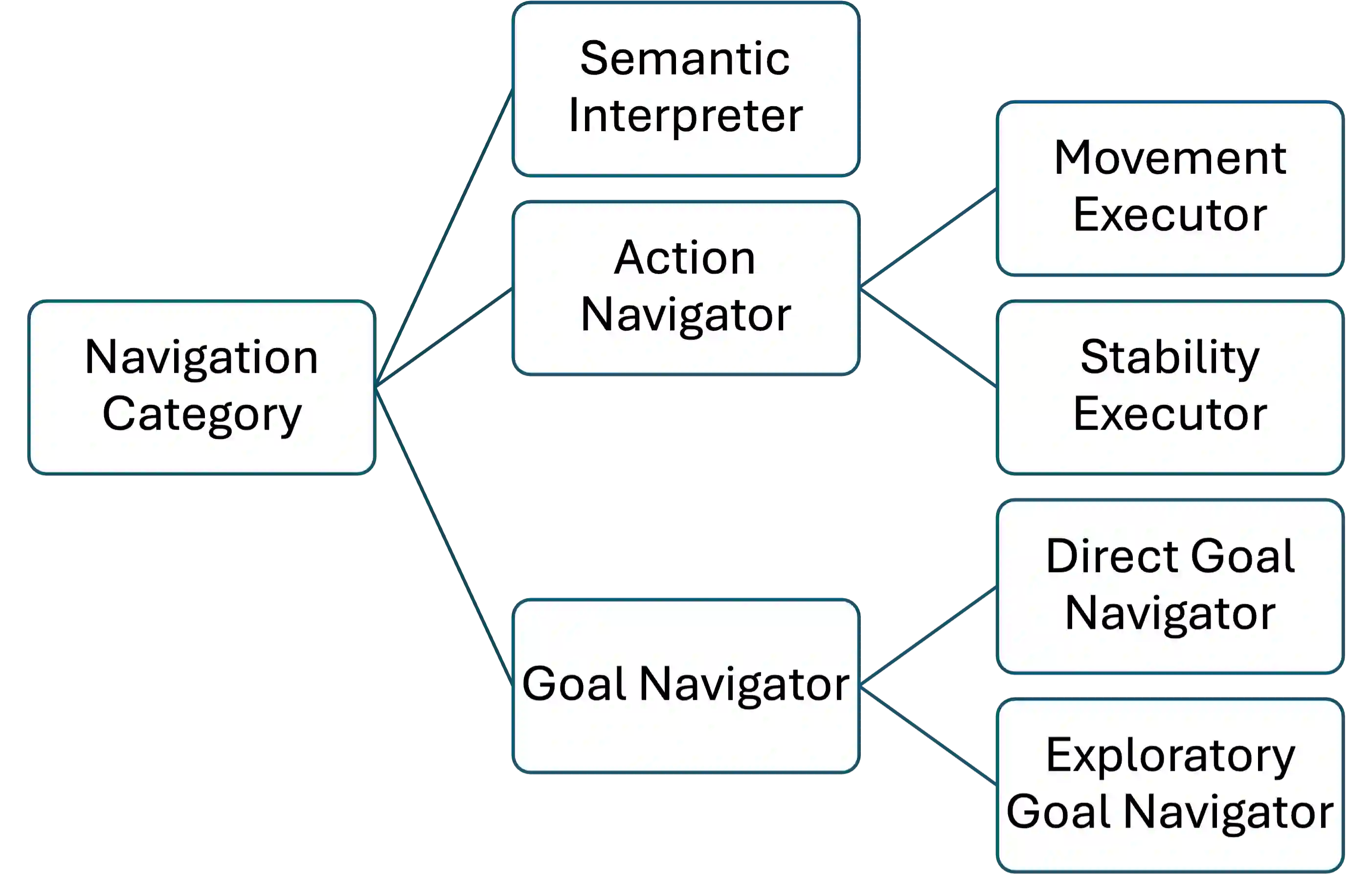

Navigation is one of the fundamental tasks for automated exploration in Virtual Reality (VR). Existing technologies primarily focus on path optimization in 360-degree image datasets and 3D simulators, and cannot be directly applied to immersive VR environments. To address this gap, we present NavAI, a generalizable large language model (LLM)-based navigation framework that supports both basic actions and complex goal-directed tasks across diverse VR applications. We evaluate NavAI in three distinct VR environments through goal-oriented and exploratory tasks. Results show that it achieves high accuracy, with an 89% success rate in goal-oriented tasks. Our analysis also highlights the current limitations of relying entirely on LLMs, particularly in scenarios that require dynamic goal assessment. Finally, we discuss the shortcomings observed during the experiments and outline directions for future research.
