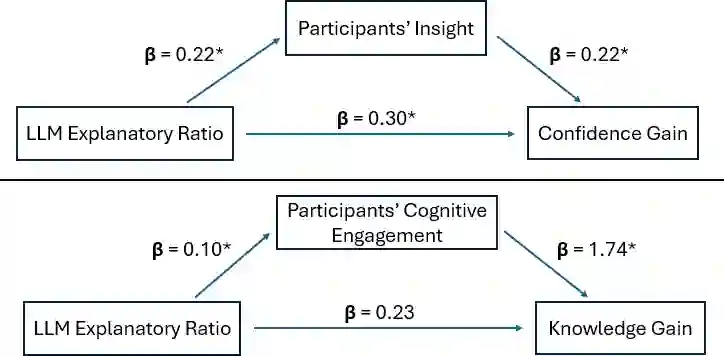

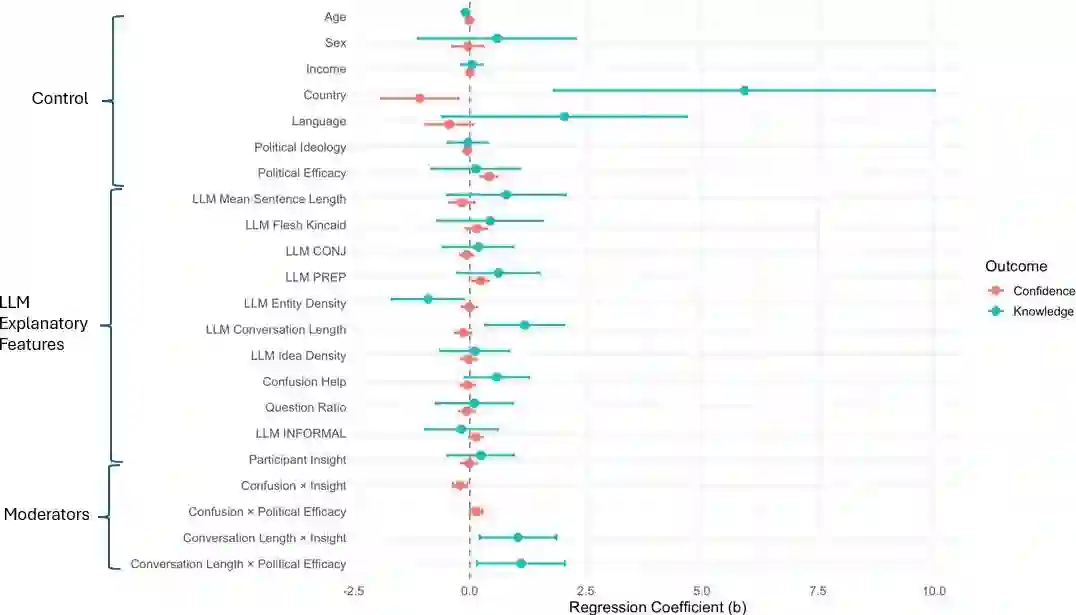

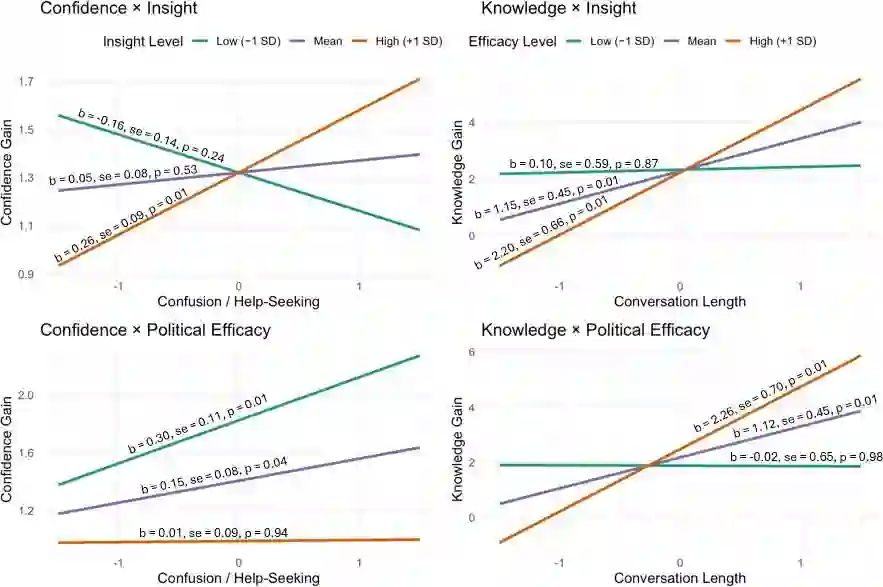

Large language models (LLMs) are increasingly used as conversational partners for learning, yet the interactional dynamics that support users' learning and engagement remain understudied. We analyze linguistic and interactional features of both LLM and participant messages across 397 human-LLM conversations about socio-political issues to identify the mechanisms and conditions under which LLM explanations shape changes in political knowledge and confidence. Mediation analyses reveal that the effect of LLM explanatory richness on confidence is partially mediated by users' reflective insight, whereas its effect on knowledge gain is fully mediated by users' cognitive engagement. Moderation analyses show that these effects are highly conditional and vary with political efficacy. Confidence gains depend on how high-efficacy users experience and resolve uncertainty. Knowledge gains depend on high-efficacy users' ability to leverage extended interaction, with longer conversations primarily benefiting reflective users. In summary, we find that learning from LLMs is an interactional achievement, not a uniform outcome of better explanations. The findings underscore that, when designing human-AI interactive systems, LLM explanatory behavior should be aligned with users' engagement states to support effective learning.
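The abstract distinguishes partial from full mediation and reports effects moderated by political efficacy. To illustrate what those terms mean, here is a minimal product-of-coefficients sketch in Python on synthetic data. It assumes simple linear OLS models and is not the authors' analysis pipeline, which the abstract does not specify (for example, whether bootstrapped confidence intervals or mixed-effects models were used). All variable names (`richness`, `engagement`, `insight`, `efficacy`) and effect sizes are hypothetical.

```python
import numpy as np

def ols(y, *predictors):
    """Fit y on an intercept plus the given predictors; return coefficients, intercept first."""
    X = np.column_stack([np.ones_like(y), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

def mediation(x, m, y):
    """Product-of-coefficients mediation for X -> M -> Y (point estimates only, no CIs)."""
    total = ols(y, x)[1]           # c: total effect of X on Y
    a = ols(m, x)[1]               # a: effect of X on the mediator M
    _, direct, b = ols(y, x, m)    # c': direct effect of X; b: effect of M on Y given X
    return {"total": total, "direct": direct, "indirect": a * b}

def moderation(x, w, y):
    """Fit Y = b0 + b1*X + b2*W + b3*X*W and return [b0, b1, b2, b3]."""
    return ols(y, x, w, x * w)

def simple_slope(coef, w_value):
    """Effect of X on Y at a chosen moderator value: b1 + b3 * W."""
    return coef[1] + coef[3] * w_value

# Synthetic data only: every variable and coefficient below is invented for illustration.
rng = np.random.default_rng(0)
n = 2000
richness = rng.normal(size=n)                       # X: hypothetical explanatory-richness score
engagement = 0.5 * richness + rng.normal(size=n)    # M1: hypothetical cognitive-engagement score
insight = 0.5 * richness + rng.normal(size=n)       # M2: hypothetical reflective-insight score
knowledge = 0.6 * engagement + rng.normal(size=n)   # no direct X path -> full mediation
confidence = 0.3 * richness + 0.6 * insight + rng.normal(size=n)  # direct path -> partial

efficacy = rng.normal(size=n)                       # W: hypothetical efficacy score
gain = 0.2 * richness + 0.1 * efficacy + 0.4 * richness * efficacy + rng.normal(size=n)

full = mediation(richness, engagement, knowledge)
partial = mediation(richness, insight, confidence)
mod = moderation(richness, efficacy, gain)

print("full:", {k: round(float(v), 2) for k, v in full.items()})
print("partial:", {k: round(float(v), 2) for k, v in partial.items()})
print("slope of X at W=+1:", round(float(simple_slope(mod, 1.0)), 2),
      "at W=-1:", round(float(simple_slope(mod, -1.0)), 2))
```

Full mediation appears as a direct effect `c'` near zero alongside a nonzero indirect effect `a*b`; partial mediation leaves a nonzero `c'`. With OLS on a single sample, the decomposition `c = c' + a*b` holds exactly. Moderation appears as a nonzero interaction coefficient `b3`, so the slope of X differs across levels of W. A real analysis would also report bootstrapped confidence intervals for `a*b` rather than point estimates alone.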
