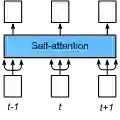

The quadratic complexity of the self-attention mechanism is a significant impediment to applying Transformer models to long sequences. This work explores computational principles derived from astrocytes (glial cells critical for biological memory and synaptic modulation) as a complement to conventional architectural modifications for efficient self-attention. We introduce the Recurrent Memory Augmented Astromorphic Transformer (RMAAT), an architecture that integrates abstracted astrocyte functionalities. RMAAT processes its input recurrently, segment by segment, with persistent memory tokens that propagate contextual information from one segment to the next. These tokens are modulated by an adaptive compression mechanism governed by a novel retention factor derived from simulated astrocyte long-term plasticity (LTP). Within each segment, attention uses an efficient, linear-complexity mechanism inspired by astrocyte short-term plasticity (STP). Training uses Astrocytic Memory Replay Backpropagation (AMRB), a novel algorithm designed for memory efficiency in recurrent networks. Evaluations on the Long Range Arena (LRA) benchmark demonstrate RMAAT's competitive accuracy and substantial improvements in computational and memory efficiency, indicating the potential of incorporating astrocyte-inspired dynamics into scalable sequence models.
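To make the processing pattern concrete, here is a minimal pure-Python sketch written under several stated assumptions, since the abstract does not give RMAAT's equations. The STP-inspired attention is replaced by standard kernelized linear attention with the `elu(x) + 1` feature map; queries, keys and values are the raw token vectors (no learned projections); and the LTP-derived retention factor is replaced by a hypothetical constant `retention` that blends old and updated memory tokens. The names `feature_map`, `linear_attention` and `process_sequence` are illustrative, not taken from the paper, and AMRB training is not modeled. The sketch shows where the linear cost comes from: each segment's keys and values are summarized in a `d x d_v` matrix instead of an `n x n` attention map, and only the memory tokens cross segment boundaries.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def feature_map(x: Vector) -> Vector:
    """elu(x) + 1, elementwise; always positive, so attention weights are too."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Kernelized attention in O(n * d * d_v) time instead of O(n^2).

    Row i equals sum_j sim(q_i, k_j) v_j / sum_j sim(q_i, k_j) with
    sim(q, k) = phi(q) . phi(k), computed through shared key/value summaries.
    """
    d, dv = len(k[0]), len(v[0])
    s = [[0.0] * dv for _ in range(d)]  # S = sum_j phi(k_j) v_j^T  (d x d_v)
    z = [0.0] * d                       # z = sum_j phi(k_j)        (d)
    for kj, vj in zip(k, v):
        fk = feature_map(kj)
        for a in range(d):
            z[a] += fk[a]
            for b in range(dv):
                s[a][b] += fk[a] * vj[b]
    out = []
    for qi in q:
        fq = feature_map(qi)
        denom = sum(fq[a] * z[a] for a in range(d))
        out.append([sum(fq[a] * s[a][b] for a in range(d)) / denom
                    for b in range(dv)])
    return out


def process_sequence(tokens: Matrix, segment_len: int, num_mem: int,
                     retention: float) -> Matrix:
    """Run segments left to right, carrying num_mem memory tokens between them.

    Hypothetical simplifications: identity Q/K/V projections and a constant
    `retention` in [0, 1] standing in for RMAAT's LTP-derived retention factor.
    """
    dim = len(tokens[0])
    memory = [[0.0] * dim for _ in range(num_mem)]
    for start in range(0, len(tokens), segment_len):
        segment = tokens[start:start + segment_len]
        x = memory + segment              # memory tokens prepended to segment
        y = linear_attention(x, x, x)     # cost linear in segment length
        updated = y[:num_mem]             # outputs at the memory positions
        # Blend old and updated memory: retention=1 freezes it, 0 overwrites it.
        memory = [[retention * m + (1.0 - retention) * u
                   for m, u in zip(old, new)]
                  for old, new in zip(memory, updated)]
    return memory


if __name__ == "__main__":
    seq = [[math.sin(0.1 * t), math.cos(0.1 * t)] for t in range(10)]
    print(process_sequence(seq, segment_len=4, num_mem=2, retention=0.5))
```

Because only `num_mem` vectors are carried forward, the state kept between segments does not grow with total sequence length in this sketch. A `retention` close to 1 preserves more of the earlier context; in RMAAT this factor is instead governed by simulated astrocyte LTP rather than fixed by hand.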
