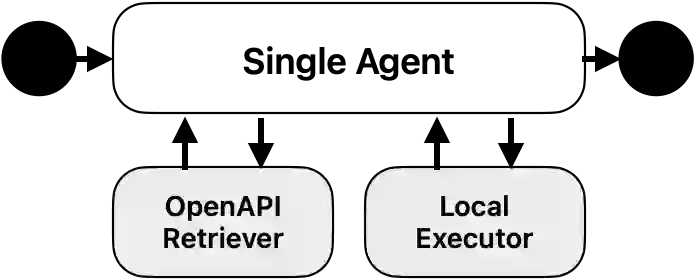

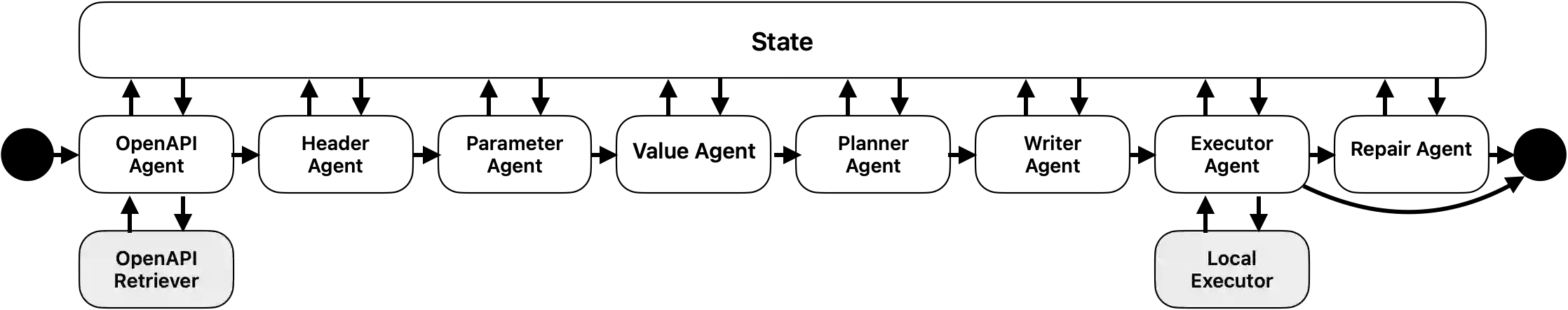

Representational State Transfer (REST) APIs are a cornerstone of modern cloud-native systems. Ensuring their reliability demands automated test suites that exercise diverse and boundary-level behaviors. Nevertheless, designing such test cases remains a challenging and resource-intensive endeavor. This study extends prior work on Large Language Model (LLM)-based test amplification by evaluating single-agent and multi-agent configurations on four additional cloud applications. The amplified test suites maintain semantic validity with minimal human intervention. The results demonstrate that agentic LLM systems generalize effectively across heterogeneous API architectures, increasing endpoint and parameter coverage while also revealing defects. Moreover, a detailed analysis of computational cost, runtime, and energy consumption highlights the trade-offs among accuracy, scalability, and efficiency. These findings underscore the potential of LLM-driven test amplification to advance the automation and sustainability of REST API testing in complex cloud environments.
