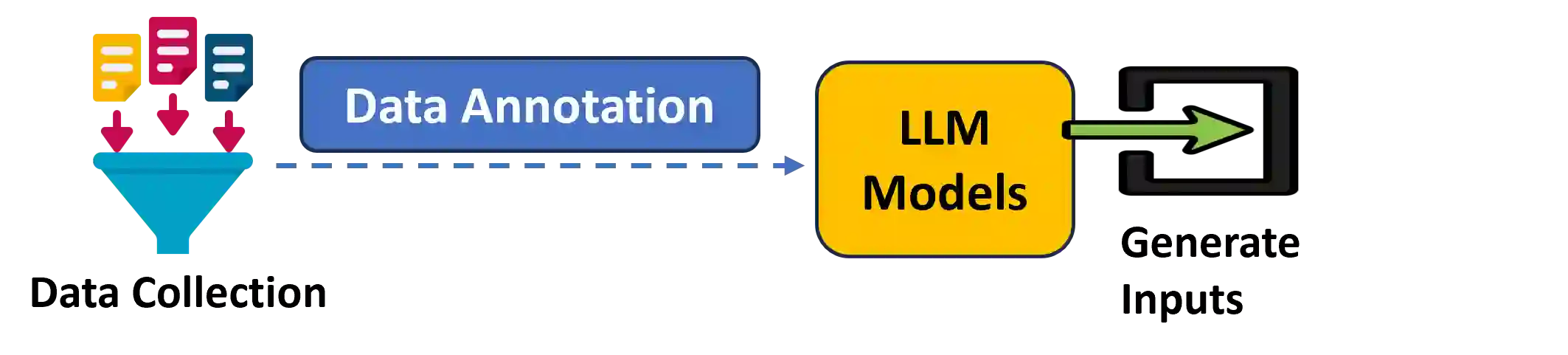

Failure-inducing inputs play a crucial role in diagnosing and analyzing software bugs. Bug reports typically contain these inputs, which developers extract to facilitate debugging. Since bug reports are written in natural language, prior research has leveraged various Natural Language Processing (NLP) techniques for automated input extraction. With the advent of Large Language Models (LLMs), an important research question arises: how effectively can generative LLMs extract failure-inducing inputs from bug reports? In this paper, we propose LLPut, a technique to empirically evaluate the performance of three open-source generative LLMs -- LLaMA, Qwen, and Qwen-Coder -- in extracting relevant inputs from bug reports. We conduct an experimental evaluation on a dataset of 206 bug reports to assess the accuracy and effectiveness of these models. Our findings provide insights into the capabilities and limitations of generative LLMs in automated bug diagnosis.
