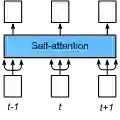

Attention mechanisms underpin the computational power of Transformer models, which have achieved remarkable success across diverse domains. Yet understanding and extending the principles underlying self-attention remains a key challenge for advancing artificial intelligence. Drawing inspiration from the multiscale dynamics of biological attention and from dynamical systems theory, we introduce Fractional Neural Attention (FNA), a principled, neuroscience-inspired framework for multiscale information processing. FNA models token interactions through Lévy diffusion governed by the fractional Laplacian, intrinsically realizing simultaneous short- and long-range dependencies across multiple scales. This mechanism yields greater expressivity and faster information mixing, advancing the foundational capacity of Transformers. Theoretically, we show that FNA's dynamics are governed by the fractional diffusion equation, and that the resulting attention networks exhibit larger spectral gaps and shorter path lengths, which are mechanistic signatures of enhanced computational efficiency. Empirically, FNA achieves competitive text-classification performance even with a single layer and a single head; it also improves performance in image processing and neural machine translation. Finally, the diffusion map algorithm from geometric harmonics enables dimensionality reduction of FNA weights while preserving the intrinsic structure of embeddings and hidden states. Together, these results establish FNA as a principled mechanism connecting self-attention, stochastic dynamics, and geometry, providing an interpretable, biologically grounded foundation for powerful, neuroscience-inspired AI.
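
The abstract does not spell out FNA's kernel, so the following is only an illustrative sketch of the general idea, not the authors' implementation. It assumes tokens are points in a d-dimensional embedding space and contrasts a Gaussian kernel (a short-range baseline) with a heavy-tailed kernel shaped like the jump kernel of the fractional Laplacian, which decays as |x - y|^-(d + alpha). The function names, the regularizer `eps`, the `bandwidth` parameter, and the choice of `alpha` are all hypothetical.

```python
import math


def pairwise_distances(points):
    """Euclidean distance between every pair of token embeddings."""
    return [[math.dist(p, q) for q in points] for p in points]


def row_normalize(weights):
    """Scale each row to sum to 1, giving a row-stochastic attention matrix."""
    return [[w / sum(row) for w in row] for row in weights]


def gaussian_attention(points, bandwidth=1.0):
    """Baseline: weights decay like exp(-(d / bandwidth)^2), i.e. short-range."""
    dists = pairwise_distances(points)
    return row_normalize(
        [[math.exp(-((d / bandwidth) ** 2)) for d in row] for row in dists]
    )


def power_law_attention(points, alpha=1.2, eps=1.0):
    """Assumed heavy-tailed kernel (eps + d)^-(dim + alpha).

    eps is a hypothetical regularizer that keeps the self-weight finite.
    """
    dim = len(points[0])
    dists = pairwise_distances(points)
    return row_normalize(
        [[(eps + d) ** -(dim + alpha) for d in row] for row in dists]
    )


def mix(weights, values, steps):
    """Apply the attention matrix repeatedly to scalar token values."""
    for _ in range(steps):
        values = [sum(w * v for w, v in zip(row, values)) for row in weights]
    return values


def spread(values):
    """Gap between the largest and smallest value; 0 means fully mixed."""
    return max(values) - min(values)


if __name__ == "__main__":
    # Eight tokens on a line; only the first one carries a signal.
    tokens = [(float(i),) for i in range(8)]
    signal = [1.0] + [0.0] * 7
    for name, build in [("gaussian", gaussian_attention),
                        ("power-law", power_law_attention)]:
        attn = build(tokens)
        print(name, "weight 0 -> 7:", attn[0][-1],
              "spread after 5 steps:", spread(mix(attn, signal, 5)))
```

Running the script prints, for each kernel, how much weight the first token places on the most distant one and how far the token values remain from consensus after a few rounds of attention. Under these assumptions the heavy-tailed kernel keeps non-negligible long-range weights, which is the intuition behind the faster information mixing the abstract describes.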
