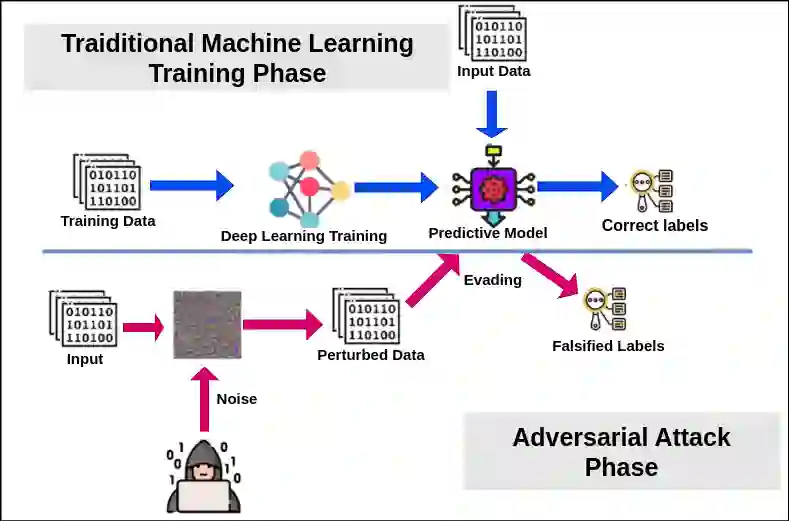

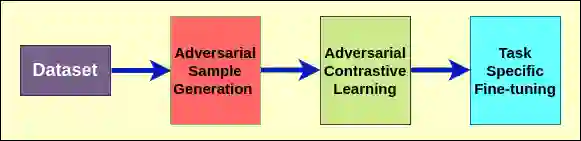

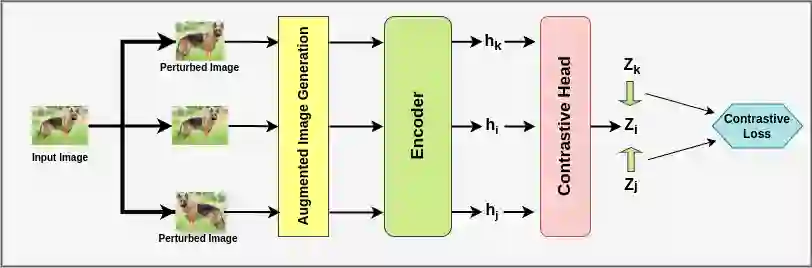

Deep neural networks (DNNs) have achieved remarkable success in computer vision tasks such as image classification, segmentation, and object detection. However, they are vulnerable to adversarial attacks, in which small perturbations to the input images cause incorrect predictions. Addressing this vulnerability is crucial for deploying robust deep-learning systems. This paper presents a novel approach that uses contrastive learning for adversarial defense, an area that, to our knowledge, has not previously been explored. Our method uses a contrastive loss function to enhance the robustness of classification models by training them on both clean and adversarially perturbed images. By optimizing the model's parameters jointly with the perturbations, our approach enables the network to learn representations that are less susceptible to adversarial attacks. Experimental results show significant improvements in the model's robustness against various types of adversarial perturbations. These results suggest that the contrastive loss helps the model extract more informative and resilient features.
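To make the idea of pairing clean and adversarially perturbed inputs under a contrastive loss more concrete, the following is a minimal NumPy sketch and not the paper's implementation. Everything in it is an assumption made for illustration: the toy `encode` function (a single linear layer with `tanh`), the InfoNCE-style `contrastive_loss`, and the FGSM-style sign step in `fgsm_perturb`, whose gradient is estimated by finite differences so that the example needs no autodiff library. The abstract does not specify the loss form, the attack, or the architecture, and the model-parameter update that would accompany the perturbation step is omitted here.

```python
import numpy as np


def encode(x, W):
    """Toy encoder (hypothetical): one linear layer followed by tanh."""
    return np.tanh(x @ W)


def l2_normalize(z, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return z / (np.linalg.norm(z, axis=1, keepdims=True) + eps)


def contrastive_loss(z_clean, z_adv, temperature=0.5):
    """InfoNCE-style loss: row i of z_clean and row i of z_adv are the
    positive pair; every other row of z_adv serves as a negative."""
    z_clean = l2_normalize(z_clean)
    z_adv = l2_normalize(z_adv)
    logits = z_clean @ z_adv.T / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_prob))


def numerical_grad(f, x, h=1e-5):
    """Central finite-difference gradient of a scalar function f at x."""
    x = x.copy()
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        f_plus = f(x)
        x[idx] = old - h
        f_minus = f(x)
        x[idx] = old
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad


def fgsm_perturb(x, W, epsilon=0.05):
    """One FGSM-style step: move each input by epsilon in the direction
    that increases the contrastive loss against its clean embedding."""
    z_clean = encode(x, W)

    def loss_fn(x_adv):
        return contrastive_loss(z_clean, encode(x_adv, W))

    grad = numerical_grad(loss_fn, x)
    return x + epsilon * np.sign(grad)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 6))   # four toy "images" with six features each
    W = rng.normal(size=(6, 3))   # toy encoder weights
    x_adv = fgsm_perturb(x, W)
    print("clean vs clean:", contrastive_loss(encode(x, W), encode(x, W)))
    print("clean vs adv:  ", contrastive_loss(encode(x, W), encode(x_adv, W)))
```

In a full training loop one would alternate a perturbation step like this with a gradient step on the encoder weights that lowers the same loss, typically combined with a classification loss. That part is left out because the abstract gives no details about it.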
