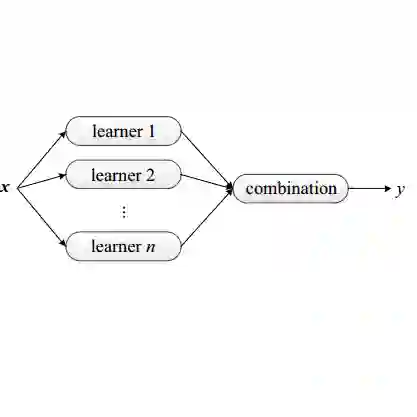

The remarkable performance of Large Language Models (LLMs) relies heavily on carefully crafted prompts. However, manual prompt engineering is laborious, which makes it a core bottleneck for the practical application of LLMs. This has led to the emergence of a new research area, Automatic Prompt Optimization (APO), which has developed rapidly in recent years. Existing APO methods, such as those based on evolutionary algorithms or trial-and-error approaches, achieve efficient and accurate prompt optimization to some extent. However, these studies rely on a single model or algorithm for the generation strategy and the optimization process, which limits their performance on complex tasks. To address this, we propose a novel framework called Ensemble Learning based Prompt Optimization (ELPO) to achieve more accurate and robust results. Motivated by the idea of ensemble learning, ELPO employs a voting mechanism and introduces shared generation strategies along with different search methods to find superior prompts. Moreover, ELPO introduces more efficient algorithms for the prompt generation and search process. Experimental results demonstrate that ELPO outperforms state-of-the-art prompt optimization methods across different tasks, e.g., improving the F1 score by 7.6 on the ArSarcasm dataset.
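To make the voting idea concrete, the following is a minimal sketch of majority voting over several candidate prompts. It is an illustration of generic ensemble voting, not ELPO's actual algorithm, which the abstract does not specify. The names `majority_vote`, `ensemble_predict`, `toy_predict`, and `PROMPTS` are hypothetical, and `toy_predict` is a keyword rule standing in for a real LLM call.

```python
from collections import Counter
from typing import Callable, Sequence


def majority_vote(labels: Sequence[str]) -> str:
    """Return the most frequent label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def ensemble_predict(
    prompts: Sequence[str],
    predict: Callable[[str, str], str],
    text: str,
) -> str:
    """Query every candidate prompt on the same input and vote on the result."""
    return majority_vote([predict(prompt, text) for prompt in prompts])


def toy_predict(prompt: str, text: str) -> str:
    # Hypothetical stand-in for an LLM call: each "prompt" here is just a
    # keyword, and the "model" flags the text if the keyword appears in it.
    return "sarcastic" if prompt in text.lower() else "not_sarcastic"


# Hypothetical candidate prompts (keywords, for the toy predictor above).
PROMPTS = ["yeah right", "great", "sure"]

if __name__ == "__main__":
    for sample in ["Oh great, yeah right, that will work.",
                   "The meeting is at noon."]:
        print(sample, "->", ensemble_predict(PROMPTS, toy_predict, sample))
```

In this sketch, a single weak prompt can be wrong on a given input while the vote across prompts remains correct, which is the general intuition behind combining candidates rather than selecting one.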
