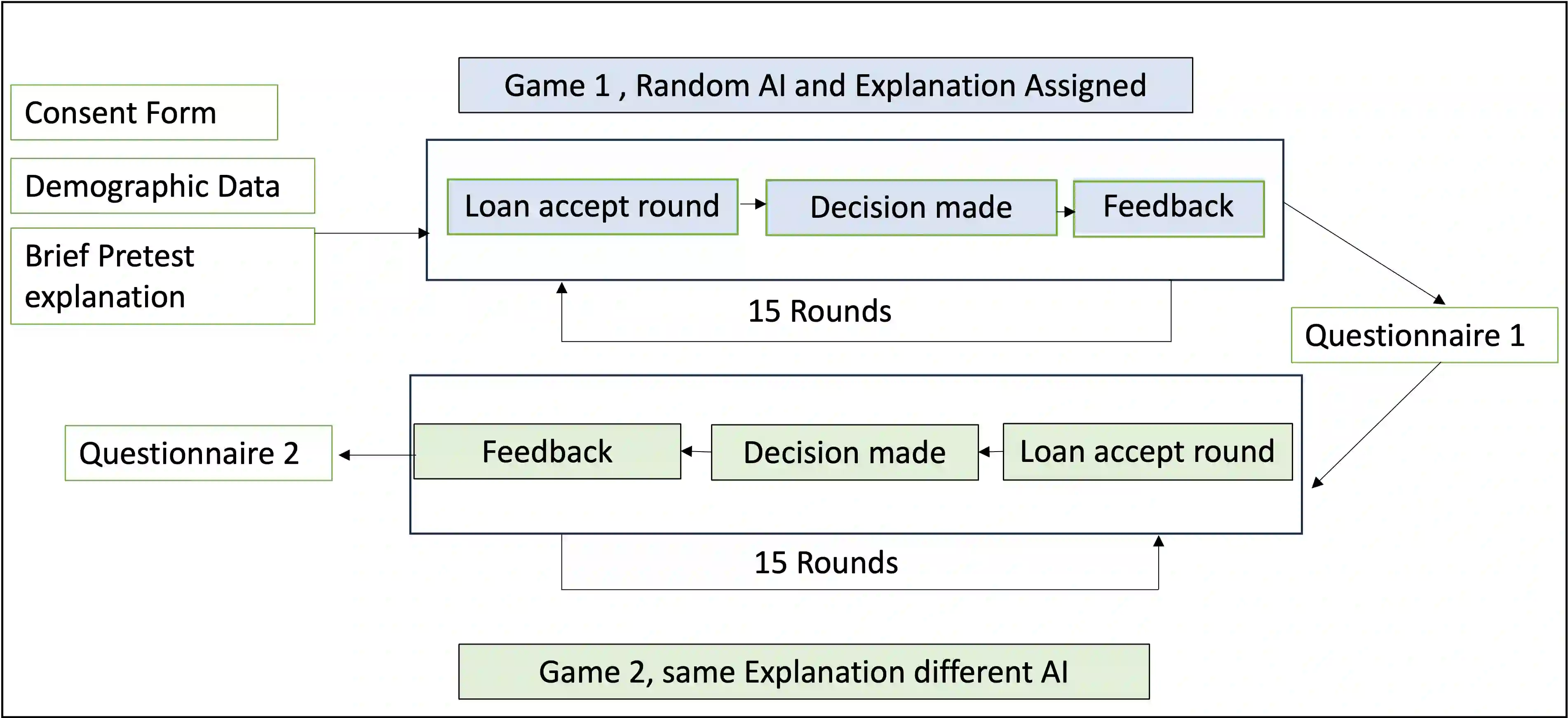

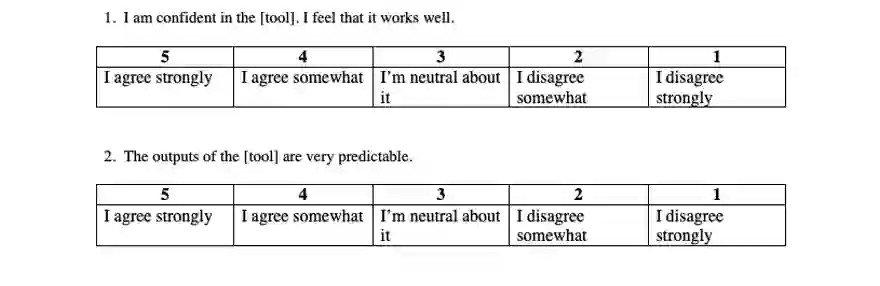

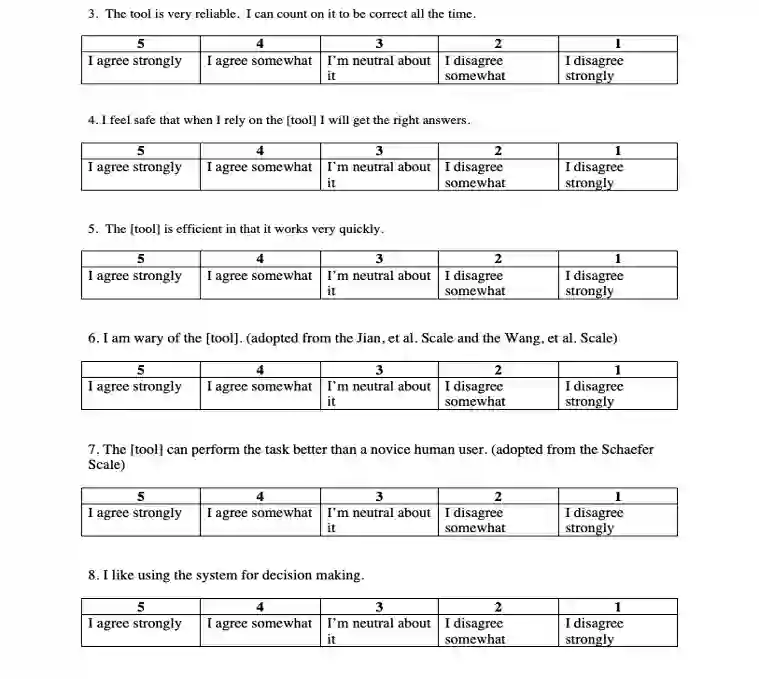

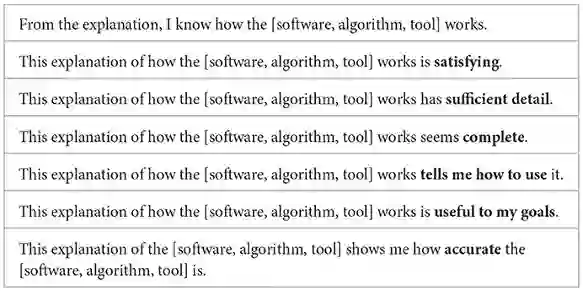

Large-scale AI models such as GPT-4 have accelerated the deployment of artificial intelligence in critical domains, including law, healthcare, and finance, raising urgent questions about trust and transparency. This study investigates the relationship between explainability and user trust in AI systems through a quantitative experimental design. Using an interactive, web-based loan approval simulation, we compare how different types of explanations, ranging from basic feature importance to interactive counterfactuals, influence perceived trust. The results suggest that interactivity enhances both user engagement and user confidence, and that the clarity and relevance of explanations are key determinants of trust. These findings contribute empirical evidence to the growing field of human-centered explainable AI, highlighting measurable effects of explainability design on user perception.
