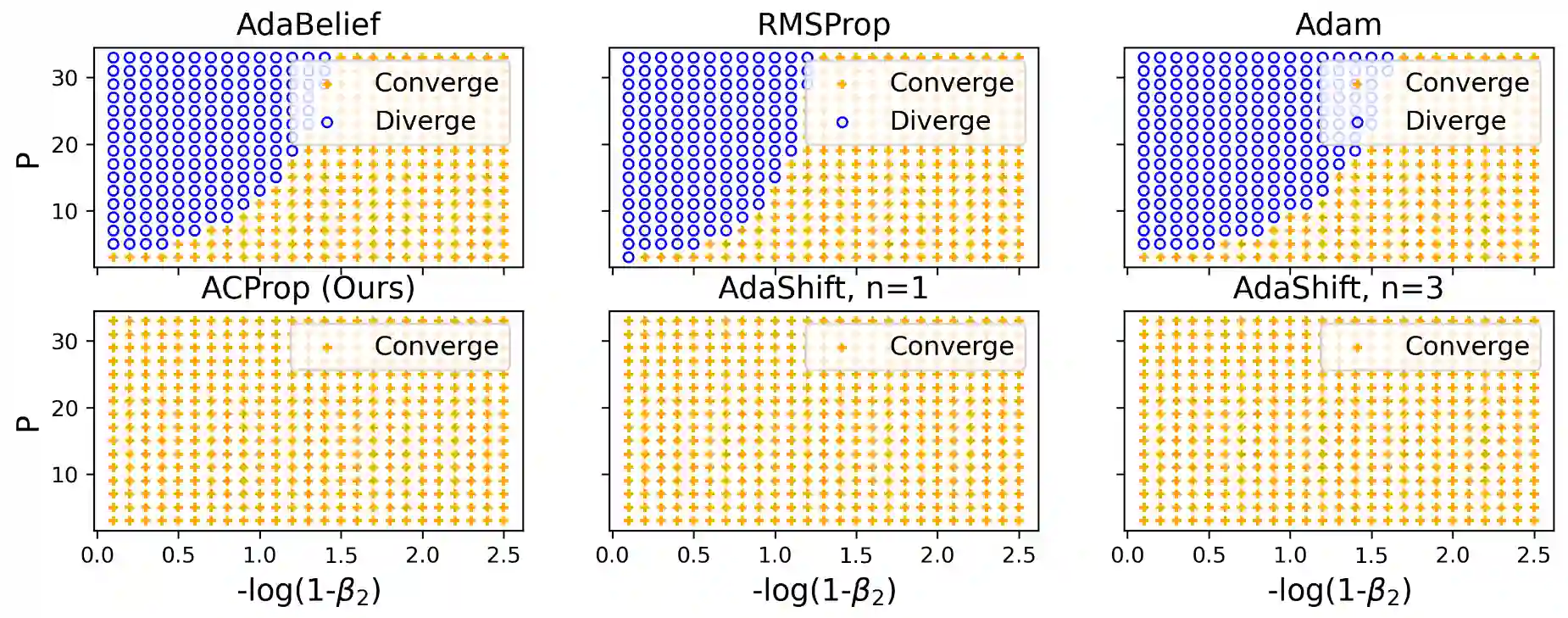

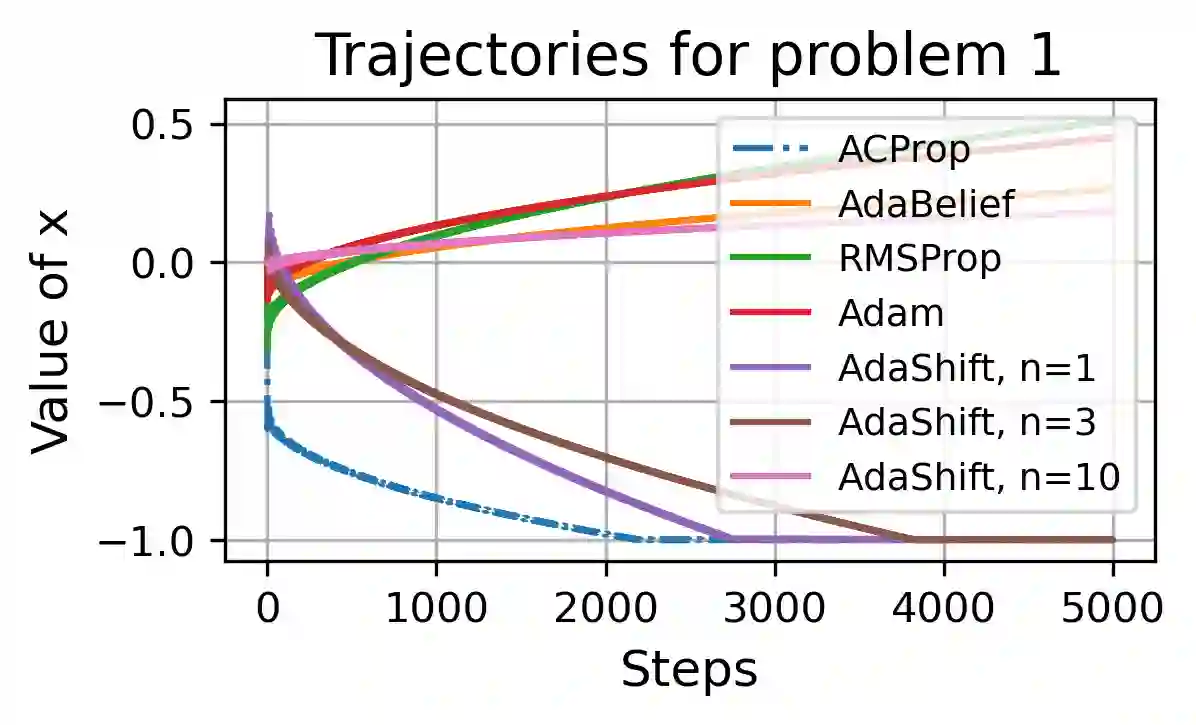

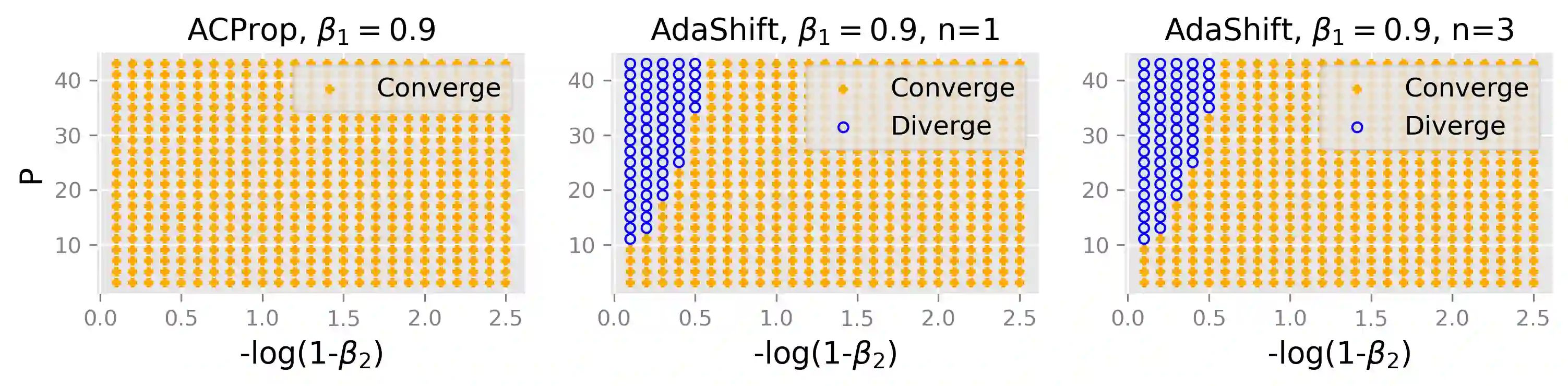

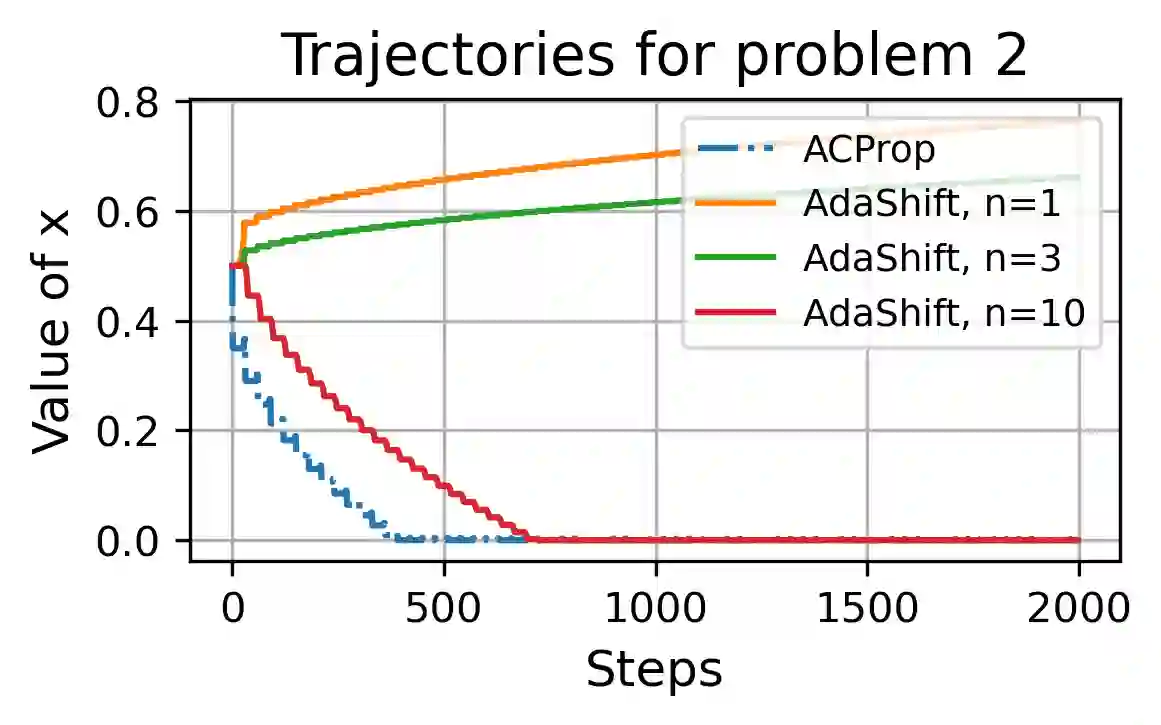

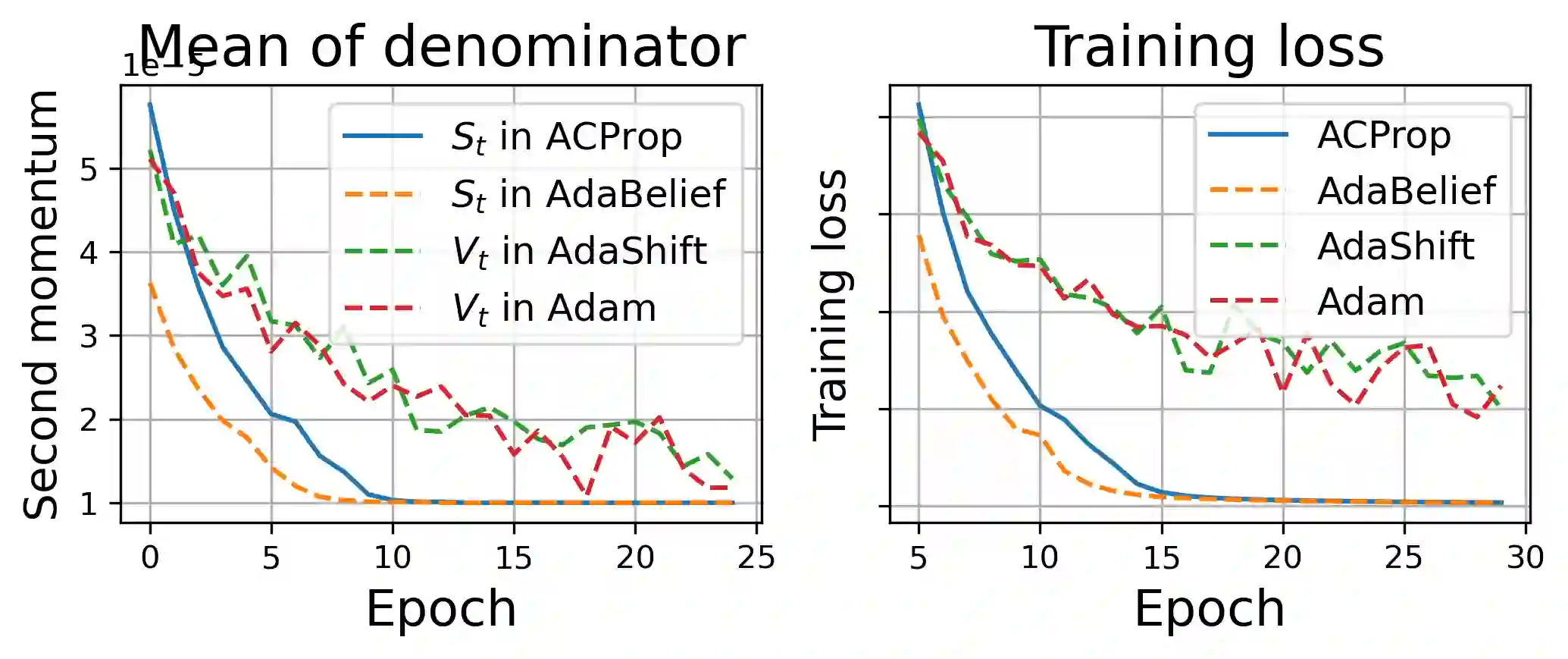

We propose ACProp (Asynchronous-centering-Prop), an adaptive optimizer that combines centering of the second momentum with an asynchronous update (i.e., for the $t$-th update, the denominator uses information up to step $t-1$, while the numerator uses the gradient at step $t$). ACProp has both strong theoretical properties and strong empirical performance. Using the example by Reddi et al. (2018), we show that asynchronous optimizers (e.g., AdaShift, ACProp) have a weaker convergence condition than synchronous optimizers (e.g., Adam, RMSProp, AdaBelief); among asynchronous optimizers, we show that centering the second momentum further weakens the convergence condition. We show that ACProp has a convergence rate of $O(\frac{1}{\sqrt{T}})$ in the stochastic non-convex case, which matches the oracle rate and improves on the $O(\frac{\log T}{\sqrt{T}})$ rate of RMSProp and Adam. We validate ACProp in extensive empirical studies: ACProp outperforms both SGD and other adaptive optimizers in image classification with CNNs, and outperforms well-tuned adaptive optimizers in training various GAN models, in reinforcement learning, and in training transformers. In summary, ACProp combines good theoretical properties (a weak convergence condition and an optimal convergence rate) with strong empirical performance (generalization comparable to SGD and training stability comparable to Adam).
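The asynchronous, centered update described above can be sketched for a single scalar parameter as follows. This is a minimal sketch based only on the description in this abstract, not the authors' reference implementation: the names `ACPropState` and `acprop_step`, the default hyperparameters, placing $\epsilon$ outside the square root, omitting bias correction, and skipping the parameter move on the very first step are all assumptions. It reads the update as $m_t = \beta_1 m_{t-1} + (1-\beta_1) g_t$, $s_t = \beta_2 s_{t-1} + (1-\beta_2)(g_t - m_t)^2$, and $x_t = x_{t-1} - \alpha\, g_t / (\sqrt{s_{t-1}} + \epsilon)$.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ACPropState:
    """Hypothetical per-parameter state for a scalar parameter."""
    m: float = 0.0  # EMA of gradients, m_t
    s: float = 0.0  # EMA of centered squared gradients, s_t
    t: int = 0      # number of gradients seen so far


def acprop_step(x, g, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One asynchronous, centered update of scalar x given gradient g = g_t.

    The denominator uses s_{t-1}, computed before g_t arrives, so the
    step is linear in g_t. Bias correction is omitted (assumption).
    """
    state.t += 1
    s_prev = state.s  # s_{t-1}: only information up to step t-1
    if state.t > 1:
        # Skip the very first move: s_0 = 0 carries no information yet
        # (a hypothetical choice for this sketch).
        x = x - lr * g / (math.sqrt(s_prev) + eps)
    # Fold g_t into the statistics; they first affect the update at step t+1.
    state.m = beta1 * state.m + (1 - beta1) * g
    state.s = beta2 * state.s + (1 - beta2) * (g - state.m) ** 2
    return x


if __name__ == "__main__":
    # Toy demo: minimize f(x) = x^2 / 2 from noisy gradients g = x + noise.
    rng = random.Random(0)
    x, state = 5.0, ACPropState()
    for _ in range(5000):
        x = acprop_step(x, x + rng.gauss(0.0, 1.0), state, lr=1e-2)
    print(f"x after 5000 steps: {x:.3f}")
```

Because the denominator is fixed before $g_t$ is seen, doubling $g_t$ exactly doubles the step. In a synchronous optimizer such as RMSProp or AdaBelief, $g_t$ also enters the denominator, so the step is not linear in $g_t$.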
