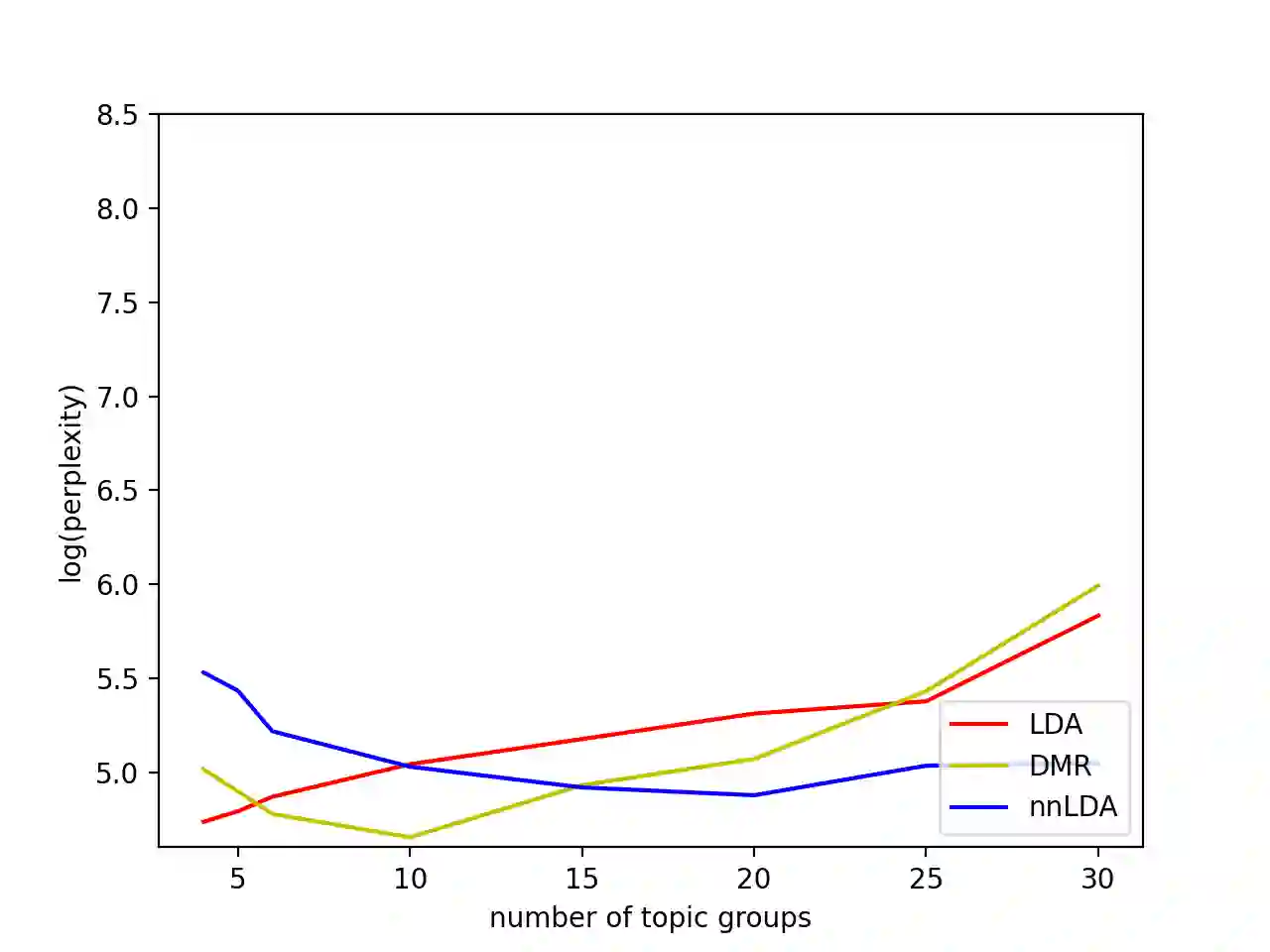

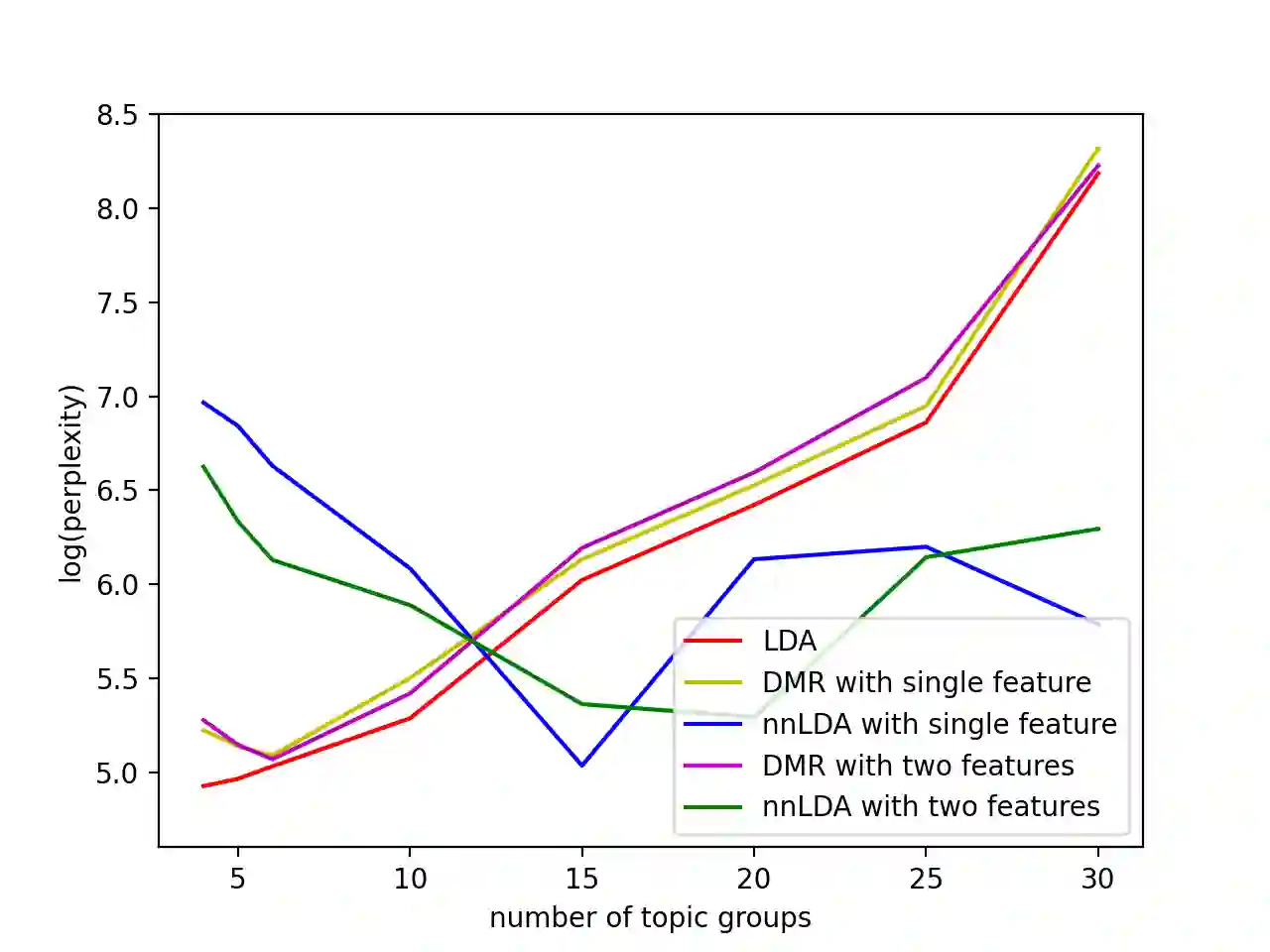

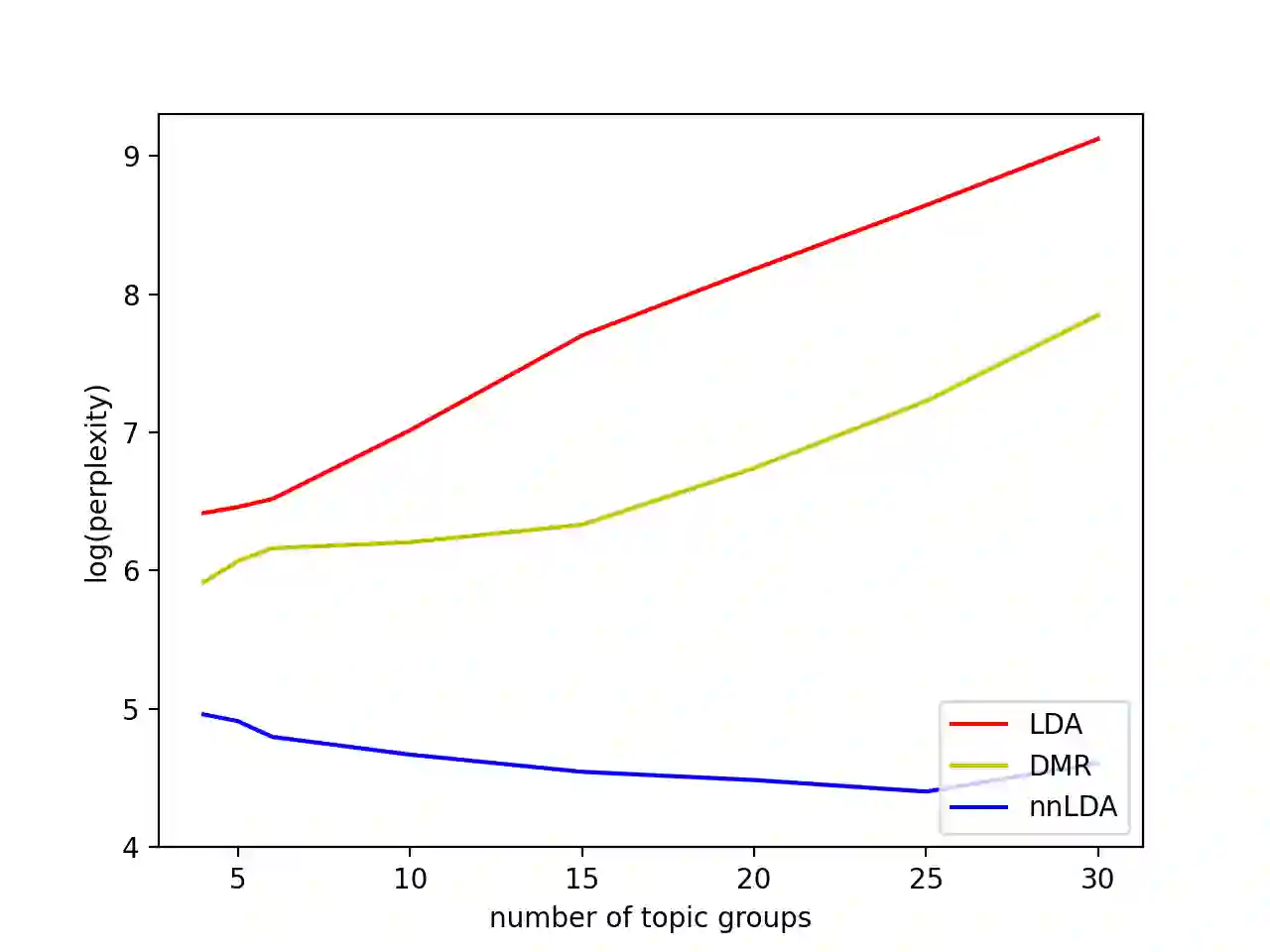

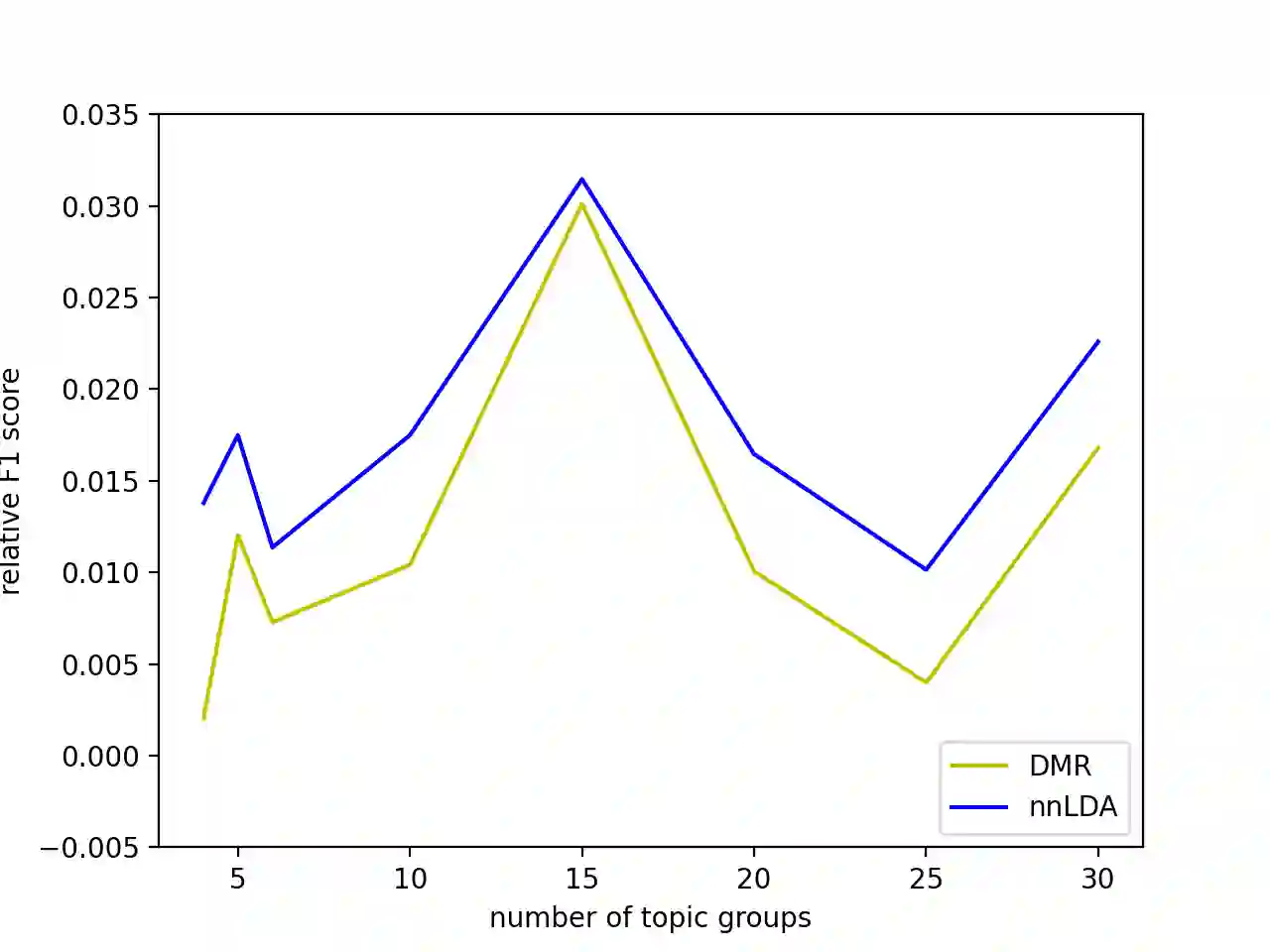

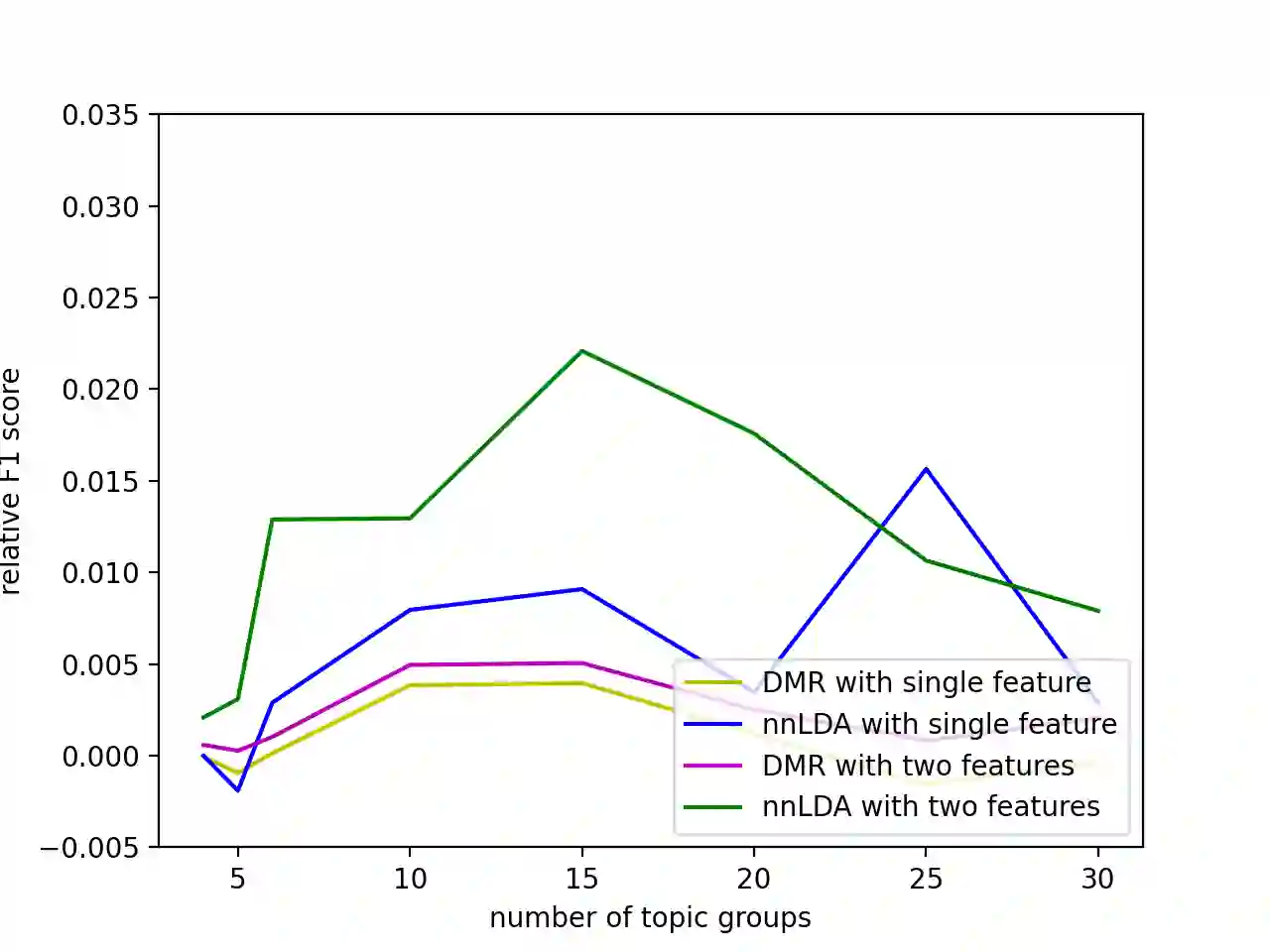

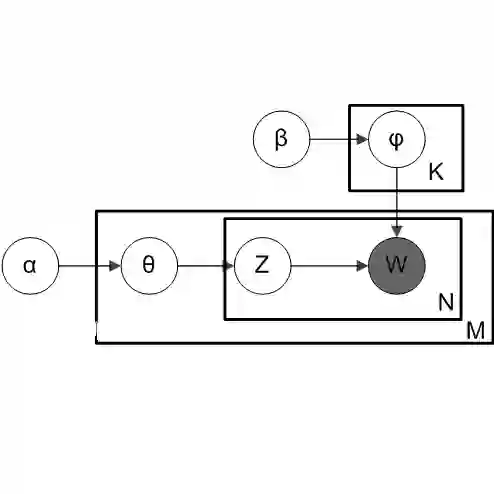

Traditional topic models such as Latent Dirichlet Allocation (LDA) have been widely used to uncover latent structure in text corpora, but they often struggle to integrate auxiliary information such as metadata, user attributes, or document labels. This restricts their expressiveness, personalization, and interpretability. To address these limitations, we propose nnLDA, a neural-augmented probabilistic topic model that incorporates side information through a neural prior mechanism. nnLDA models each document as a mixture of latent topics, where the prior over topic proportions is generated by a neural network conditioned on auxiliary features. This design allows the model to capture complex nonlinear interactions between side information and topic distributions that a static Dirichlet prior cannot represent. We develop a stochastic variational Expectation-Maximization algorithm to jointly optimize the neural and probabilistic components. Across multiple benchmark datasets, nnLDA consistently outperforms LDA and Dirichlet-Multinomial Regression in topic coherence, perplexity, and downstream classification. These results highlight the benefits of combining neural representation learning with probabilistic topic modeling in settings where side information is available.
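The neural prior described above can be illustrated with a small generative sketch. The abstract does not specify the network architecture or the exact form of the prior, so everything below is an assumption made for illustration: a one-hidden-layer network (`neural_prior`) maps a side-information vector to the concentration parameters of a Dirichlet prior, and words are then drawn as in standard LDA. The names `neural_prior`, `generate_document`, and all dimensions are hypothetical, and the variational EM training procedure is not shown.

```python
import numpy as np


def softplus(x):
    # Numerically stable softplus; used to keep concentrations positive.
    return np.logaddexp(0.0, x)


def neural_prior(side, W1, b1, W2, b2):
    """Map side-information features to Dirichlet concentration parameters.

    Assumption: a single tanh hidden layer followed by a softplus output.
    The paper's actual architecture may differ.
    """
    hidden = np.tanh(side @ W1 + b1)
    return softplus(hidden @ W2 + b2) + 1e-3  # small offset avoids alpha == 0


def generate_document(side, params, topic_word, n_words, rng):
    """Sample one document whose topic prior depends on its side information."""
    alpha = neural_prior(side, *params)      # document-specific prior
    theta = rng.dirichlet(alpha)             # topic proportions
    topics = rng.choice(len(theta), size=n_words, p=theta)
    vocab_size = topic_word.shape[1]
    words = np.array([rng.choice(vocab_size, p=topic_word[k]) for k in topics])
    return alpha, theta, words


# Toy dimensions, chosen only for illustration.
rng = np.random.default_rng(0)
n_features, n_hidden, n_topics, vocab_size = 4, 8, 3, 20

# Randomly initialised (untrained) network weights.
params = (
    rng.normal(size=(n_features, n_hidden)), np.zeros(n_hidden),
    rng.normal(size=(n_hidden, n_topics)), np.zeros(n_topics),
)
# Each row is one topic's distribution over the vocabulary.
topic_word = rng.dirichlet(np.ones(vocab_size), size=n_topics)

side = rng.normal(size=n_features)  # side information for one document
alpha, theta, words = generate_document(side, params, topic_word, 50, rng)
```

In this sketch, two documents with different side-information vectors receive different values of `alpha`, which is the contrast with a single static Dirichlet prior shared by all documents.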
