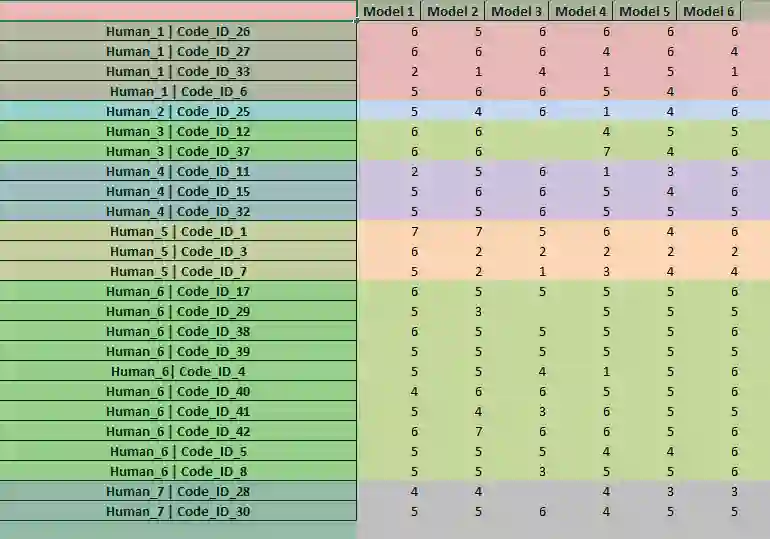

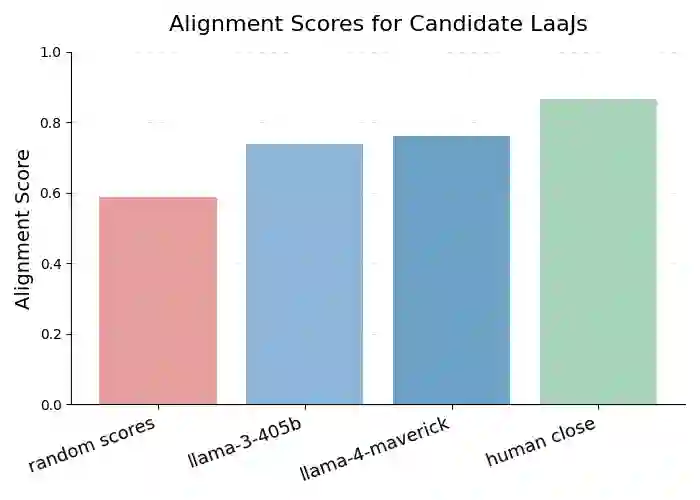

Application modernization in legacy languages such as COBOL, PL/I, and REXX faces an acute shortage of resources, both in expert availability and in high-quality human evaluation data. While Large Language Models as a Judge (LaaJ) offer a scalable alternative to expert review, their reliability must be validated before they are trusted in high-stakes workflows. Without principled validation, organizations risk a circular evaluation loop in which unverified LaaJs assess model outputs, potentially reinforcing unreliable judgments and compromising downstream deployment decisions. Although various automated approaches to validating LaaJs have been proposed, alignment with human judgment remains a widely used and conceptually grounded validation strategy. In many real-world domains, however, human-labeled evaluation data are severely limited, which makes it difficult to assess how well a LaaJ aligns with human judgment. We introduce SparseAlign, a formal framework for assessing LaaJ alignment with sparse human-labeled data. SparseAlign combines a novel pairwise-confidence concept with a score-sensitive alignment metric; together they capture both ranking consistency and score proximity, enabling reliable evaluator selection even when too few annotated examples are available for traditional statistical methods to be effective. SparseAlign was applied internally to select LaaJs for COBOL code explanation, and the top-aligned evaluators were integrated into assessment workflows that guide model release decisions. We present a case study of four LaaJs to demonstrate SparseAlign's utility in real-world evaluation scenarios.
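To make the two ingredients named above more concrete, here is a toy sketch of how "ranking consistency" and "score proximity" can be measured between a judge and a small set of human scores. This is **not** SparseAlign's definition: the abstract does not specify its pairwise-confidence concept or its metric. The functions `pairwise_order_agreement`, `score_proximity`, and `toy_alignment`, the 1 to 5 score scale, and the equal-weight blend `alpha=0.5` are all hypothetical choices made for illustration. One general reason a pairwise view is attractive with sparse labels is that `n` labeled items yield `n(n-1)/2` comparable pairs.

```python
from itertools import combinations


def _sign(x):
    # Returns -1, 0, or +1 depending on the sign of x.
    return (x > 0) - (x < 0)


def pairwise_order_agreement(human, judge):
    """Fraction of item pairs the judge orders the same way as the human.

    Hypothetical illustration: a pair agrees when both raters give the
    score difference the same sign (higher, lower, or tied).
    """
    pairs = list(combinations(range(len(human)), 2))
    if not pairs:
        raise ValueError("need at least two items")
    agree = sum(
        _sign(human[a] - human[b]) == _sign(judge[a] - judge[b])
        for a, b in pairs
    )
    return agree / len(pairs)


def score_proximity(human, judge, low, high):
    """1 minus the mean absolute score gap, normalized by the scale width."""
    width = high - low
    gaps = [abs(h - j) / width for h, j in zip(human, judge)]
    return 1.0 - sum(gaps) / len(gaps)


def toy_alignment(human, judge, low=1, high=5, alpha=0.5):
    """Hypothetical blend of ranking consistency and score proximity.

    Not the SparseAlign metric; alpha weights the ranking term.
    """
    if len(human) != len(judge):
        raise ValueError("score lists must have equal length")
    rank_term = pairwise_order_agreement(human, judge)
    prox_term = score_proximity(human, judge, low, high)
    return alpha * rank_term + (1 - alpha) * prox_term


if __name__ == "__main__":
    human = [4, 2, 5, 3]    # hypothetical expert scores on four explanations
    judge_a = [4, 3, 5, 3]  # hypothetical judge that tracks the expert closely
    judge_b = [2, 4, 3, 5]  # hypothetical judge that mostly inverts the order
    for name, judge in [("judge_a", judge_a), ("judge_b", judge_b)]:
        print(name, round(toy_alignment(human, judge), 3))
```

With these made-up scores, `judge_a` agrees with the human on 5 of 6 pairs and `judge_b` on only 2 of 6, so `judge_a` would be preferred (about 0.885 versus 0.417). Keeping the two terms separate makes it visible whether a judge fails by misordering items or by drifting in absolute score.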

翻译:在COBOL、PL/I和REXX等遗留语言的应用程序现代化过程中,面临着资源严重短缺的问题,既缺乏专家资源,也缺少高质量的人工评估数据。虽然作为裁判的大型语言模型(LaaJ)为专家评审提供了可扩展的替代方案,但在高风险工作流程中信任它们之前,必须验证其可靠性。若无原则性验证,组织可能陷入循环评估的困境:未经验证的LaaJ被用于评估模型输出,可能强化不可靠的判断,并损害下游部署决策。尽管已提出多种自动化验证LaaJ的方法,但与人类判断保持一致仍是一种广泛使用且概念上可靠的验证策略。在许多现实领域,人工标注的评估数据极为有限,难以评估LaaJ与人类判断的一致性。我们提出了SparseAlign,这是一个用于在稀疏人工标注数据下评估LaaJ一致性的形式化框架。SparseAlign结合了新颖的成对置信度概念与分数敏感的一致性度量,共同捕捉排序一致性和分数接近度,即使在标注样本有限导致传统统计方法失效时,也能实现可靠的评估器选择。SparseAlign已在内部应用于选择COBOL代码解释的LaaJ。一致性最高的评估器被集成到评估工作流程中,指导模型发布决策。我们通过四个LaaJ的案例研究,展示了SparseAlign在实际评估场景中的实用性。