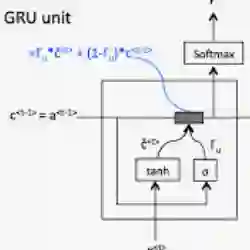

Audio-visual navigation is an important research area in which intelligent agents use egocentric visual and auditory observations to locate sound-emitting targets. Conventional navigation methods typically adopt a staged modular design: features are first fused, a Gated Recurrent Unit (GRU) module then performs sequence modeling, and decisions are finally made through reinforcement learning. Although this modular approach has proven effective, separating the feature fusion and GRU sequence modeling stages can cause redundant information processing and inconsistent information transfer between modules. This paper presents IRCAM-AVN (Iterative Residual Cross-Attention Mechanism for Audiovisual Navigation), an end-to-end framework that integrates multimodal information fusion and sequence modeling within a single IRCAM module, replacing the traditional separate fusion and GRU components. The mechanism employs a multi-level residual design that concatenates the initial multimodal sequences with the processed information sequences. This design progressively refines feature extraction while reducing model bias and improving the model's stability and generalization. Empirical results show that agents equipped with the iterative residual cross-attention mechanism achieve superior navigation performance.
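The following minimal sketch in plain Python illustrates the residual-concatenation idea. It makes several assumptions. Attention is single-head scaled dot-product with no learned query/key/value projections. Audio and visual features are already encoded as token sequences of the same width. The number of iterations is fixed. The function names (`cross_attention`, `ircam`) and the wiring, in which each modality attends to the other modality's initial tokens concatenated with its processed tokens, are hypothetical illustrations of the description above and not the paper's implementation. If the token sequences also carried past observations, a block like this could plausibly take over the temporal role of the GRU, but that is also an assumption.

```python
import math
from typing import List

# A token sequence: one row per token, each row a d-dimensional feature vector.
Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, context: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with no learned projections
    (a simplifying assumption): each query row attends over all context rows."""
    d = len(queries[0])
    scale = 1.0 / math.sqrt(d)
    out: Matrix = []
    for q in queries:
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in context]
        weights = softmax(scores)
        # Weighted sum of context rows (keys double as values in this sketch).
        out.append([sum(w * row[j] for w, row in zip(weights, context)) for j in range(d)])
    return out


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally shaped matrices (residual connection)."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def ircam(audio: Matrix, visual: Matrix, num_iters: int = 2) -> Matrix:
    """Hypothetical iterative residual cross-attention block.

    At every iteration each modality attends to the other modality's context,
    built by concatenating that modality's initial tokens with its current,
    processed tokens along the sequence axis (the multi-level residual). The
    attended result is then added back to the current tokens.
    """
    audio0, visual0 = audio, visual  # initial sequences, reused at every iteration
    for _ in range(num_iters):
        visual_ctx = visual0 + visual  # list concatenation = sequence-axis concat
        audio_ctx = audio0 + audio
        new_audio = add(audio, cross_attention(audio, visual_ctx))
        new_visual = add(visual, cross_attention(visual, audio_ctx))
        audio, visual = new_audio, new_visual
    # Fused multimodal sequence that would be handed on to the RL policy (not shown).
    return audio + visual


if __name__ == "__main__":
    audio = [[0.1, 0.2, 0.0, 0.5], [0.3, 0.1, 0.4, 0.0]]
    visual = [[0.0, 1.0, 0.2, 0.1], [0.5, 0.5, 0.5, 0.5], [0.9, 0.0, 0.1, 0.3]]
    fused = ircam(audio, visual, num_iters=2)
    print(len(fused), len(fused[0]))  # prints "5 4": 2 audio + 3 visual tokens, width 4
```

The sketch keeps `audio0` and `visual0` in every attention context, so later iterations can still attend to the unprocessed features. This is one plausible way a residual design could keep refined features anchored to the raw observations.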

翻译:视听导航是智能体利用第一视角视觉与听觉感知定位音频目标的重要研究领域。传统导航方法通常采用分阶段模块化设计,即先进行特征融合,再利用门控循环单元(GRU)模块进行序列建模,最后通过强化学习进行决策。尽管这种模块化方法已展现有效性,但在特征融合与GRU序列建模阶段,各模块间可能存在冗余信息处理与信息传递不一致的问题。本文提出IRCAM-AVN(面向视听导航的迭代残差交叉注意力机制),这是一种端到端框架,将多模态信息融合与序列建模统一集成于IRCAM模块中,从而替代了传统的分离式融合与GRU组件。该创新机制采用多级残差设计,将初始多模态序列与处理后信息序列进行拼接。这种方法的转变逐步优化了特征提取过程,同时降低了模型偏差,增强了模型的稳定性与泛化能力。实验结果表明,采用迭代残差交叉注意力机制的智能体展现出更优越的导航性能。