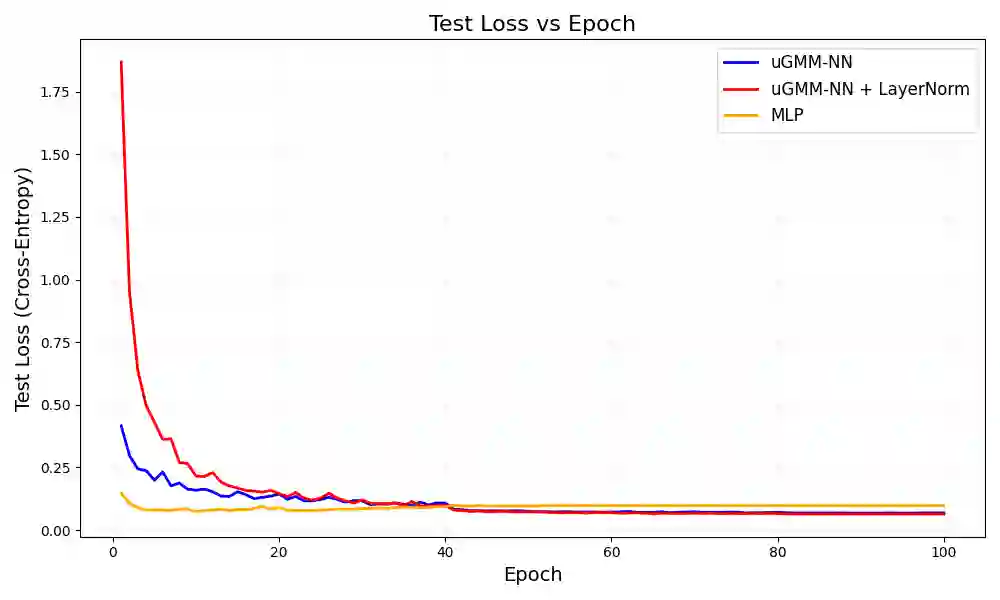

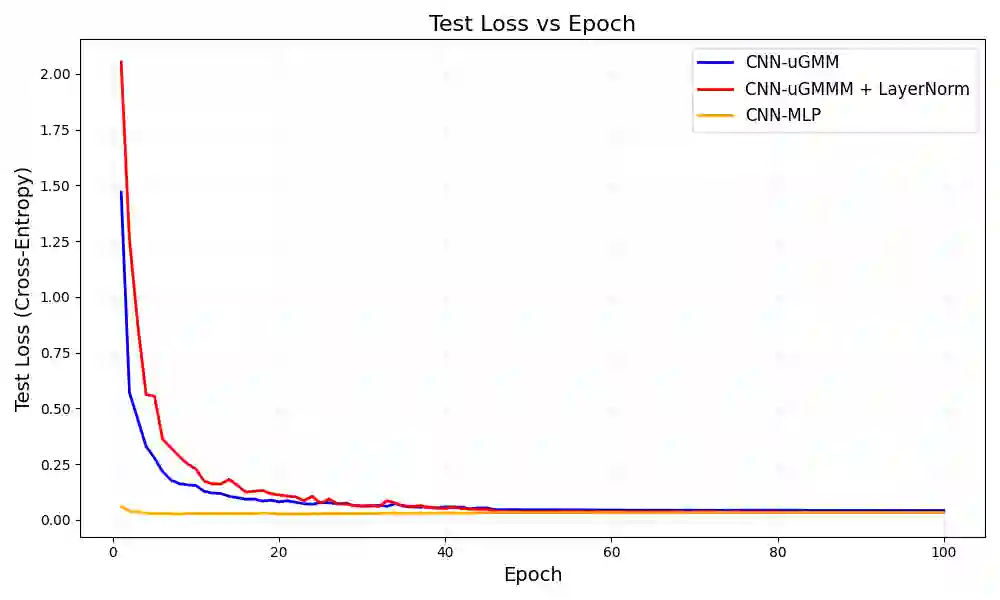

This paper introduces the Univariate Gaussian Mixture Model Neural Network (uGMM-NN), a novel neural architecture that embeds probabilistic reasoning directly into the computational units of deep networks. Traditional neurons apply a weighted sum followed by a fixed non-linearity; each uGMM-NN node instead parameterizes its activation as a univariate Gaussian mixture with learnable means, variances, and mixing coefficients. This design enables richer representations by capturing multimodality and uncertainty at the level of individual neurons, while retaining the scalability of standard feed-forward networks. We demonstrate that uGMM-NN can achieve discriminative performance competitive with conventional multilayer perceptrons, while additionally offering a probabilistic interpretation of its activations. The proposed framework provides a foundation for integrating uncertainty-aware components into modern neural architectures, opening new directions for both discriminative and generative modeling.
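To make the idea of a mixture-valued neuron concrete, the following is a minimal sketch of what a single node of this kind might compute. It is an illustration under stated assumptions, not the architecture's actual definition: the abstract does not specify how a node's inputs enter the mixture, so the sketch assumes the node evaluates the log-density of a scalar `z` under its own mixture. The function names (`log_softmax`, `ugmm_log_density`) and the choice to store log-variances and unnormalized mixing logits are hypothetical conveniences that keep variances positive and mixing coefficients on the simplex.

```python
import math


def log_softmax(logits):
    """Normalize unconstrained logits into log mixing coefficients."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - lse for l in logits]


def ugmm_log_density(z, means, log_vars, mix_logits):
    """Log-density of scalar z under a univariate Gaussian mixture.

    Assumption (not from the source): the node's output is this log-density.
    means, log_vars and mix_logits stand in for the learnable parameters.
    """
    log_pi = log_softmax(mix_logits)
    terms = []
    for mu, lv, lp in zip(means, log_vars, log_pi):
        # log N(z | mu, exp(lv)), written out term by term
        log_normal = -0.5 * (math.log(2 * math.pi) + lv + (z - mu) ** 2 / math.exp(lv))
        terms.append(lp + log_normal)
    # log-sum-exp over components for numerical stability
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


# Usage: a bimodal node with components centred at -2 and 3.
node = dict(means=[-2.0, 3.0], log_vars=[0.0, 0.7], mix_logits=[0.2, -0.4])
for z in (-2.0, 0.5, 3.0):
    print(z, ugmm_log_density(z, **node))
```

Working in log space with a log-sum-exp keeps the computation stable when a component assigns very small density to `z`, which is the usual reason for this parameterization in mixture models.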
