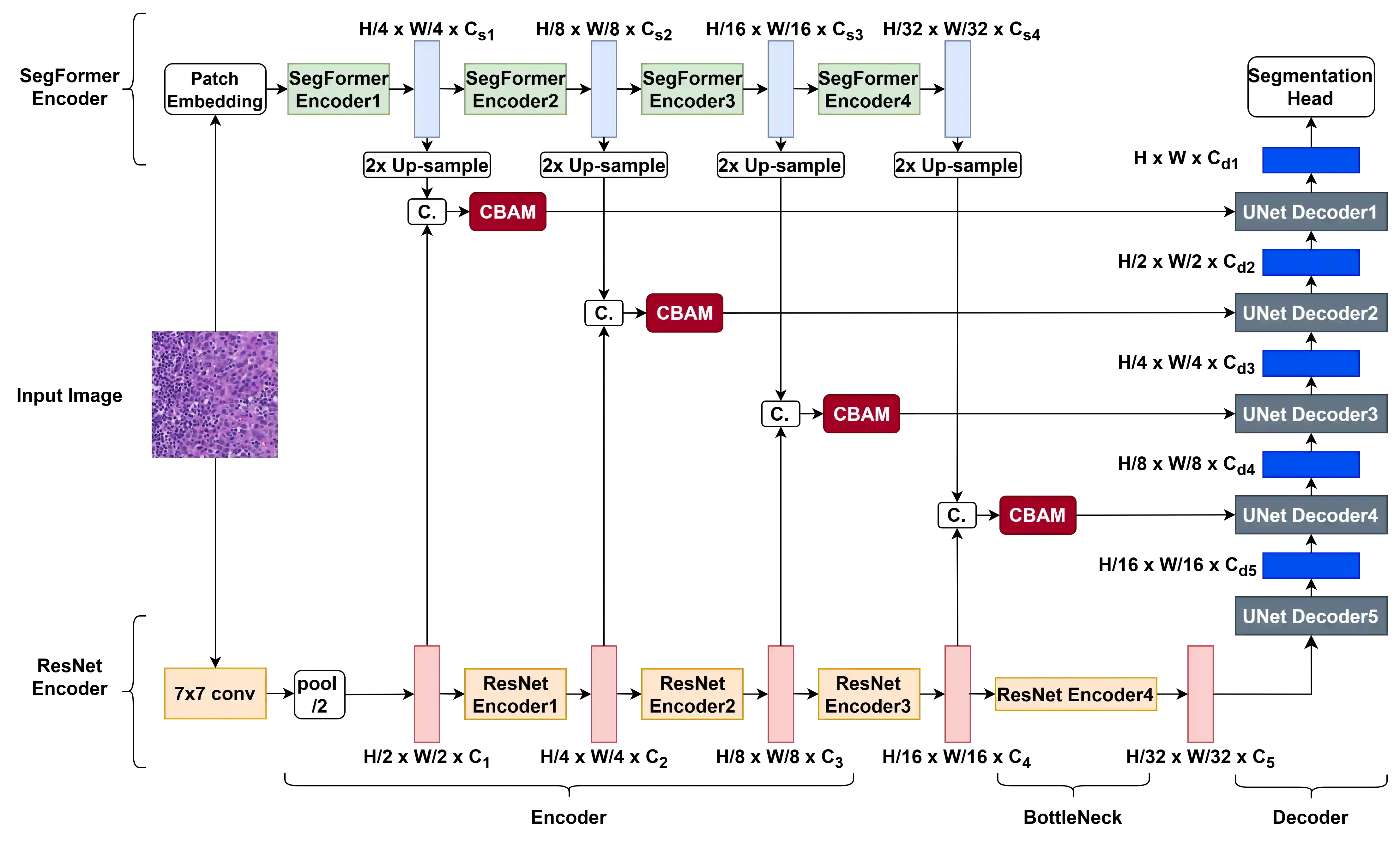

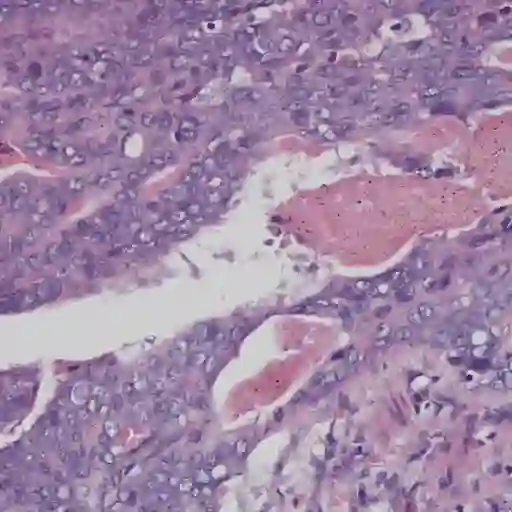

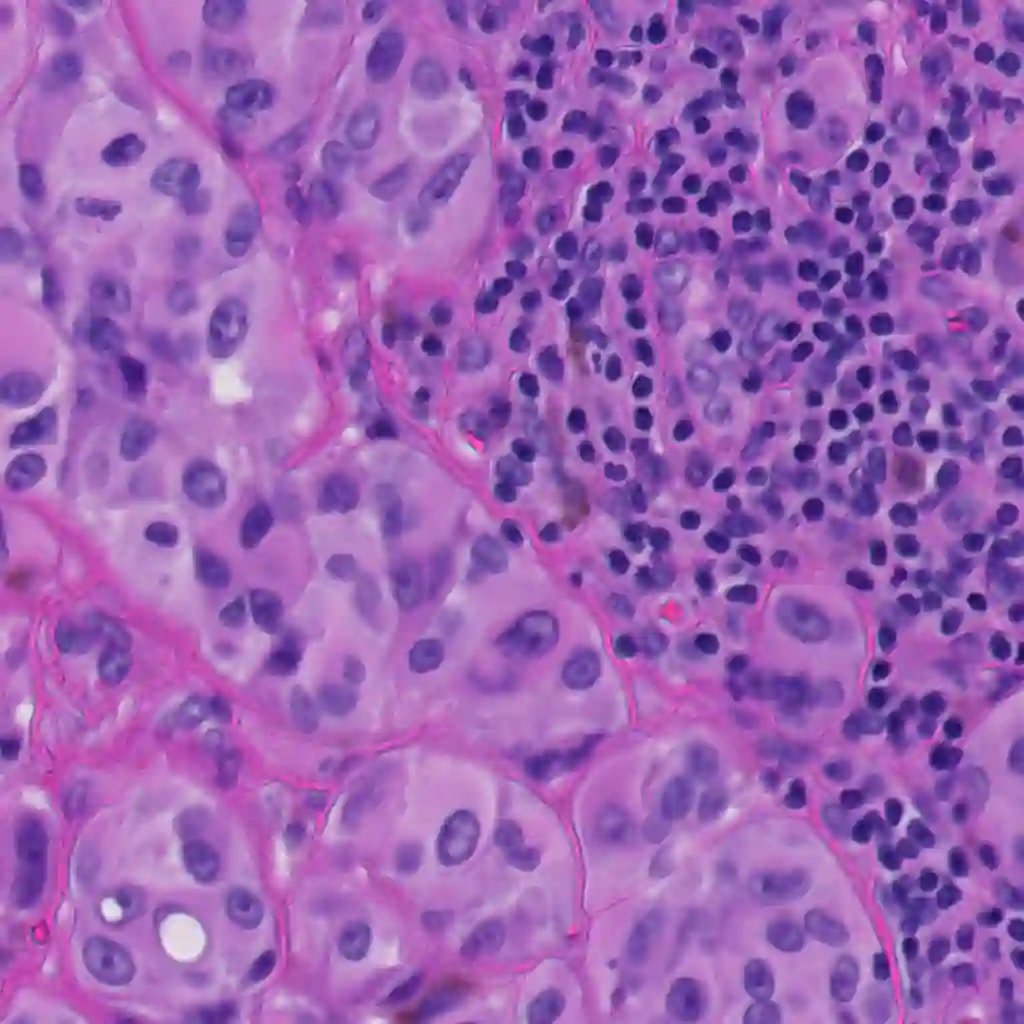

Automated histopathological image analysis plays a vital role in the computer-aided diagnosis of various diseases. Among existing algorithms, deep learning-based approaches have demonstrated excellent performance in multiple tasks, including semantic tissue segmentation in histological images. In this study, we propose a novel approach that uses attention-driven feature fusion to combine convolutional neural networks (CNNs) and vision transformers (ViTs) within a unified dual-encoder model, with the aim of improving semantic segmentation performance. Evaluation on two publicly available datasets showed that our model achieved μIoU/μDice scores of 76.79%/86.87% on the GCPS dataset and 64.93%/76.60% on the PUMA dataset, outperforming state-of-the-art and baseline benchmarks. The implementation of our method is publicly available in a GitHub repository: https://github.com/NimaTorbati/ACS-SegNet
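
For readers unfamiliar with the two reported metrics, the following is a minimal sketch of how IoU and Dice can be computed for a multi-class segmentation, assuming flat sequences of integer class labels. The abstract does not define the averaging behind the μ prefix, so the unweighted mean over classes used here is an assumption, and the function names `iou_and_dice` and `mean` are hypothetical rather than taken from the ACS-SegNet repository.

```python
def iou_and_dice(pred, target, num_classes):
    """Return (per-class IoU list, per-class Dice list) for flat label sequences."""
    ious, dices = [], []
    for c in range(num_classes):
        # Count true positives, false positives, and false negatives for class c.
        tp = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        fp = sum(1 for p, t in zip(pred, target) if p == c and t != c)
        fn = sum(1 for p, t in zip(pred, target) if p != c and t == c)
        if tp + fp + fn == 0:
            continue  # class absent from both prediction and target; skip it
        ious.append(tp / (tp + fp + fn))           # IoU  = TP / (TP + FP + FN)
        dices.append(2 * tp / (2 * tp + fp + fn))  # Dice = 2TP / (2TP + FP + FN)
    return ious, dices


def mean(values):
    """Unweighted mean over classes (an assumed averaging convention)."""
    return sum(values) / len(values)


# Toy example with three classes and six pixels.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
ious, dices = iou_and_dice(pred, target, num_classes=3)
print(f"mean IoU = {mean(ious):.4f}, mean Dice = {mean(dices):.4f}")
```

For a single class the two metrics are tied by Dice = 2·IoU / (1 + IoU), so Dice is never lower than IoU. That identity need not hold once the scores are averaged over classes or images.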
