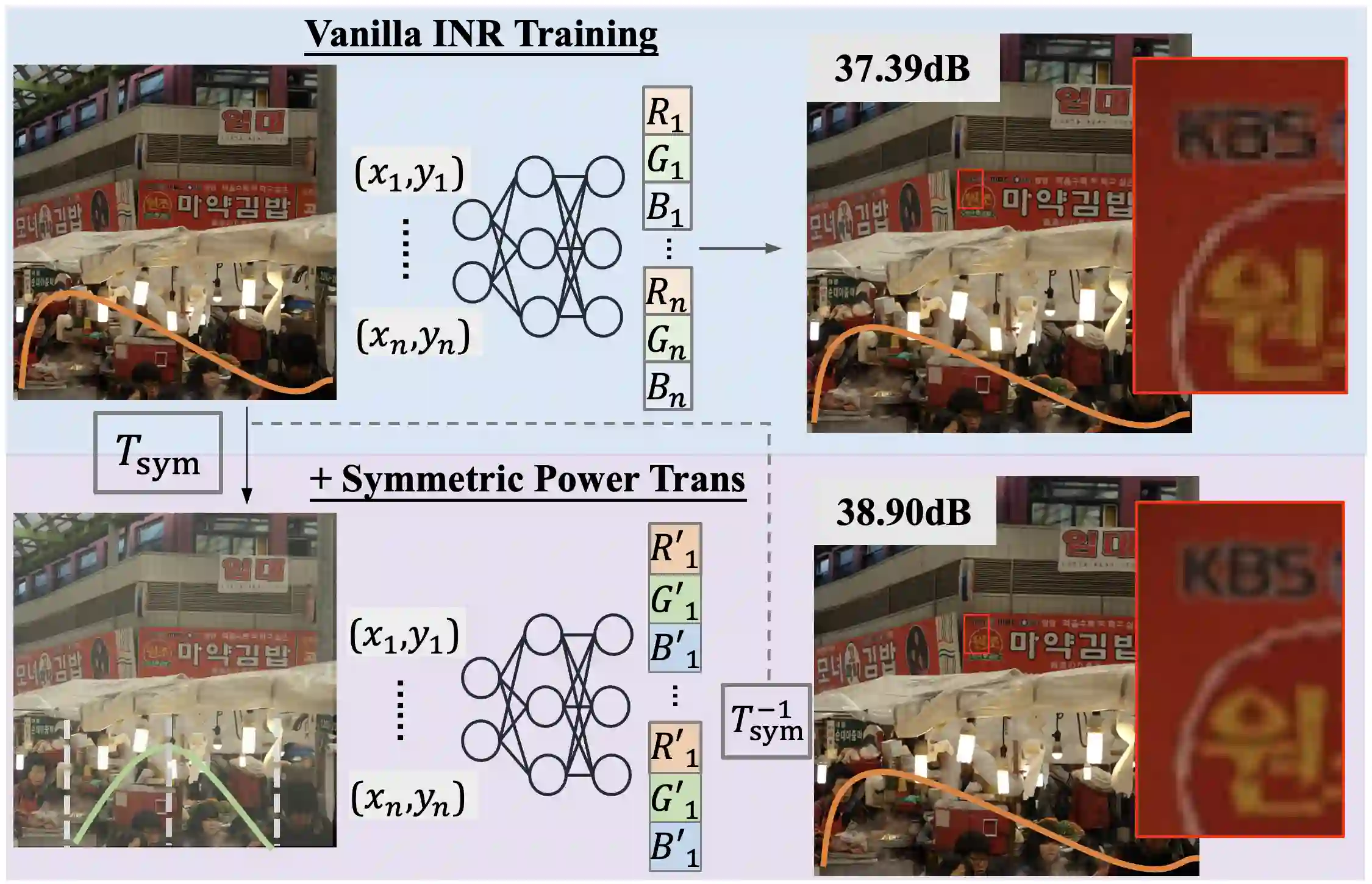

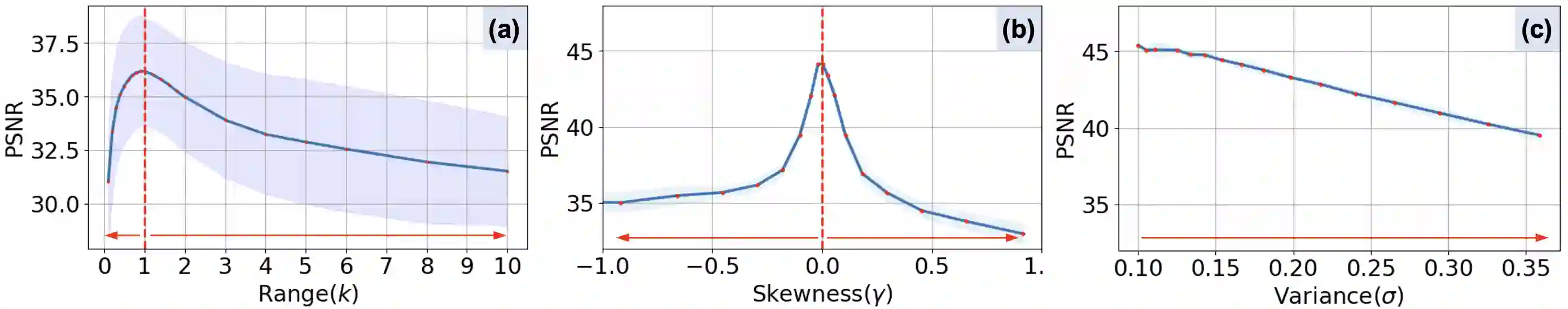

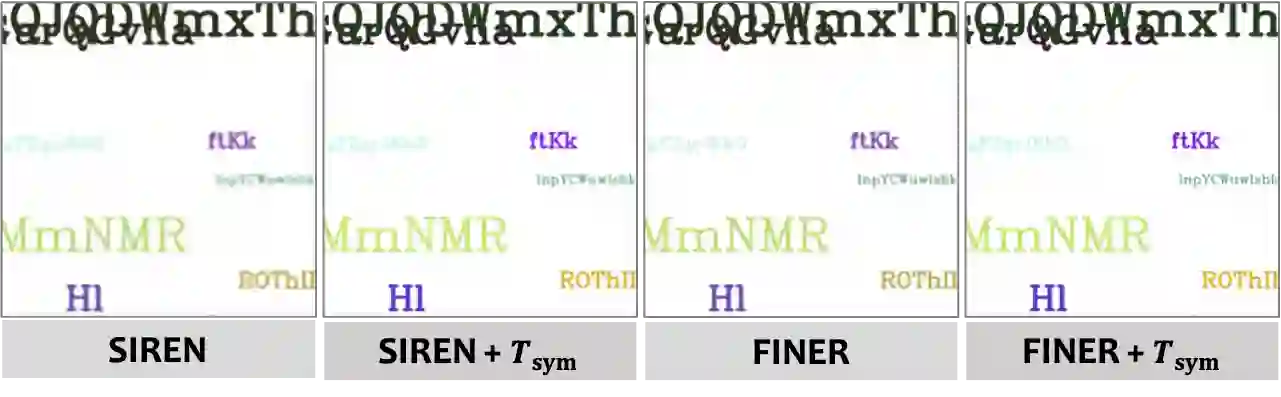

We propose a symmetric power transformation that enhances the capacity of Implicit Neural Representation (INR) from the perspective of data transformation. Unlike prior work that relies on random permutation or index rearrangement, our method is a reversible operation that requires no additional storage. Specifically, we first investigate which characteristics of data benefit INR training and propose the Range-Defined Symmetric Hypothesis, which posits that a specific range and symmetry can improve the expressive ability of INR. Based on this hypothesis, we propose a nonlinear symmetric power transformation that achieves both the range-defined and symmetric properties simultaneously. We use a power coefficient to redistribute the data so that it approximates symmetry within the target range. To improve the robustness of the transformation, we further design a deviation-aware calibration and an adaptive soft boundary to address the issues of extreme deviation boosting and continuity breaking. Extensive experiments verify the performance of the proposed method and show that our transformation reliably improves INR compared with other data transformations. We further demonstrate the effectiveness and applicability of our method on 1D audio, 2D image, and 3D video fitting tasks.
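The core idea of redistributing data with a power coefficient so that it approximates symmetry inside a fixed target range can be illustrated with a small Python sketch. This is an assumption-laden illustration, not the authors' method. It assumes a target range of [-1, 1], treats "symmetry" simply as mapping the median of the data to the center of that range, and picks the exponent in closed form (`gamma = log(0.5) / log(median)`). The names `forward`, `inverse`, and `PowerParams` are hypothetical. The sketch does not model the deviation-aware calibration or the adaptive soft boundary. It does show why such a transform is reversible with negligible side information: only three scalars need to be stored.

```python
import math
import statistics
from dataclasses import dataclass


@dataclass
class PowerParams:
    """Hypothetical side information needed to invert the transform: three scalars."""
    lo: float
    hi: float
    gamma: float


def forward(values, eps=1e-6):
    """Map values to [-1, 1] with a power curve that sends the median to 0.

    Sketch under stated assumptions: min-max normalize to [0, 1], choose gamma
    so that median ** gamma == 0.5, then rescale to the symmetric range [-1, 1].
    """
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # Constant signal: nothing to redistribute, so use the identity exponent.
        return [0.0 for _ in values], PowerParams(lo, hi, 1.0)
    unit = [(v - lo) / span for v in values]
    # Keep the median strictly inside (0, 1) so both logarithms stay finite.
    med = min(max(statistics.median(unit), eps), 1.0 - eps)
    gamma = math.log(0.5) / math.log(med)  # always > 0 because both logs are negative
    return [2.0 * u ** gamma - 1.0 for u in unit], PowerParams(lo, hi, gamma)


def inverse(transformed, params):
    """Undo forward() using only the stored scalars."""
    span = params.hi - params.lo
    out = []
    for y in transformed:
        # Clamp to [0, 1] in case a fitted network overshoots the target range.
        u = min(max((y + 1.0) / 2.0, 0.0), 1.0)
        out.append(params.lo + span * u ** (1.0 / params.gamma))
    return out
```

Applied to a skewed signal such as `[0.0, 0.1, 0.2, 0.3, 4.0]`, `forward` places the median sample at 0 and the extremes at -1 and 1, and `inverse` recovers the original values up to floating-point error. A closed-form exponent was chosen here only for simplicity. Any monotone power curve whose parameters are stored alongside the model would be reversible in the same way.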