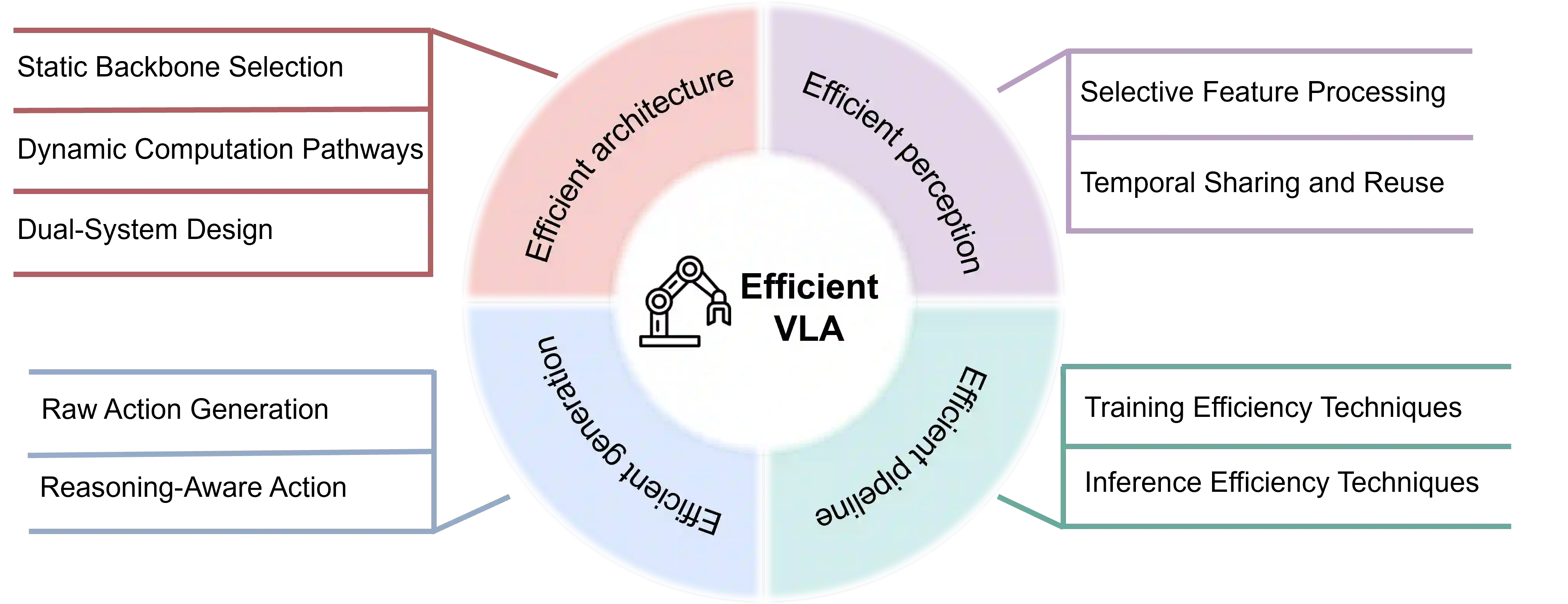

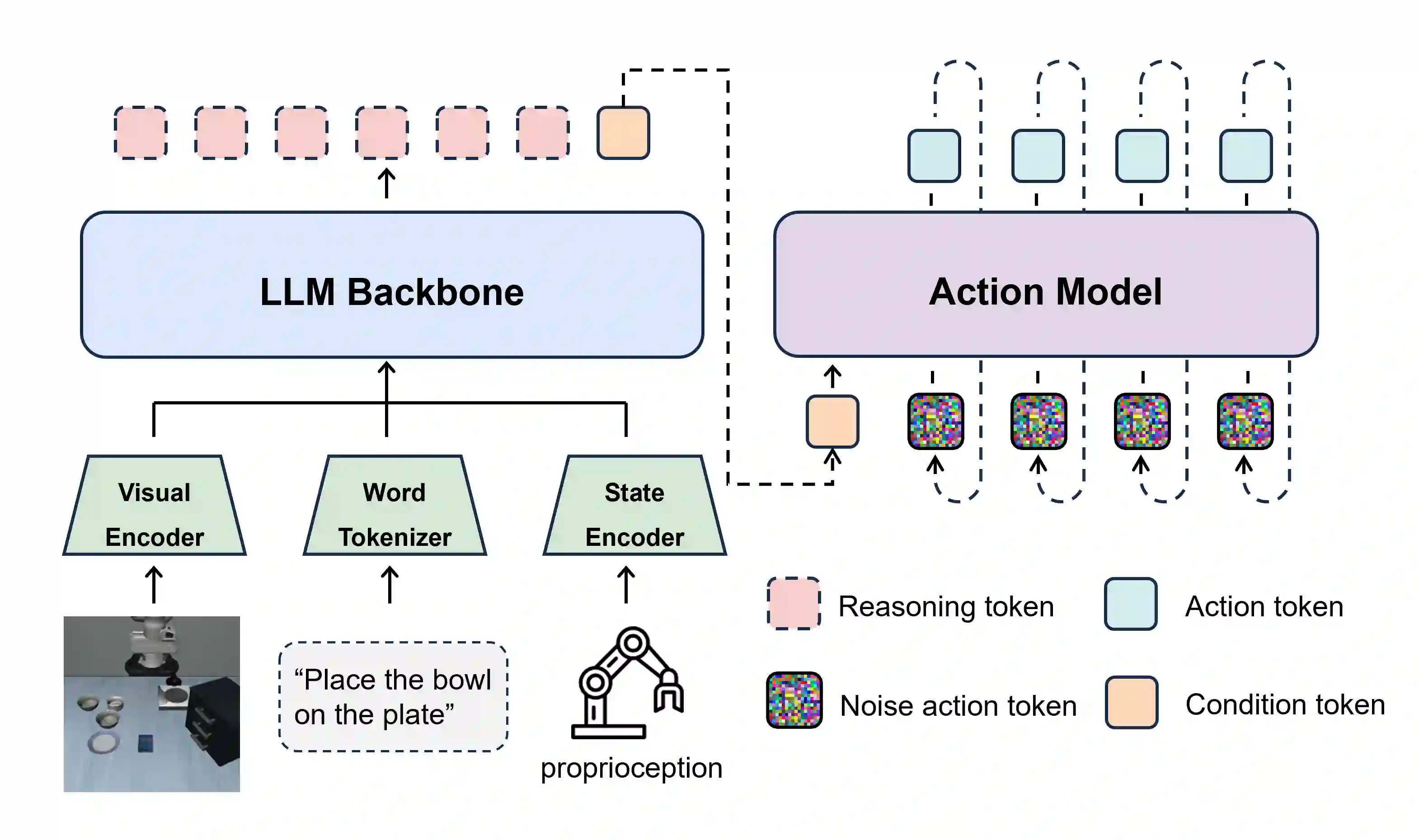

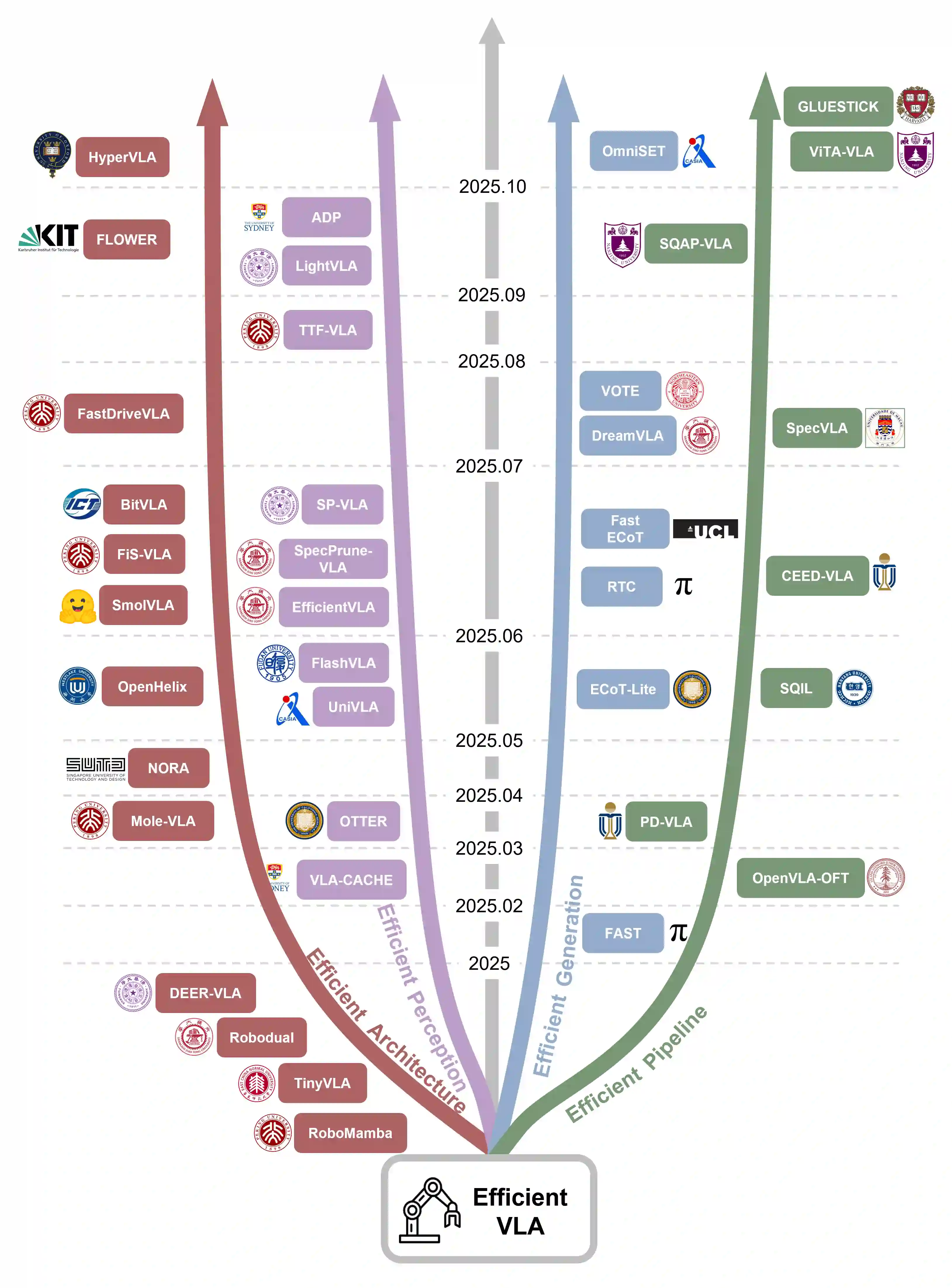

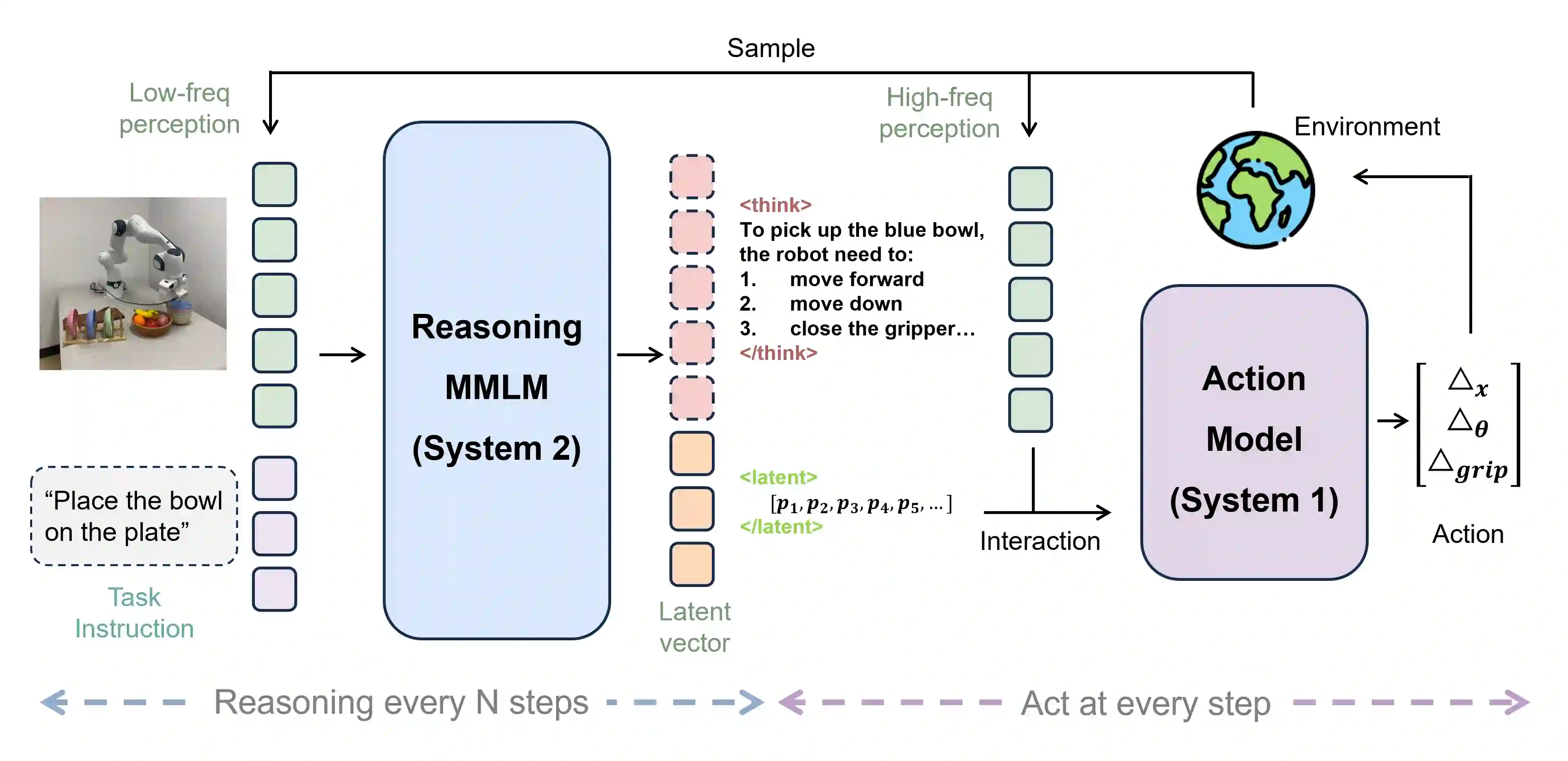

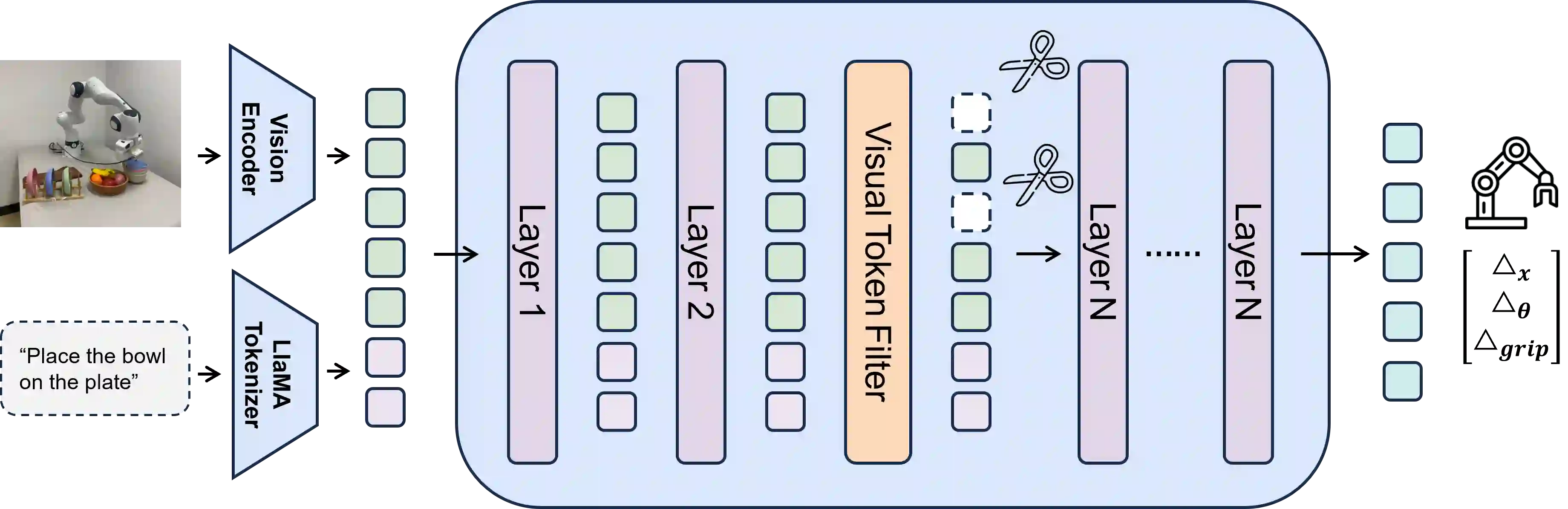

Vision-Language-Action (VLA) models extend vision-language models to embodied control by mapping natural-language instructions and visual observations to robot actions. Despite their capabilities, VLA systems face significant challenges due to their massive computational and memory demands, which conflict with the constraints of edge platforms such as on-board mobile manipulators that require real-time performance. Addressing this tension has become a central focus of recent research. In light of the growing efforts toward more efficient and scalable VLA systems, this survey provides a systematic review of approaches for improving VLA efficiency, with an emphasis on reducing latency, memory footprint, and training and inference costs. We categorize existing solutions into four dimensions: model architecture, perception feature, action generation, and training/inference strategies, summarizing representative techniques within each category. Finally, we discuss future trends and open challenges, highlighting directions for advancing efficient embodied intelligence.

翻译:视觉-语言-动作(VLA)模型通过将自然语言指令和视觉观测映射为机器人动作,将视觉-语言模型扩展至具身控制领域。尽管具备强大能力,VLA系统仍面临巨大挑战:其庞大的计算与内存需求与边缘平台(如需要实时性能的机载移动操控器)的硬件约束存在根本矛盾。解决这一矛盾已成为近期研究的核心焦点。随着学界对构建更高效、可扩展VLA系统的努力日益增长,本综述系统性地回顾了提升VLA效率的方法,重点关注降低延迟、内存占用以及训练与推理成本。我们将现有解决方案归纳为四个维度:模型架构、感知特征、动作生成以及训练/推理策略,并总结了各维度内的代表性技术。最后,我们探讨了未来趋势与开放挑战,为推进高效具身智能的发展指明方向。