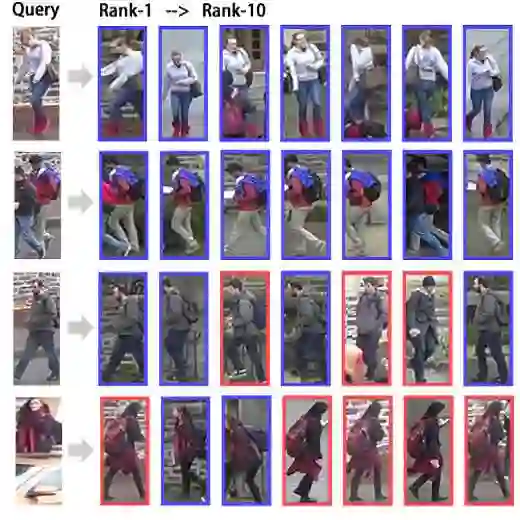

Text-to-image person retrieval (TIPR) aims to identify a target person from a textual description, and its central challenge is modality heterogeneity. Prior works have addressed this by developing cross-modal global or local alignment strategies. However, global methods typically overlook fine-grained cross-modal differences, whereas local methods require prior information to explore explicit part alignments. Additionally, current methods are English-centric, which restricts their application in multilingual contexts. To alleviate these issues, we pioneer the multilingual TIPR task by developing a multilingual TIPR benchmark, for which we use large language models to produce initial translations and then refine them by integrating domain-specific knowledge. Correspondingly, we propose Bi-IRRA, a Bidirectional Implicit Relation Reasoning and Aligning framework that learns alignment across languages and modalities. Within Bi-IRRA, a bidirectional implicit relation reasoning module enables bidirectional prediction of masked image and text, implicitly enhancing the modeling of local relations across languages and modalities, while a multi-dimensional global alignment module bridges the modality heterogeneity. The proposed method achieves new state-of-the-art results on all multilingual TIPR datasets. Data and code are available at https://github.com/Flame-Chasers/Bi-IRRA.
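To make the idea of global cross-modal alignment concrete, the following is a minimal sketch of a generic symmetric contrastive (CLIP-style) loss over paired image and text embeddings. It is an illustration only, not the multi-dimensional global alignment module of Bi-IRRA, whose exact formulation is not given in this abstract. The function names, the toy embeddings, and the temperature value of 0.07 are all assumptions introduced for the example.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Average of image-to-text and text-to-image contrastive losses.

    Assumes image_embs[i] and text_embs[i] describe the same person.
    The temperature is a hypothetical default, not a value from the paper.
    """
    logits = [
        [cosine_similarity(img, txt) / temperature for txt in text_embs]
        for img in image_embs
    ]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    return 0.5 * (cross_entropy_diagonal(logits) + cross_entropy_diagonal(logits_t))


# Toy batch of two image embeddings and two candidate caption sets (made-up values).
image_embs = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]   # caption i matches image i
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]   # captions deliberately mismatched

loss_matched = symmetric_contrastive_loss(image_embs, matched_texts)
loss_swapped = symmetric_contrastive_loss(image_embs, swapped_texts)
print(loss_matched < loss_swapped)
```

In a multilingual setting, the same loss could in principle be applied to captions in each language against the shared image embeddings, although how Bi-IRRA combines languages and modalities is left to the paper itself.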
