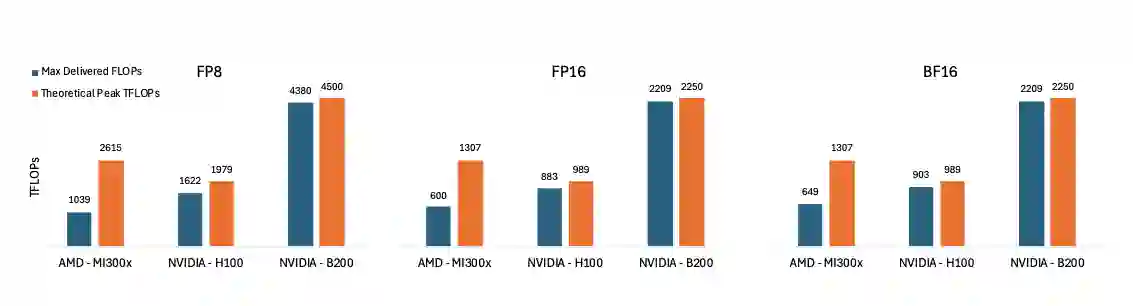

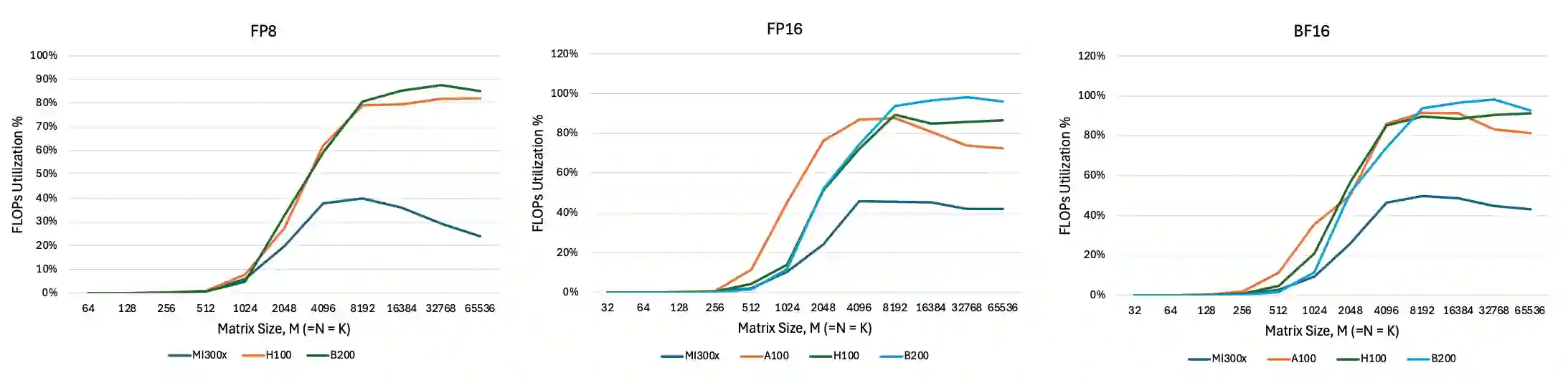

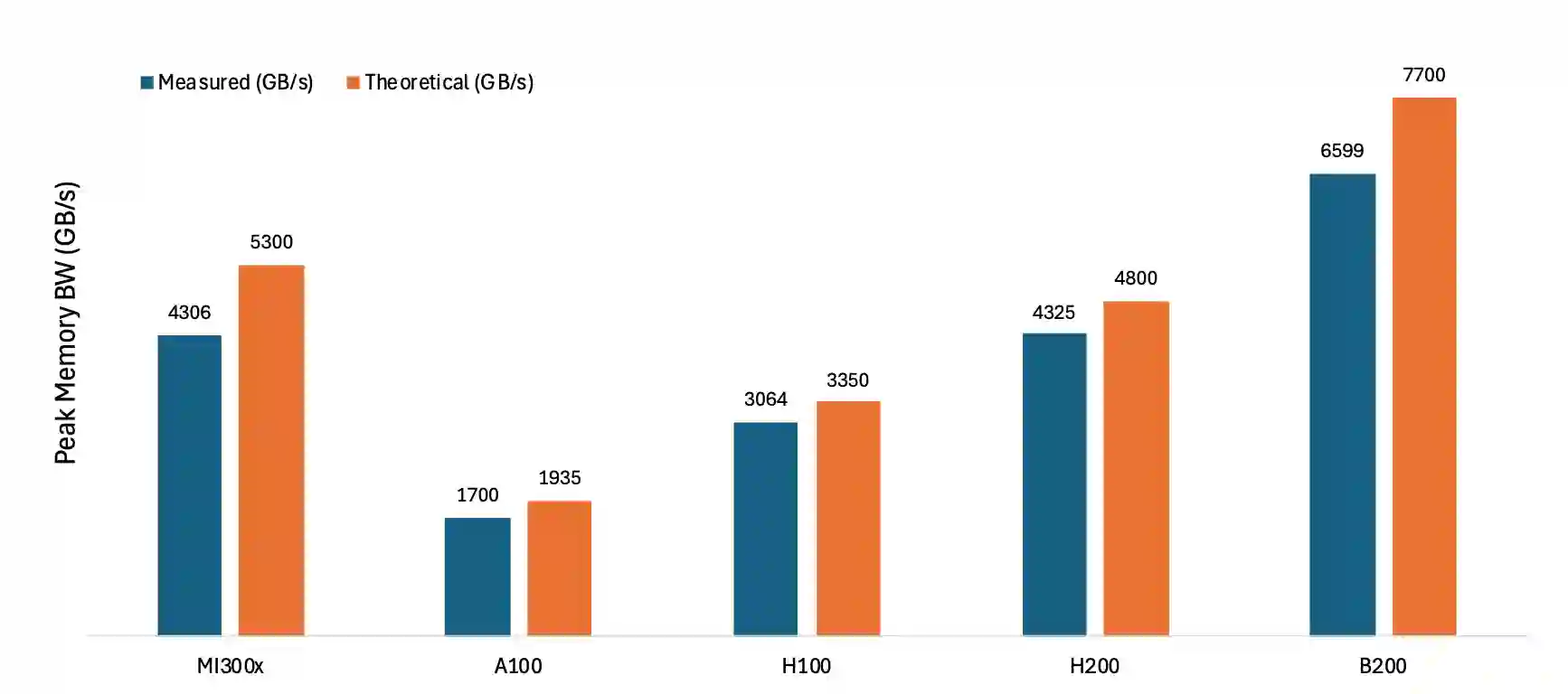

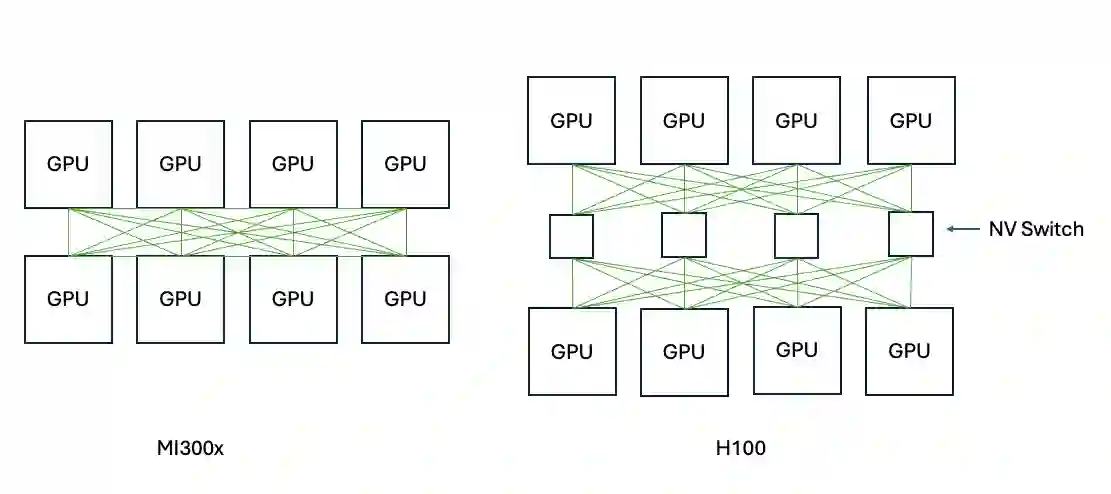

The rapid growth of large language models (LLMs) has driven the need for high-performance, scalable GPU hardware capable of efficiently serving models with hundreds of billions of parameters. While NVIDIA GPUs have traditionally dominated LLM deployments due to their mature CUDA software stack and state-of the-art accelerators, AMD's latest MI300X GPUs offer a compelling alternative, featuring high HBM capacity, matrix cores, and their proprietary interconnect. In this paper, we present a comprehensive evaluation of the AMD MI300X GPUs across key performance domains critical to LLM inference including compute throughput, memory bandwidth, and interconnect communication.

翻译:大型语言模型(LLMs)的快速增长推动了对高性能、可扩展GPU硬件的需求,这些硬件需要能够高效地服务于具有数千亿参数的模型。尽管NVIDIA GPU凭借其成熟的CUDA软件栈和先进的加速器在LLM部署中一直占据主导地位,但AMD最新的MI300X GPU提供了一个引人注目的替代方案,其具备高HBM容量、矩阵核心以及专有的互连技术。本文对AMD MI300X GPU在LLM推理关键性能领域进行了全面评估,包括计算吞吐量、内存带宽和互连通信。