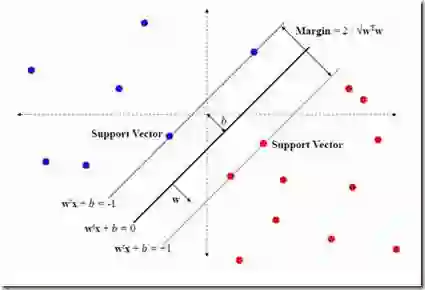

Mathematical modelling, particularly through approaches such as structured sparse support vector machines (SS-SVMs), plays a crucial role in processing data with complex feature structures, yet efficient algorithms for large-scale distributed data remain lacking. To address this gap, this paper proposes a unified optimization framework based on a consensus structure. The framework applies to a variety of loss functions and combined regularization terms and can also be extended to non-convex regularizers, demonstrating its extensibility. Building on this framework, we develop a distributed parallel alternating direction method of multipliers (ADMM) algorithm to efficiently solve SS-SVMs when the data are stored in a distributed manner. To ensure convergence, we incorporate a Gaussian back-substitution technique. Additionally, for completeness, we introduce a family of sparse group Lasso support vector machines (SGL-SVMs) and apply it to music information retrieval. Theoretical analysis confirms that the computational complexity of the proposed algorithm is independent of the choice of regularization terms and loss functions, underscoring the universality of the parallel approach. Experiments on both synthetic and real-world music archive datasets validate the reliability, stability, and efficiency of our algorithm.
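To make the consensus structure concrete, the sketch below solves $\min_{w_1,\dots,w_M,z} \sum_{m=1}^{M} L_m(w_m) + \lambda_1\|z\|_1 + \lambda_2\sum_g \|z_g\|_2$ subject to $w_m = z$ for every data shard $m$. It is plain (Jacobi-style) consensus ADMM, not the authors' algorithm, and it rests on several assumptions: the Gaussian back-substitution correction is omitted; a squared hinge loss replaces the hinge loss so the local step is differentiable and can be solved inexactly by gradient descent; there is no intercept; and the "workers" are simulated as data shards in one process. All function names and parameter values are hypothetical.

```python
import numpy as np


def prox_sparse_group_lasso(v, groups, t1, t2):
    """Proximal map of t1*||z||_1 + t2*sum_g ||z_g||_2: soft-threshold, then group shrink."""
    z = np.sign(v) * np.maximum(np.abs(v) - t1, 0.0)
    for g in groups:
        norm = np.linalg.norm(z[g])
        z[g] = 0.0 if norm <= t2 else (1.0 - t2 / norm) * z[g]
    return z


def local_update(X, y, n_total, v, rho, w0, steps=20):
    """Approximately minimise the local squared-hinge loss + (rho/2)||w - v||^2 by gradient descent."""
    lip = 2.0 * np.linalg.norm(X, 2) ** 2 / n_total + rho  # Lipschitz bound of the gradient
    w = w0.copy()
    for _ in range(steps):
        slack = np.maximum(0.0, 1.0 - y * (X @ w))  # per-sample hinge slack
        grad = -2.0 * X.T @ (y * slack) / n_total + rho * (w - v)
        w -= grad / lip
    return w


def consensus_admm_sgl_svm(shards, groups, lam1, lam2, rho=0.5, iters=200):
    """Consensus ADMM (hypothetical helper): local copies w_m are driven to agree with z."""
    p = shards[0][0].shape[1]
    n_total = sum(X.shape[0] for X, _ in shards)
    M = len(shards)
    W = np.zeros((M, p))  # local primal copies, one row per worker
    U = np.zeros((M, p))  # scaled dual variables
    z = np.zeros(p)       # global consensus variable
    for _ in range(iters):
        # 1) local updates are independent, so each worker could run its own in parallel
        for m, (X, y) in enumerate(shards):
            W[m] = local_update(X, y, n_total, z - U[m], rho, W[m])
        # 2) global update: prox of the regulariser at the average of w_m + u_m
        z = prox_sparse_group_lasso((W + U).mean(axis=0), groups,
                                    lam1 / (M * rho), lam2 / (M * rho))
        # 3) dual ascent on the consensus constraints w_m = z
        U += W - z
    return z, W


# Synthetic example: 5 groups of 4 features, only the first two groups are informative.
rng = np.random.default_rng(0)
p, n = 20, 400
groups = [np.arange(4 * k, 4 * k + 4) for k in range(5)]
w_true = np.zeros(p)
w_true[:4], w_true[4:8] = 1.0, -1.0
X = rng.standard_normal((n, p))
y = np.sign(X @ w_true)
shards = [(X[i::4], y[i::4]) for i in range(4)]  # 4 simulated workers
z, W = consensus_admm_sgl_svm(shards, groups, lam1=0.01, lam2=0.02)
acc = np.mean(np.sign(X @ z) == y)
print("training accuracy:", acc)
print("group norms:", [round(float(np.linalg.norm(z[g])), 3) for g in groups])
```

In this sketch, changing the regulariser only means replacing the proximal map in step 2, and changing the loss only touches `local_update`; the three-step consensus loop itself stays the same.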
