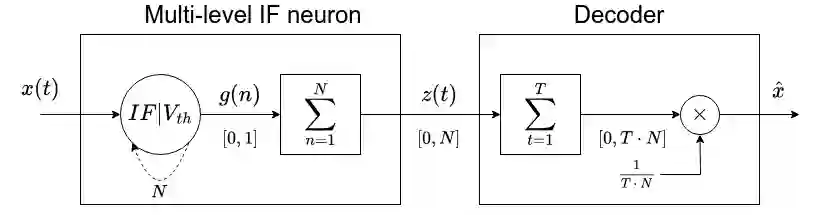

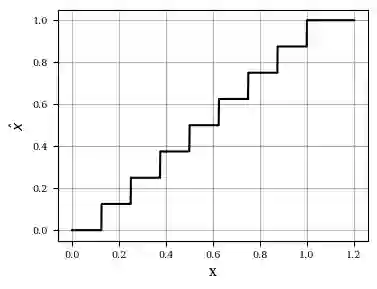

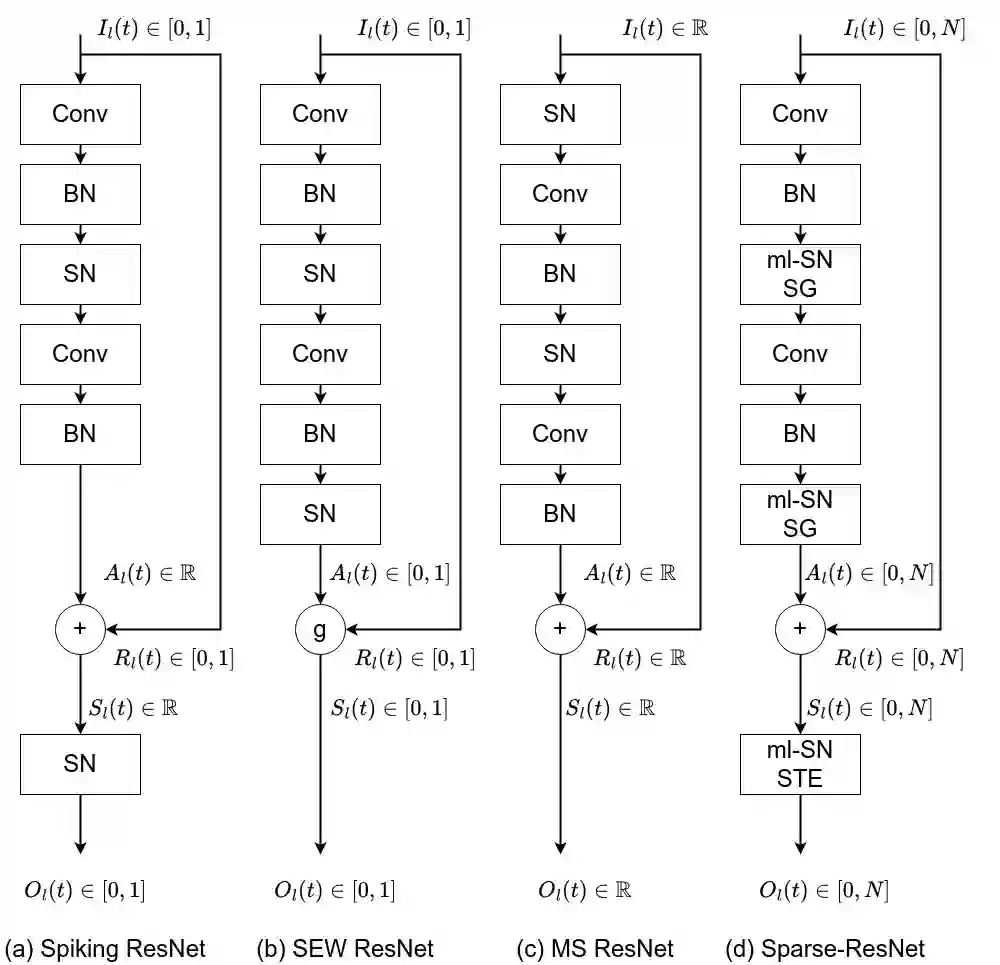

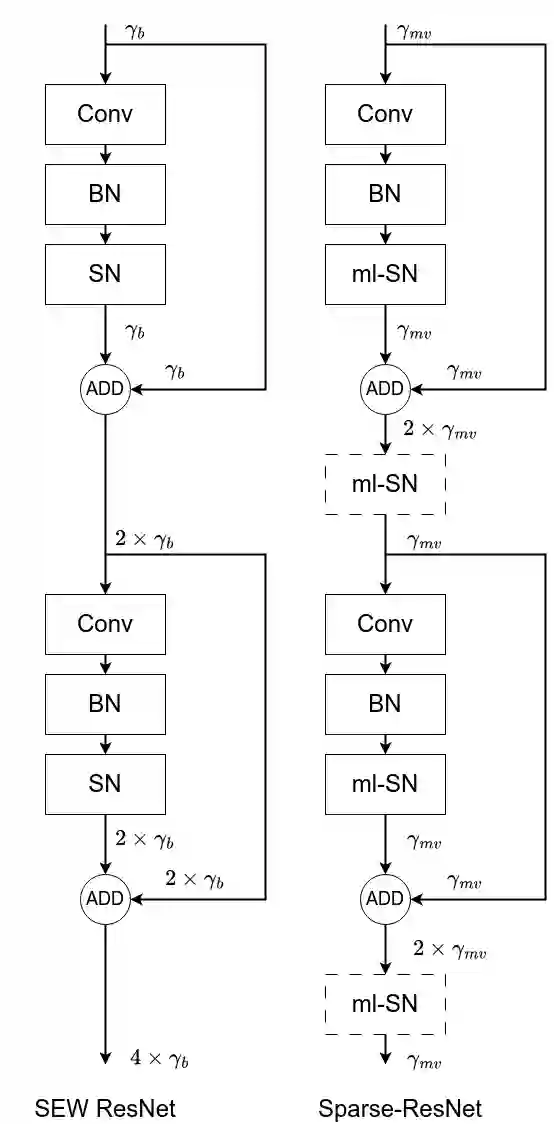

Spiking Neural Networks (SNNs) are among the most promising bio-inspired neural network models and have drawn increasing attention in recent years. The event-driven communication mechanism of SNNs allows for sparse and theoretically low-power operation on dedicated neuromorphic hardware. However, the binary nature of instantaneous spikes also causes considerable information loss in SNNs, resulting in accuracy degradation. To address this issue, we propose a multi-level spiking neuron model that provides both low quantization error and minimal inference latency while approaching the performance of full-precision Artificial Neural Networks (ANNs). Experimental results with popular network architectures and datasets show that multi-level spiking neurons provide better information compression, thereby allowing a reduction in latency without performance loss. Compared to binary SNNs in image classification scenarios, multi-level SNNs reduce energy consumption by a factor of 2 to 3, depending on the number of quantization intervals. On neuromorphic data, our approach drastically reduces the inference latency to 1 timestep, a 10-fold compression compared to previously published results. At the architectural level, we propose a new residual architecture that we call Sparse-ResNet. Through a careful analysis of spike propagation in residual connections, we highlight a spike avalanche effect that affects most spiking residual architectures. With our Sparse-ResNet architecture, we achieve state-of-the-art accuracy in image classification while reducing network activity by more than 20% compared to previous spiking ResNets.
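To make the idea of a multi-level spiking neuron concrete, here is a minimal sketch. It assumes (this is not specified in the abstract) an integrate-and-fire neuron with soft reset that, at each timestep, emits an integer spike value in {0, ..., L}, where L plays the role of the number of quantization levels; L = 1 recovers the usual binary spiking neuron. The function name `multilevel_if_neuron` and its parameters `threshold` and `levels` are hypothetical, and the authors' actual neuron model may differ.

```python
from typing import List


def multilevel_if_neuron(inputs: List[float], threshold: float = 1.0,
                         levels: int = 1) -> List[int]:
    """Hypothetical multi-level integrate-and-fire neuron (illustrative sketch).

    At each timestep the membrane potential integrates the input, and the
    neuron emits an integer spike value s in {0, ..., levels}: the number of
    thresholds crossed, clipped to `levels`. With levels=1 this is a standard
    binary IF neuron. The transmitted amount s * threshold is subtracted
    from the membrane potential (soft reset), so residual charge is kept.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v += x  # integrate the input current into the membrane potential
        # count how many thresholds were crossed; never emit a negative spike
        s = min(max(int(v // threshold), 0), levels)
        v -= s * threshold  # soft reset: remove only what was transmitted
        spikes.append(s)
    return spikes


if __name__ == "__main__":
    # Single timestep with an input of 2.5:
    print(multilevel_if_neuron([2.5], levels=1))  # [1]
    print(multilevel_if_neuron([2.5], levels=3))  # [2]
```

With a single timestep, the binary neuron can only transmit 1 out of an input of 2.5 (a quantization error of 1.5), whereas the 3-level neuron transmits 2 (an error of 0.5). Under these assumptions, this is the intuition behind trading extra spike levels for fewer timesteps, i.e. lower inference latency.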
