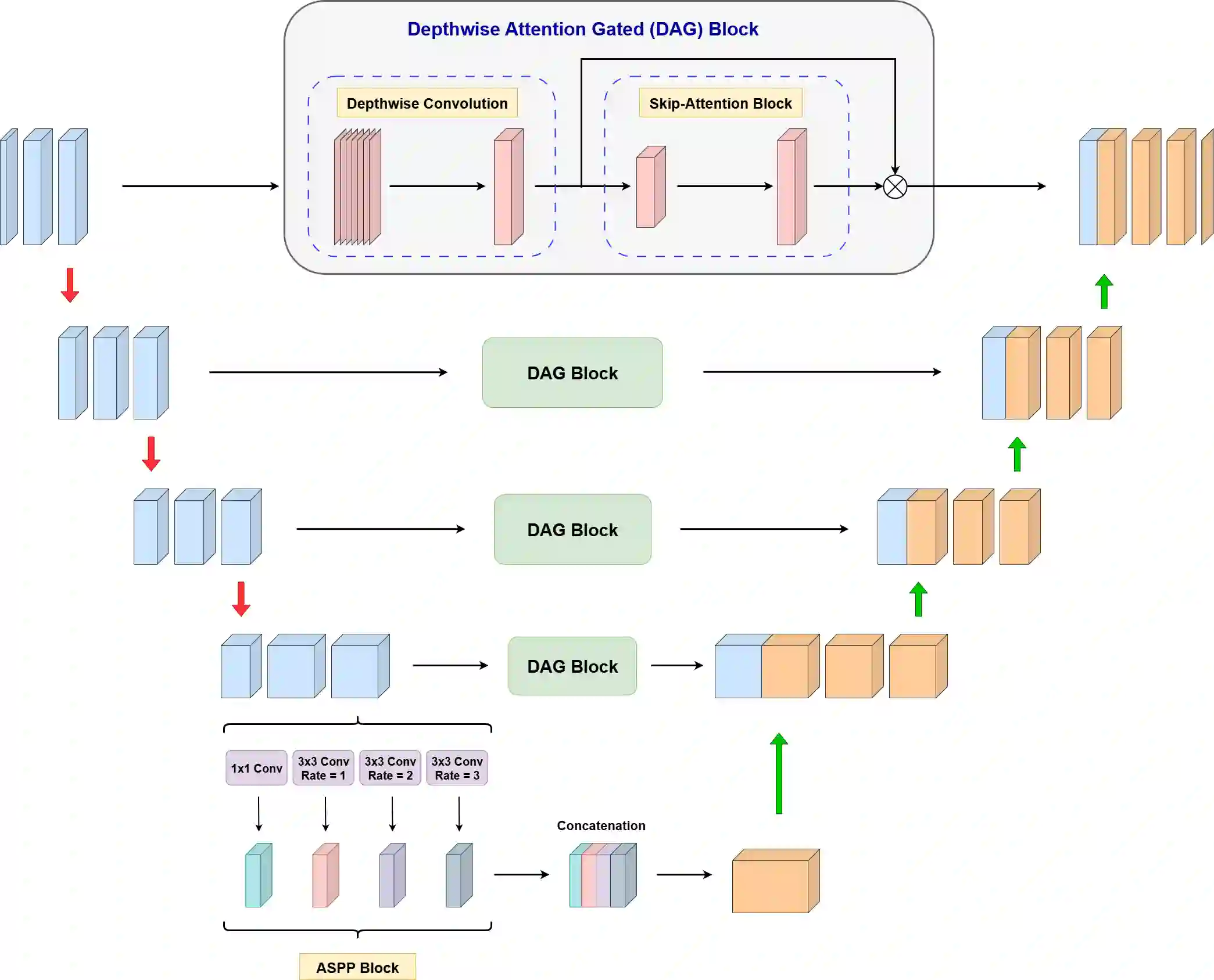

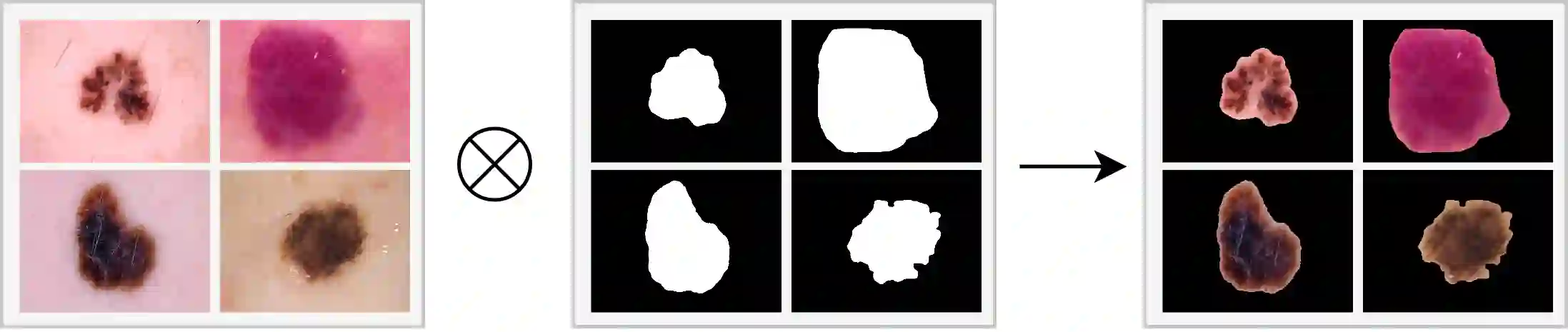

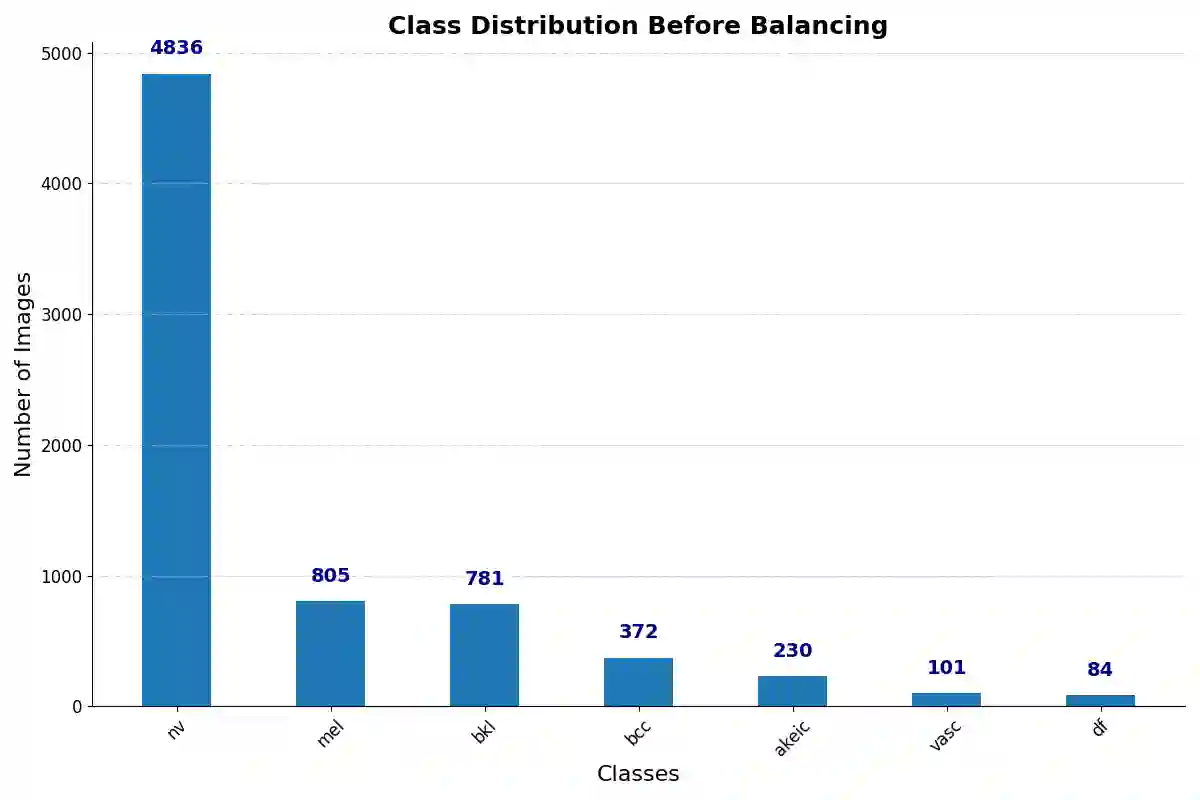

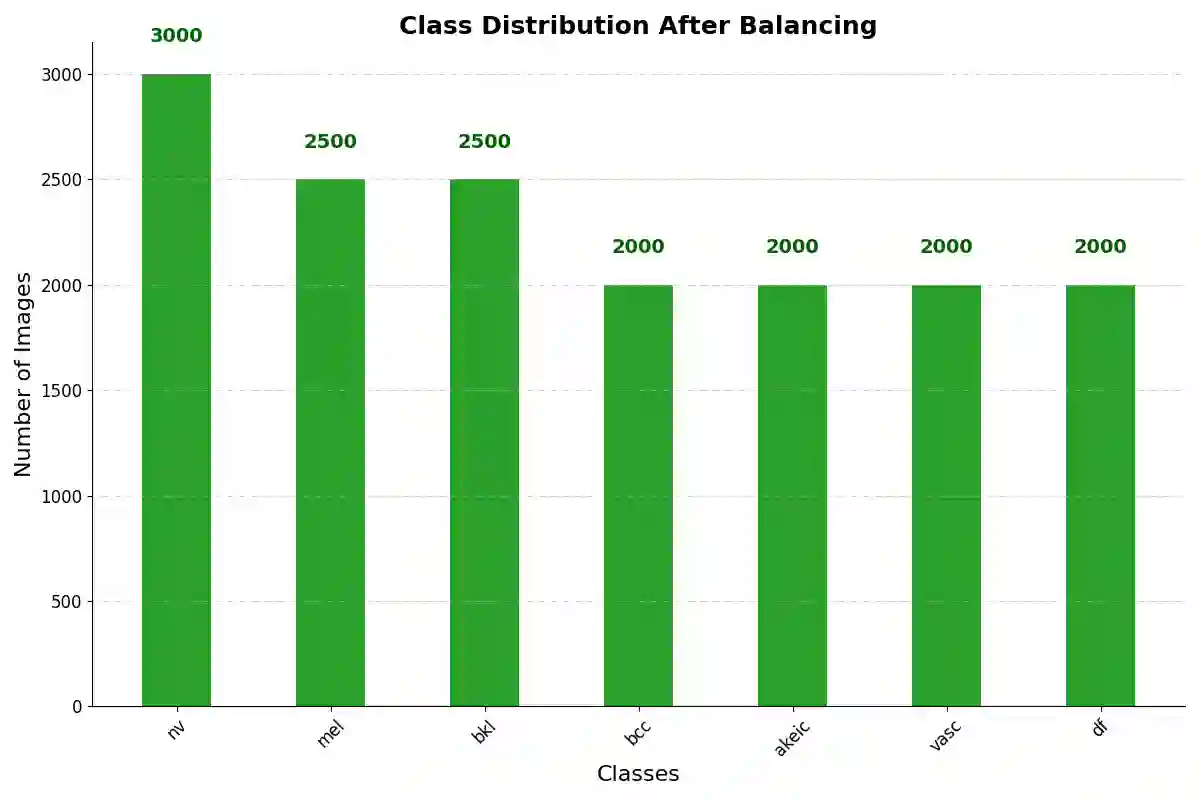

Skin cancer is a life-threatening disease where early detection significantly improves patient outcomes. Automated diagnosis from dermoscopic images is challenging due to high intra-class variability and subtle inter-class differences. Many deep learning models operate as "black boxes," limiting clinical trust. In this work, we propose a dual-encoder attention-based framework that leverages both segmented lesions and clinical metadata to improve the accuracy and interpretability of skin lesion classification. A novel Deep-UNet architecture with Dual Attention Gates (DAG) and Atrous Spatial Pyramid Pooling (ASPP) is first employed to segment lesions. The classification stage uses two DenseNet201 encoders, one on the original image and another on the segmented lesion, whose features are fused via multi-head cross-attention. This dual-input design guides the model to focus on salient pathological regions. In addition, a transformer-based module incorporates patient metadata (age, sex, lesion site) into the prediction. We evaluate our approach on the HAM10000 dataset and the ISIC 2018 and 2019 challenges. The proposed method achieves state-of-the-art segmentation performance and significantly improves classification accuracy and average AUC compared to baseline models. To validate our model's reliability, we use Gradient-weighted Class Activation Mapping (Grad-CAM) to generate heatmaps. These visualizations confirm that our model's predictions are based on the lesion area, unlike models that rely on spurious background features. These results demonstrate that integrating precise lesion segmentation and clinical data with attention-based fusion leads to a more accurate and interpretable skin cancer classification model.
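
For readers unfamiliar with the two attention-related components named above, the standard formulations can be sketched as follows. This is an illustrative sketch, not the authors' exact formulation: the abstract does not say which encoder supplies the queries, so we assume here that the original-image features $F_{\mathrm{img}}$ act as queries and the segmented-lesion features $F_{\mathrm{les}}$ act as keys and values. The symbols $F_{\mathrm{img}}$, $F_{\mathrm{les}}$, $F_{\mathrm{fused}}$, the projection matrices $W_i^Q, W_i^K, W_i^V, W^O$, the head count $h$, and the key dimension $d_k$ are all notation introduced for this sketch.

$$
\mathrm{head}_i = \mathrm{softmax}\!\left(\frac{\left(F_{\mathrm{img}} W_i^{Q}\right)\left(F_{\mathrm{les}} W_i^{K}\right)^{\top}}{\sqrt{d_k}}\right) F_{\mathrm{les}} W_i^{V},
\qquad
F_{\mathrm{fused}} = \mathrm{Concat}\!\left(\mathrm{head}_1, \dots, \mathrm{head}_h\right) W^{O}
$$

Grad-CAM, in its standard form, weights each feature map $A^k$ of a chosen convolutional layer by the spatially averaged gradient of the class score $y^c$, then keeps only the positive evidence:

$$
\alpha_k^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A^{k}_{ij}},
\qquad
L^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\!\left(\sum_{k} \alpha_k^{c} A^{k}\right)
$$

Here $Z$ is the number of spatial positions in the feature map. Which layer of the classifier the heatmaps are computed from is not stated in the abstract.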
