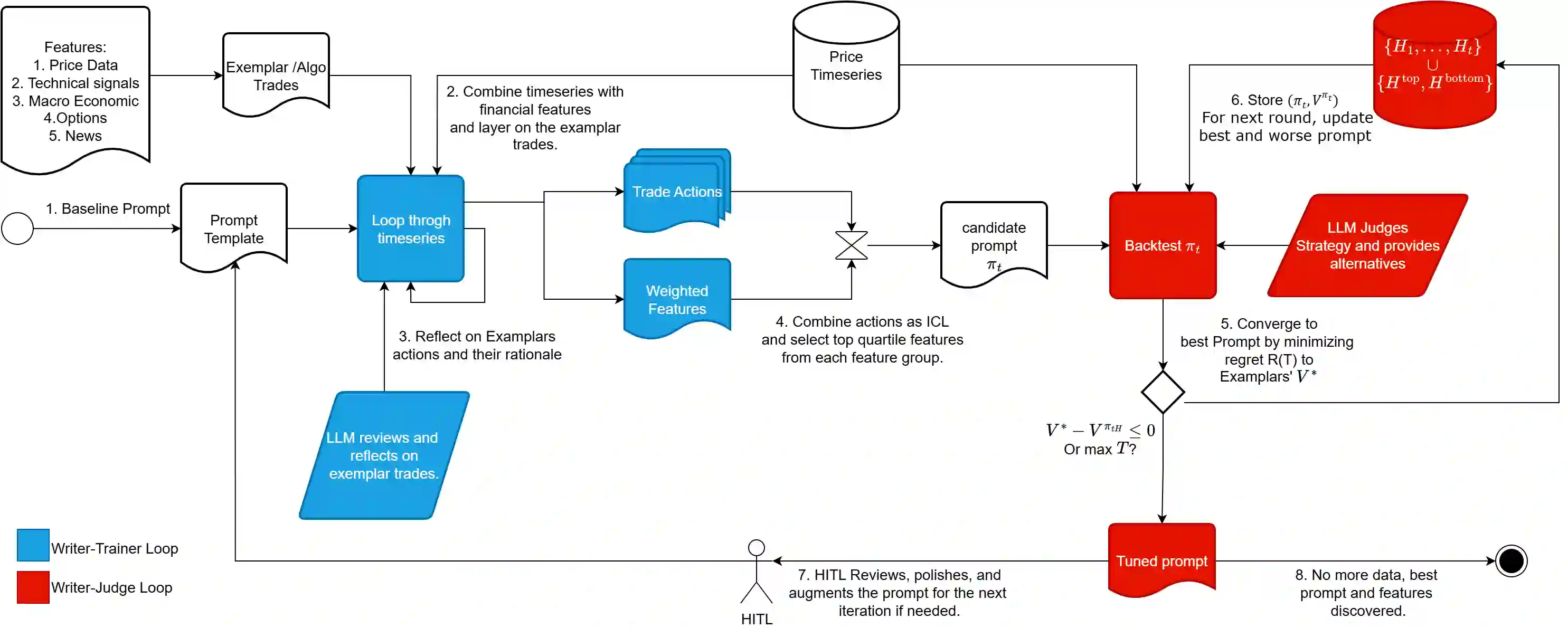

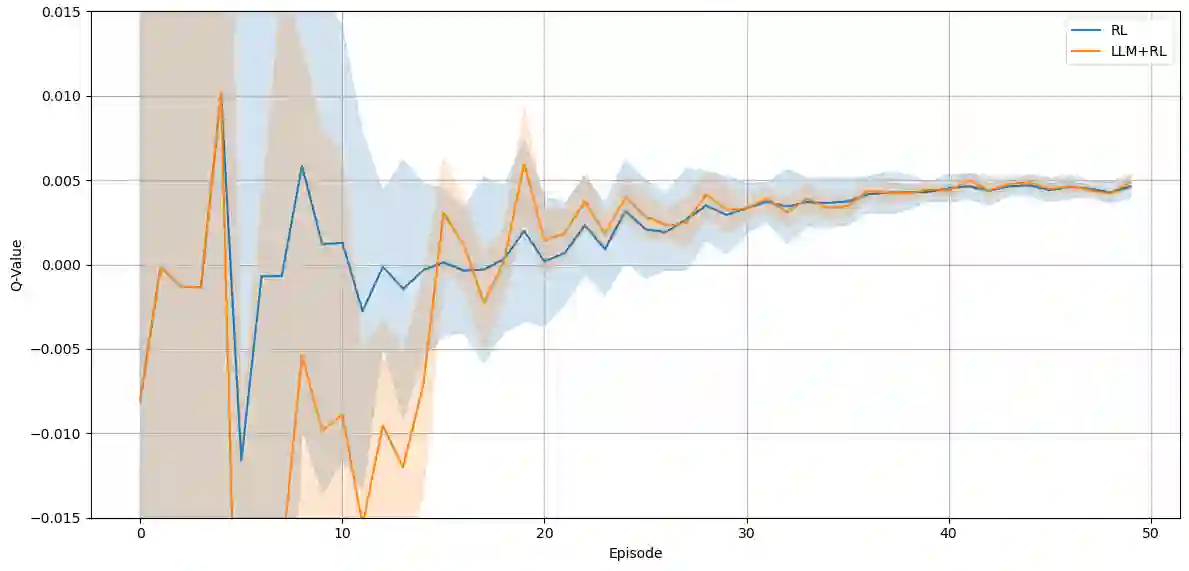

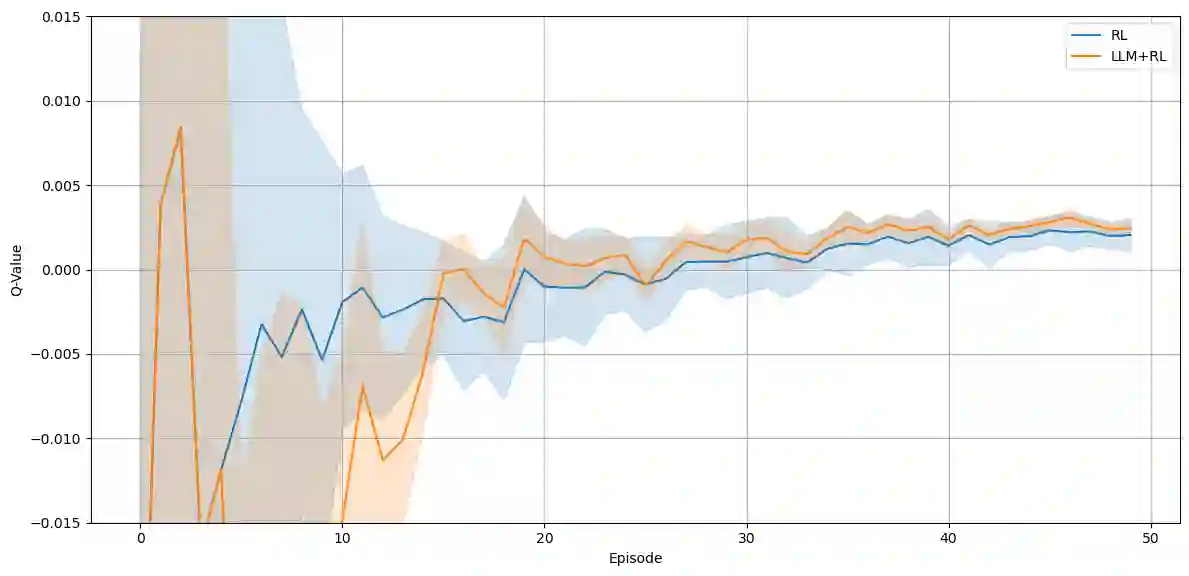

Algorithmic trading requires short-term tactical decisions consistent with long-term financial objectives. Reinforcement Learning (RL) has been applied to such problems, but adoption is limited by myopic behaviour and opaque policies. Large Language Models (LLMs) offer complementary strategic reasoning and multi-modal signal interpretation when guided by well-structured prompts. This paper proposes a hybrid framework in which LLMs generate high-level trading strategies to guide RL agents. We evaluate (i) the economic rationale of LLM-generated strategies through expert review, and (ii) the performance of LLM-guided agents against unguided RL baselines using Sharpe Ratio (SR) and Maximum Drawdown (MDD). Empirical results indicate that LLM guidance improves both return and risk metrics relative to standard RL.
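The two evaluation metrics named above can be made concrete with a minimal sketch. It assumes per-period simple returns, a 252-period trading year, and the sample standard deviation. The function names and parameters are illustrative and are not taken from the paper, which may use different conventions for annualisation or the risk-free rate.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free_rate=0.0, periods_per_year=252):
    """Annualised Sharpe Ratio from per-period simple returns.

    Assumption: risk_free_rate is an annual rate, spread evenly across periods.
    """
    excess = [r - risk_free_rate / periods_per_year for r in returns]
    sd = stdev(excess)  # sample standard deviation; needs at least 2 returns
    if sd == 0:
        return float("nan")  # ratio is undefined when returns do not vary
    return mean(excess) / sd * math.sqrt(periods_per_year)


def max_drawdown(returns):
    """Largest peak-to-trough decline of the compounded equity curve.

    Returned as a positive fraction, e.g. 0.5 for a 50% drawdown.
    """
    equity = 1.0  # equity curve starts at 1 (assumed normalisation)
    peak = 1.0
    worst = 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, (peak - equity) / peak)
    return worst
```

Under these conventions, a higher SR and a lower MDD both indicate better risk-adjusted performance, which is the sense in which an LLM-guided agent would be compared with an unguided RL baseline.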
